High Availability Cluster

Latest revision as of 15:57, 18 July 2019

Note: This article is about the old stable Proxmox VE 3.x releases. See High Availability for the current release.

Introduction

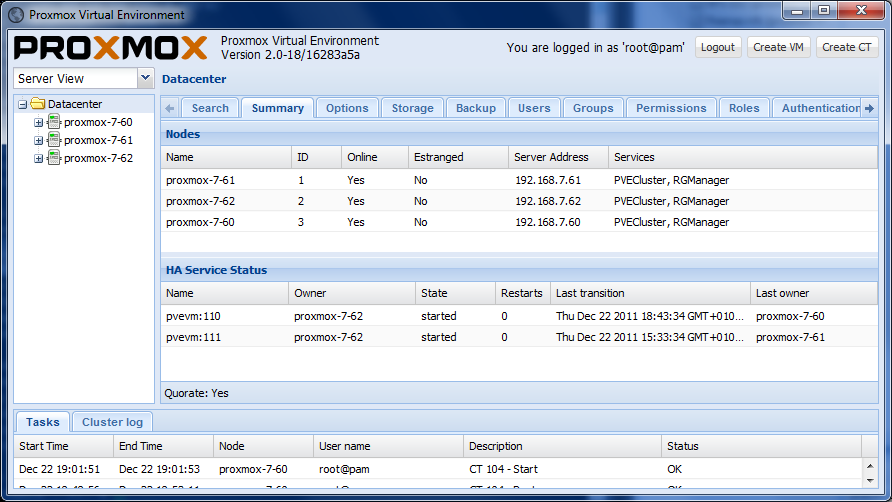

Proxmox VE High Availability Cluster (Proxmox VE HA Cluster) enables the definition of highly available virtual machines. Put simply, if a virtual machine (VM) is configured as HA and its physical host fails, the VM is automatically restarted on one of the remaining Proxmox VE cluster nodes.

The Proxmox VE HA Cluster is built on proven Linux HA technologies from the Red Hat cluster suite (including rgmanager and fenced), providing a stable and reliable HA service.

Update to the latest version

Before you start, make sure the latest packages are installed by running the following on all nodes:

apt-get update && apt-get dist-upgrade
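If you have several nodes, the same command can be run from one node over SSH. The following is a minimal sketch, assuming root can SSH to every node by name (a Proxmox VE cluster normally sets this up) and using hypothetical node names. Setting `DRY_RUN=1` prints the commands instead of running them.

```shell
#!/bin/bash
# Sketch: run the package update on each cluster node in turn, over SSH.
# Assumption: root can SSH to every node by name (node names are examples).
# With DRY_RUN=1 set, commands are printed (prefixed with "+") instead of run.

run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

update_all_nodes() {
    local node
    for node in "$@"; do
        echo "== ${node} =="
        # -t allocates a terminal so you can answer the dist-upgrade prompt
        run ssh -t "root@${node}" 'apt-get update && apt-get dist-upgrade' || return 1
    done
}

# Example: update_all_nodes node1 node2 node3
```

The loop handles one node at a time and stops at the first node where the command fails (`return 1`), so a failed upgrade is not repeated on the remaining nodes.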

System requirements

If you run HA, use only high-end server hardware with no single point of failure. This includes redundant disks (hardware RAID), redundant power supplies, UPS systems and network bonding.

- Fencing device(s): reliable and TESTED! NOTE: these are REQUIRED; there is no software fencing.

- A fully configured Proxmox VE 2.0 Cluster (version 2.0 and later) with at least 3 nodes (maximum supported configuration: currently 16 nodes per cluster). With certain limitations, a 2-node configuration is also possible (see Two-Node High Availability Cluster).

- Shared storage (SAN, or NAS/NFS, as the virtual disk image store for HA KVM guests)

- A reliable, redundant, suitably configured network

- Separate networks: one for cluster communication, one for VM traffic and one for storage traffic

- NFS for Containers
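The minimum of three nodes follows from majority quorum: the cluster stays quorate only while more than half of the expected votes are present. Assuming one vote per node (the default), the arithmetic can be sketched as follows; the function names are illustrative only.

```shell
#!/bin/bash
# Sketch of majority quorum arithmetic, assuming one vote per node.
# quorum = floor(votes / 2) + 1; failures tolerated = votes - quorum.

quorum() {
    echo $(( $1 / 2 + 1 ))
}

tolerated_failures() {
    echo $(( $1 - $(quorum "$1") ))
}

# Example: tolerated_failures 3
```

With 2 nodes the quorum is 2, so losing either node costs quorum; this is why a 2-node setup needs the special handling described in Two-Node High Availability Cluster. 3 nodes tolerate 1 failed node, 4 nodes still only 1, 5 nodes 2, and 16 nodes 7.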

It is essential to use redundant network connections (bonding) for the cluster communication. Otherwise, a simple switch reboot (or a power loss on the switch) can lock all HA nodes (see bug #105).
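To confirm that the cluster network really is redundant, you can count the bonded links that are up in the kernel's bonding status file. This is a sketch that assumes the Linux bonding driver's `/proc/net/bonding/<bond>` format and a bond named `bond0` (an example name); pass another status file if your bond is called differently.

```shell
#!/bin/bash
# Sketch: count slave interfaces of a Linux bond whose MII status is "up".
# Assumption: the bond is called bond0 unless another status file is given.

bond_slaves_up() {
    local status_file="${1:-/proc/net/bonding/bond0}"
    [ -r "$status_file" ] || { echo "cannot read $status_file" >&2; return 2; }
    # The first "MII Status" line after each "Slave Interface" line belongs
    # to that slave; the one near the top of the file describes the bond itself.
    awk '
        /^Slave Interface:/         { in_slave = 1; next }
        in_slave && /^MII Status:/  { if ($3 == "up") up++; in_slave = 0 }
        END                         { print up + 0 }
    ' "$status_file"
}

check_bond_redundant() {
    local up
    up="$(bond_slaves_up "$@")" || return 2
    if [ "$up" -lt 2 ]; then
        echo "WARNING: only $up bonded link(s) up, cluster network is not redundant" >&2
        return 1
    fi
    echo "OK: $up bonded links up"
}
```

For example, `check_bond_redundant` (or `check_bond_redundant /proc/net/bonding/bond1`) can be run on each node after unplugging one cable, to see that the warning appears and the cluster keeps running.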

HA Configuration

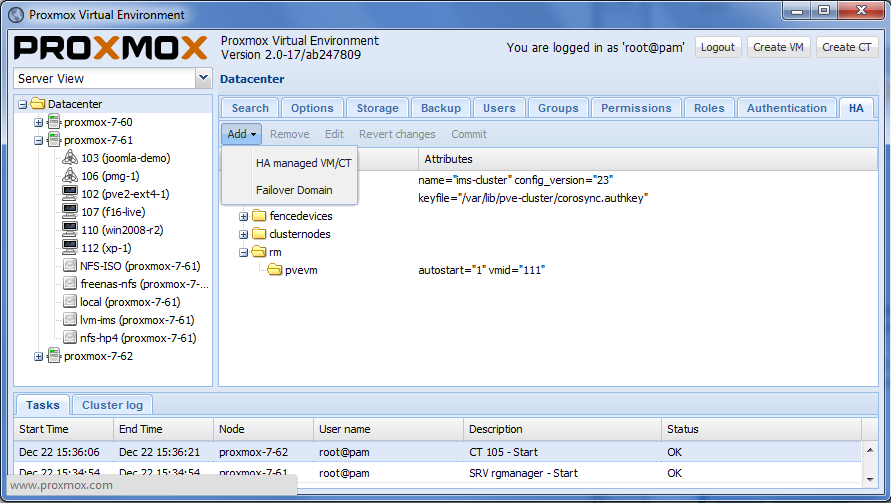

VMs and containers should be added to HA and managed via the GUI. Fence devices can only be configured on the command line.

Fencing

Fencing is an essential part of Proxmox VE HA (version 2.0 and later); without fencing, HA will not work. REMEMBER: you NEED at least one fencing device for every node. Detailed steps to configure and test fencing can be found in the Fencing article.
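Once fencing is configured, it should be tested for every node. The sketch below wraps `ccs_config_validate` and `fence_node` from the list of useful command line tools; the function name is hypothetical. Run it on a node other than the one being fenced, because the target really is reset or powered off. `DRY_RUN=1` prints the commands instead.

```shell
#!/bin/bash
# Sketch: validate the cluster configuration, then fence one *other* node.
# WARNING: without DRY_RUN=1 the target node is really reset or powered off.

run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

test_fence() {
    local target="$1"
    if [ -z "$target" ]; then
        echo "usage: test_fence NODE" >&2
        return 2
    fi
    # Never fence the node we are running on
    if [ "$target" = "$(hostname)" ] || [ "$target" = "$(hostname -s)" ]; then
        echo "refusing to fence the local node ($target)" >&2
        return 1
    fi
    run ccs_config_validate || return 1
    run fence_node "$target"
}
```

Afterwards, `fence_tool ls` shows the state of the fence domain. Repeat the test for each node in turn, since every node needs a working fencing device.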

Configure VM or Containers for HA

Check once more that you have everything you need (see System requirements) and that all systems are running reliably. It makes no sense to set up an HA cluster on unreliable hardware.

Enable a KVM VM or a Container for HA

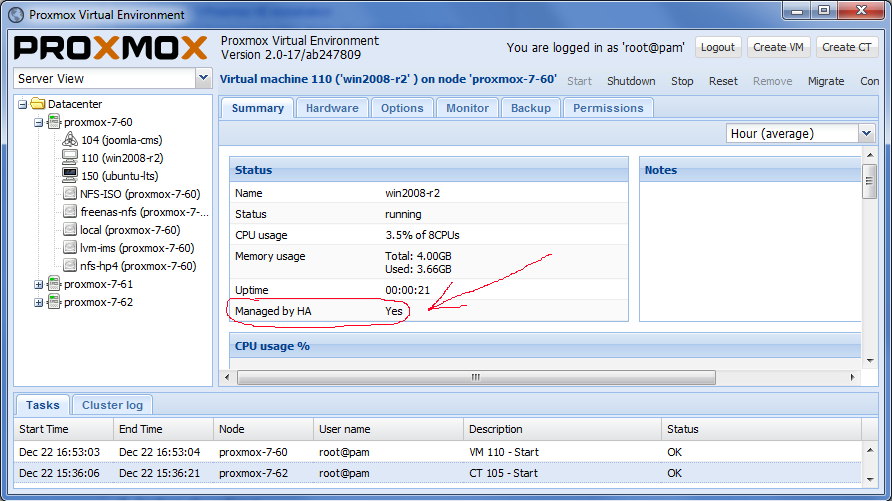

See also the video tutorial on the Proxmox VE YouTube channel.

Note: Make sure that the VMs or CTs are not running when you add them to HA.
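The GUI is the recommended way to manage HA guests, but rgmanager's `clusvcadm` can also start, stop and migrate them, for example from scripts. This sketch assumes that each HA guest appears as a service named `pvevm:<VMID>`; check the service names in your own `clustat` output before relying on it. The helper names are hypothetical, and `DRY_RUN=1` prints the commands.

```shell
#!/bin/bash
# Sketch: start, stop or live-migrate an HA guest through rgmanager.
# Assumption: HA guests appear in clustat as services named "pvevm:<VMID>".

run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

ha_service() {
    # A VMID consists of digits only (e.g. 101)
    case "$1" in
        ''|*[!0-9]*) echo "invalid VMID: '$1'" >&2; return 1 ;;
    esac
    echo "pvevm:$1"
}

ha_start() { local s; s="$(ha_service "$1")" || return 1; run clusvcadm -e "$s"; }
ha_stop()  { local s; s="$(ha_service "$1")" || return 1; run clusvcadm -d "$s"; }

# Live-migrate the guest to another cluster member
ha_migrate() {
    local s
    [ -n "$2" ] || { echo "usage: ha_migrate VMID NODE" >&2; return 2; }
    s="$(ha_service "$1")" || return 1
    run clusvcadm -M "$s" -m "$2"
}
```

For example, `ha_migrate 101 node2` would ask rgmanager to move guest 101 to the hypothetical member `node2`.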

HA Cluster maintenance (node reboots)

If you need to reboot a node, e.g. after a kernel update, you must stop rgmanager first. This stops all HA resources on the node and moves them to other nodes. All KVM guests receive an ACPI shutdown request (if this does not work because of a VM-internal setting, they are simply stopped).

You can stop the rgmanager service via the GUI or by running:

/etc/init.d/rgmanager stop

The command takes a while; monitor the "Tasks" and the VMs and CTs in the GUI. As soon as rgmanager has stopped, you can reboot the node. Once the node is up again, continue with the next node, and so on.
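The per-node procedure can be sketched as a small function (the name is hypothetical). It assumes, as described above, that `/etc/init.d/rgmanager stop` returns only after rgmanager has stopped, and it refuses to reboot if the stop fails. `DRY_RUN=1` prints the commands instead of running them.

```shell
#!/bin/bash
# Sketch: prepare the local node for a maintenance reboot.
# Stopping rgmanager stops the HA guests on this node and moves them to
# other nodes; only after that has succeeded is the node rebooted.

run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

maintenance_reboot() {
    if ! run /etc/init.d/rgmanager stop; then
        echo "rgmanager did not stop cleanly; not rebooting" >&2
        return 1
    fi
    # Show where the HA services are running now, for a last check
    run clustat
    run reboot
}
```

Run it on one node at a time, and wait until the rebooted node shows up as online in `clustat` before moving on to the next one.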

Video Tutorials

Proxmox VE YouTube channel

Certified Configurations and Examples

- Intel Modular Server HA

Testing

Before going into production, test as much as possible, in particular the fencing of every node.

Useful command line tools

Here is a list of useful CLI tools:

- clustat - Cluster Status Utility

- clusvcadm - Cluster User Service Administration Utility

- ccs_config_validate - a utility to validate the cluster.conf file

- fence_tool - a utility for the fenced daemon

- fence_node - a utility to run fence agents

- fence_ack_manual - a utility to manually manage fencing using /bin/false
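`clustat` output is meant to be read by humans, but simple checks can be scripted. The sketch below lists HA services that are not in the `started` state and returns non-zero if there are any. It assumes the usual `clustat` layout, where each service line starts with the service name (for example `pvevm:101`) and ends with the state; verify this against the output on your own cluster. The function name is hypothetical.

```shell
#!/bin/bash
# Sketch: print HA services whose state is not "started".
# Reads clustat output on stdin, e.g.:  clustat | ha_not_started
# Assumption: service lines look like "pvevm:101   node1   started",
# i.e. the service name (type:id) comes first and the state last.

ha_not_started() {
    awk '
        $1 ~ /^[A-Za-z]+:[^:]+$/ && $NF != "started" { print $1, $NF; bad = 1 }
        END { exit (bad ? 1 : 0) }
    '
}
```

For example, `clustat | ha_not_started || echo "some HA services are not running"` gives a one-line check that fits into a monitoring script.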