Multicast notes

Bread-baker (talk | contribs)

Revision as of 12:05, 14 May 2012

Introduction

Multicast allows a single transmission to be delivered to multiple servers at the same time.

This is the basis for cluster communications in Proxmox VE 2.0.

If multicast does not comply with your corporate IT policy, use unicast instead.

Troubleshooting

Not all hosting companies allow multicast traffic.

Some switches have multicast disabled by default.

Test if multicast is working between two nodes

Copied from a post by e100 on the forum.

- This uses ssmping.

Install it on all nodes:

aptitude install ssmping

Run this on Node A:

ssmpingd

Then on Node B:

asmping 224.0.2.1 ip_for_NODE_A_here

Example output:

asmping joined (S,G) = (*,224.0.2.234)
pinging 192.168.8.6 from 192.168.8.5
unicast from 192.168.8.6, seq=1 dist=0 time=0.221 ms
unicast from 192.168.8.6, seq=2 dist=0 time=0.229 ms
multicast from 192.168.8.6, seq=2 dist=0 time=0.261 ms
unicast from 192.168.8.6, seq=3 dist=0 time=0.198 ms
multicast from 192.168.8.6, seq=3 dist=0 time=0.213 ms
unicast from 192.168.8.6, seq=4 dist=0 time=0.234 ms
multicast from 192.168.8.6, seq=4 dist=0 time=0.248 ms
unicast from 192.168.8.6, seq=5 dist=0 time=0.249 ms
multicast from 192.168.8.6, seq=5 dist=0 time=0.263 ms
unicast from 192.168.8.6, seq=6 dist=0 time=0.250 ms
multicast from 192.168.8.6, seq=6 dist=0 time=0.264 ms
unicast from 192.168.8.6, seq=7 dist=0 time=0.245 ms
multicast from 192.168.8.6, seq=7 dist=0 time=0.260 ms
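When the output is long, it can help to count the replies instead of reading them. The following is a minimal sketch, not part of ssmping: count_replies is a hypothetical helper that reads asmping output on standard input and counts the "unicast from" and "multicast from" lines. It returns a non-zero status when no multicast reply was seen, which usually means multicast is not getting through between the two nodes.

```shell
# Hypothetical helper (not shipped with ssmping): summarize asmping output.
# Reads the output on stdin, prints the reply counts, and returns 0 only
# if at least one multicast reply was received.
count_replies() {
    awk '
        /^[ \t]*unicast from/   { u++ }
        /^[ \t]*multicast from/ { m++ }
        END {
            printf "unicast=%d multicast=%d\n", u, m
            exit (m > 0 ? 0 : 1)
        }'
}
```

One way to use it is to save the asmping output to a file (asmping.log is an assumed name) and run count_replies < asmping.log. Unicast replies without any multicast replies suggest that the nodes can reach each other but the network is dropping multicast.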

For more information, see:

man ssmping

and

less /usr/share/doc/ssmping/README.gz

ssmping notes

- There are a few other programs included in ssmping which may be of use. Here is a list of the files in the package:

apt-file list ssmping

ssmping: /usr/bin/asmping
ssmping: /usr/bin/mcfirst
ssmping: /usr/bin/ssmping
ssmping: /usr/bin/ssmpingd
ssmping: /usr/share/doc/ssmping/README.gz
ssmping: /usr/share/doc/ssmping/changelog.Debian.gz
ssmping: /usr/share/doc/ssmping/copyright
ssmping: /usr/share/man/man1/asmping.1.gz
ssmping: /usr/share/man/man1/mcfirst.1.gz
ssmping: /usr/share/man/man1/ssmping.1.gz
ssmping: /usr/share/man/man1/ssmpingd.1.gz

- If you want to use apt-file, do this:

aptitude install apt-file
apt-file update

Then set up a cron job to run apt-file update weekly or monthly.
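One possible way to set up the weekly job is to drop a small script into /etc/cron.weekly, which Debian's cron runs once a week. This is a sketch under assumptions: the function name install_apt_file_cron and the script name apt-file-update are made up for illustration, and the target directory can be overridden with the first argument.

```shell
# Hypothetical helper: install a weekly cron script that refreshes the
# apt-file cache. The first argument is the cron directory and defaults
# to /etc/cron.weekly (an assumption about a Debian-style layout).
install_apt_file_cron() {
    cron_dir="${1:-/etc/cron.weekly}"
    job="$cron_dir/apt-file-update"   # assumed file name, no dots in it

    # Write a two-line script that runs apt-file update quietly.
    printf '%s\n' '#!/bin/sh' 'apt-file update >/dev/null 2>&1' > "$job" || return 1

    # cron only runs executable files from this directory.
    chmod 755 "$job"
}
```

Run install_apt_file_cron as root to install the job. For a monthly refresh instead, pass /etc/cron.monthly as the argument.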

Multicast with Infiniband

IP over Infiniband (IPoIB) supports multicast, but multicast traffic is limited to 2044 bytes when using connected mode, even if you set a larger MTU on the IPoIB interface.

Corosync has a setting, netmtu, that defaults to 1500, making it compatible with connected-mode Infiniband.
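To see which mode an IPoIB interface is using and what MTU is set on it, the values can be read from sysfs. The sketch below assumes the interface is called ib0 and that the IPoIB driver exposes a mode file under /sys/class/net/ib0/; ipoib_info is a hypothetical helper name, and the second argument exists only so the sysfs root can be overridden.

```shell
# Hypothetical helper: print the IPoIB mode and MTU of an interface.
# Usage: ipoib_info [interface] [sysfs net directory]
ipoib_info() {
    iface="${1:-ib0}"                  # assumed interface name
    sysroot="${2:-/sys/class/net}"
    dir="$sysroot/$iface"

    if [ ! -d "$dir" ]; then
        echo "$iface: interface not found" >&2
        return 1
    fi

    # "mode" is expected to contain "datagram" or "connected".
    mode=$(cat "$dir/mode" 2>/dev/null || echo unknown)
    mtu=$(cat "$dir/mtu" 2>/dev/null || echo unknown)
    echo "$iface mode=$mode mtu=$mtu"
}
```

Calling ipoib_info with no arguments checks ib0. A large interface MTU reported here does not lift the 2044 byte limit on multicast described above.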

Changing netmtu

Changing the netmtu can increase throughput. The following information is untested.

Edit the /etc/pve/cluster.conf file and add the section:

<totem netmtu="2044" />

The resulting file should look similar to this:

<?xml version="1.0"?>

<cluster name="clustername" config_version="2">

<totem netmtu="2044" />

<cman keyfile="/var/lib/pve-cluster/corosync.authkey">

</cman>

<clusternodes>

<clusternode name="node1" votes="1" nodeid="1"/>

<clusternode name="node2" votes="1" nodeid="2"/>

<clusternode name="node3" votes="1" nodeid="3"/>

</clusternodes>

</cluster>
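After editing, a quick sanity check is to read the value back from the file. The sketch below is only an illustration: get_netmtu is a hypothetical helper that prints the netmtu attribute of the totem element using sed, and it does not validate the XML or confirm that Corosync has picked up the change.

```shell
# Hypothetical helper: print the netmtu value set on the <totem> element.
# Prints nothing if the attribute is absent, in which case the default applies.
get_netmtu() {
    conf="${1:-/etc/pve/cluster.conf}"
    sed -n 's/.*<totem[^>]*netmtu="\([0-9][0-9]*\)".*/\1/p' "$conf"
}
```

With the example file above, get_netmtu should print 2044. An empty result means no netmtu is set in the file.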

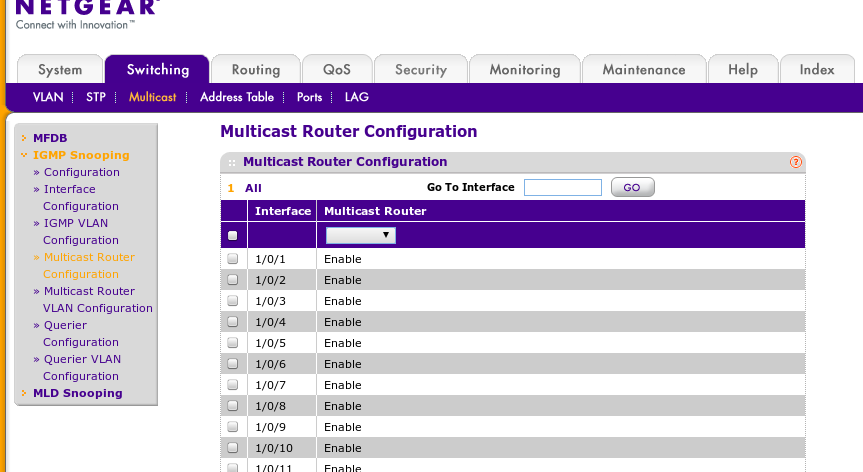

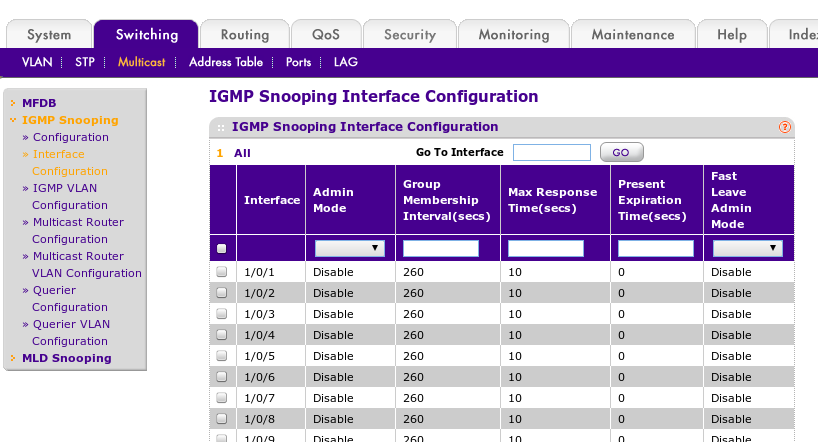

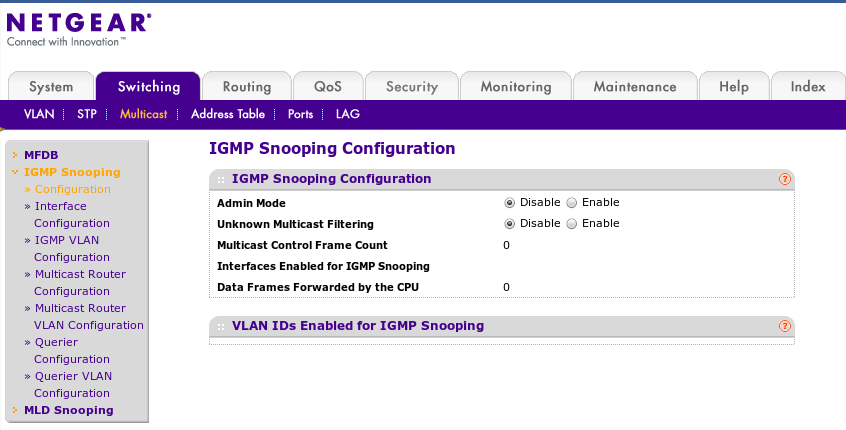

Netgear Managed Switches

the following are pics of setting to get multicast working on our netgear 7300 series switches. for more information see http://documentation.netgear.com/gs700at/enu/202-10360-01/GS700AT%20Series%20UG-06-18.html