Ceph Server


Revision as of 14:28, 4 July 2017

Introduction

Ceph is a distributed object store and file system designed to provide excellent performance, reliability and scalability. See more at http://ceph.com.

Proxmox VE supports using Ceph’s RADOS Block Device (RBD) for VM and container disks. The Ceph storage services are usually hosted on external, dedicated storage nodes. Such storage clusters can grow to several hundred nodes, providing petabytes of storage capacity.

For smaller deployments, it is also possible to run Ceph services directly on your Proxmox VE nodes. Recent hardware has plenty of CPU power and RAM, so running storage services and VMs/CTs on the same node is possible.

This article describes how to set up and run Ceph storage services directly on Proxmox VE nodes. If you want to install and configure an external Ceph storage, read the Ceph documentation. Configuring Proxmox VE to use an external Ceph storage works as described in the section Ceph Client.

Advantages

- Easy setup and management with CLI and GUI support on Proxmox VE

- Thin provisioning

- Snapshot support

- Self healing

- No single point of failure

- Scalable to the exabyte level

- Setup pools with different performance and redundancy characteristics

- Data is replicated, making it fault tolerant

- Runs on economical commodity hardware

- No need for hardware RAID controllers

- Easy management

- Open source

Why do we need a new command line tool (pveceph)?

pveceph is tailored to the specific Proxmox VE architecture. Proxmox VE provides a distributed file system (pmxcfs) to store configuration files.

We use this to store the Ceph configuration. The advantage is that all nodes see the same file, and there is no need to copy configuration data around using ssh/scp. The tool can also use additional information from your Proxmox VE setup.

Tools like ceph-deploy cannot take advantage of that architecture.

Recommended hardware

You need at least three identical servers for a redundant setup. Here are the specifications of one of our test lab clusters with Proxmox VE and Ceph (three nodes):

- Dual Xeon E5-2620v2, 64 GB RAM, Intel S2600CP mainboard, Intel RMM, Chenbro 2U chassis with eight 3.5” hot-swap drive bays, 2 fixed 2.5" SSD bays

- 10 GBit network for Ceph traffic (one Dual 10 Gbit Intel X540-T2 in each server, one 10Gb switch - Cisco SG350XG-2F1)

- Single enterprise class SSD for the Proxmox VE installation (because we run Ceph monitors there and quite a lot of logs), we use one Samsung SM863 240 GB per host.

- Use at least two SSDs as OSD drives. You need high-quality, enterprise-class SSDs here; never use consumer or "PRO" consumer SSDs. In our test setup, we have 4 Intel SSD DC S3520 1.2 TB 2.5" SATA SSDs per host for storing the data (OSD, no extra journal). This setup delivers about 14 TB of raw storage. With a redundancy of 3, you can store up to 4.7 TB (100%). But to be prepared for failed disks and hosts, you should never fill your storage to 100%.

- As a general rule, the more OSDs the better; a fast CPU (high GHz) is also recommended. NVMe cards are also possible, e.g. a mix of slow SATA disks with SSD/NVMe journal devices.
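The capacity figures above follow from simple arithmetic. This minimal sketch assumes plain replicated pools and ignores filesystem and metadata overhead; the optional fill percentage is a hypothetical planning margin, not a Ceph setting.

```shell
#!/bin/sh
# Rough usable capacity of a replicated Ceph pool, in decimal GB (integer math).
# usable_gb OSDS_PER_HOST HOSTS OSD_SIZE_GB REPLICAS [FILL_PERCENT]
usable_gb() {
    osds_per_host=$1 hosts=$2 osd_size_gb=$3 replicas=$4 fill=${5:-100}
    raw=$(( osds_per_host * hosts * osd_size_gb ))
    # every object is stored REPLICAS times, so only raw / REPLICAS is usable
    usable=$(( raw / replicas ))
    # optionally plan to use only FILL_PERCENT of it, as headroom for failures
    echo $(( usable * fill / 100 ))
}
```

For the test lab above, `usable_gb 4 3 1200 3` prints 4800 (14.4 TB raw, 4.8 TB usable; the 4.7 TB in the text comes from dividing the rounded 14 TB by 3), and `usable_gb 4 3 1200 3 80` prints 3840.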

Again, if you expect good performance, always use enterprise-class SSDs only. We have had good results in our test labs with:

- SATA SSDs:
  - Intel SSD DC S3520
  - Intel SSD DC S3610
  - Intel SSD DC S3700/S3710
  - Samsung SSD SM863
- NVMe PCIe 3.0 x4 as journal:
  - Intel SSD DC P3700

By adding more OSD SSD/disks into the free drive bays, the storage can be expanded. Of course, you can add more servers too as soon as your business is growing, without service interruption and with minimal configuration changes.

If you do not want to run virtual machines and Ceph on the same host, you can just add more Proxmox VE nodes and use these for running the guests and the others just for the storage.

Installation of Proxmox VE

Before you start with Ceph, you need a working Proxmox VE cluster with 3 nodes (or more). We install Proxmox VE on a fast and reliable enterprise-class SSD, so we can use all bays for OSD (Object Storage Devices) data. Just follow the well-known instructions on Installation and Cluster Manager.

Note:

Use ext4 if you install on SSD (at the boot prompt of the installation ISO you can specify parameters, e.g. "linux ext4 swapsize=4").

Ceph on Proxmox VE 5.0

Note: The current Ceph Luminous 12.1.x is a release candidate. For production-ready Ceph cluster packages, please wait for version 12.2.x.

If you need to roll out now, please install Proxmox VE 4.4 with Ceph Jewel and do an in-place upgrade to Proxmox VE 5.0 later, when Ceph Luminous is stable.

In Proxmox VE 5.0 the only available Ceph version is Luminous, so when you install the Ceph packages, use --version luminous instead of jewel.

Network for Ceph

All nodes need access to a separate 10Gb network interface, exclusively used for Ceph. We use network 10.10.10.0/24 for this tutorial.

It is highly recommended to use 10Gb for that network to avoid performance problems. Bonding can be used to increase availability.

If you do not have fast network switches, you can also set up a Full Mesh Network for Ceph Server.

First node

The network setup (ceph private network) from our first node contains:

    # from /etc/network/interfaces
    auto eth2
    iface eth2 inet static
            address  10.10.10.1
            netmask  255.255.255.0

Second node

The network setup (ceph private network) from our second node contains:

    # from /etc/network/interfaces
    auto eth2
    iface eth2 inet static
            address  10.10.10.2
            netmask  255.255.255.0

Third node

The network setup (ceph private network) from our third node contains:

    # from /etc/network/interfaces
    auto eth2
    iface eth2 inet static
            address  10.10.10.3
            netmask  255.255.255.0
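If you configure more nodes, the stanza can be generated instead of typed. This small sketch prints the tutorial's stanza for node number N; the interface name (eth2 by default) and the 10.10.10.0/24 addressing are the tutorial's example values, so adjust them to your hardware before appending the output to /etc/network/interfaces.

```shell
#!/bin/sh
# Print the Ceph private network stanza for node number N (10.10.10.N/24).
# ceph_net_stanza N [INTERFACE]
ceph_net_stanza() {
    node=$1 iface=${2:-eth2}
    printf 'auto %s\n' "$iface"
    printf 'iface %s inet static\n' "$iface"
    printf '        address  10.10.10.%s\n' "$node"
    printf '        netmask  255.255.255.0\n'
}
```

For example, `ceph_net_stanza 2` prints the block shown for the second node.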

Installation of Ceph packages

You now need to select 3 nodes and install the Ceph software packages there. We wrote a small command line utility called 'pveceph' which helps you perform these tasks; you can also choose the Ceph version. Log in to all your nodes and execute the following on each:

node1# pveceph install --version jewel

node2# pveceph install --version jewel

node3# pveceph install --version jewel

This sets up an 'apt' package repository in /etc/apt/sources.list.d/ceph.list and installs the required software.
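Instead of logging in to each node, you can also run the installation from one node over SSH. A minimal sketch, assuming root SSH access between the cluster nodes (as set up in a Proxmox VE cluster) and the example host names node1 to node3; setting SSH=echo prints the commands instead of running them.

```shell
#!/bin/sh
# Run "pveceph install" for one Ceph release on each listed node via SSH.
# install_ceph_on_nodes RELEASE NODE...
# Set SSH=echo to print the commands instead of running them (dry run).
install_ceph_on_nodes() {
    release=$1
    shift
    for node in "$@"; do
        echo "installing Ceph $release on $node" >&2
        ${SSH:-ssh} "root@$node" pveceph install --version "$release" || return 1
    done
}
```

For example `install_ceph_on_nodes jewel node1 node2 node3`, or with luminous on Proxmox VE 5.0.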

Create initial Ceph configuration

After installation of packages, you need to create an initial Ceph configuration on just one node, based on your private network:

node1# pveceph init --network 10.10.10.0/24

This creates an initial config at /etc/pve/ceph.conf. That file is automatically distributed to all Proxmox VE nodes by using pmxcfs. The command also creates a symbolic link from /etc/ceph/ceph.conf pointing to that file. So you can simply run Ceph commands without the need to specify a configuration file.
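To confirm the symbolic link on a node, a small check like the following can be used. It is a sketch: the two paths default to the ones named above and are parameters only so the function can be tried on scratch files; it relies on GNU readlink -f, which Debian-based Proxmox VE provides.

```shell
#!/bin/sh
# Check that the local Ceph config is a symlink resolving to the pmxcfs copy.
# check_ceph_conf_link [LINK] [TARGET]
check_ceph_conf_link() {
    link=${1:-/etc/ceph/ceph.conf} target=${2:-/etc/pve/ceph.conf}
    if [ -L "$link" ] && [ "$(readlink -f "$link")" = "$(readlink -f "$target")" ]; then
        echo "ok: $link -> $target"
    else
        echo "missing or wrong symlink: $link" >&2
        return 1
    fi
}
```

Run without arguments on a node, it reports whether /etc/ceph/ceph.conf points to /etc/pve/ceph.conf.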

Creating Ceph Monitors

After that you can create the first Ceph monitor service using:

node1# pveceph createmon

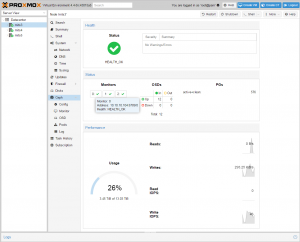

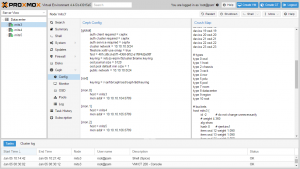

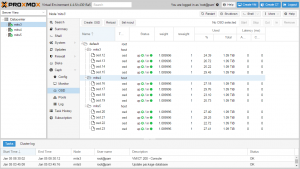

Continue with CLI or GUI

As soon as you have created the first monitor, you can start using the Proxmox GUI (see the video tutorial on Managing Ceph Server) to manage and view your Ceph configuration.

Of course, you can continue to use the command line tools (CLI). We continue with the CLI in this wiki article, but you should achieve the same results no matter which way you finish the remaining steps.

Creating more Ceph Monitors

You should run 3 monitors, one on each node. Create them via the GUI or via the CLI: log in to the next node and run:

node2# pveceph createmon

And execute the same steps on the third node:

node3# pveceph createmon
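The reason for running three monitors is quorum: the monitors only work while a majority of them is up. A short worked sketch of that arithmetic: with n monitors a quorum needs floor(n/2)+1 of them, so three monitors tolerate one failure, and a fourth one adds no extra tolerance.

```shell
#!/bin/sh
# Majority quorum arithmetic for N Ceph monitors.
quorum_size() {
    echo $(( $1 / 2 + 1 ))               # more than half of the monitors
}
tolerated_failures() {
    echo $(( $1 - ($1 / 2 + 1) ))        # monitors that may fail while quorum holds
}
```

For example, `tolerated_failures 3` and `tolerated_failures 4` both print 1, while `tolerated_failures 5` prints 2.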

Note:

If you add a node where you do not want to run a Ceph monitor, e.g. another node for OSDs, you still need to install the Ceph packages with 'pveceph install'.

Creating Ceph OSDs

First, be careful when you initialize your OSD disks, because this removes all existing data from those disks. So it is important to select the correct device names. The Proxmox VE GUI displays a list of all disks, together with device names, usage information and serial numbers.

OSDs can be created via the GUI (self-explanatory) or via the CLI, as explained here. An OSD is initialized with:

# pveceph createosd /dev/sd[X]

If you want to use a dedicated SSD journal disk:

# pveceph createosd /dev/sd[X] -journal_dev /dev/sd[Y]

Example: /dev/sdf is the data disk (4 TB) and /dev/sdb is the dedicated SSD journal disk:

# pveceph createosd /dev/sdf -journal_dev /dev/sdb

This partitions the disk (data and journal partition), creates filesystems, starts the OSD and adds it to the existing CRUSH map. Afterwards the OSD is running and fully functional. Please create at least 12 OSDs, distributed among your nodes (4 on each node).

You can create OSDs containing both journal and data partitions, or you can place the journal on a dedicated SSD. Using an SSD journal disk is highly recommended if you expect good performance.
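When creating several OSDs per node, a small loop saves typing. A minimal sketch around the pveceph createosd calls shown above; it assumes several OSDs can share one journal SSD, each getting its own journal partition, and the device names in the usage line are examples only. Setting PVECEPH=echo prints the commands instead of running them.

```shell
#!/bin/sh
# Create one OSD per data disk, optionally with a shared SSD journal device.
# create_osds [-j JOURNAL_DEV] DATA_DEV...
# Set PVECEPH=echo to print the commands instead of running them (dry run).
create_osds() {
    journal=
    if [ "$1" = "-j" ]; then journal=$2; shift 2; fi
    for dev in "$@"; do
        if [ -n "$journal" ]; then
            ${PVECEPH:-pveceph} createosd "$dev" -journal_dev "$journal" || return 1
        else
            ${PVECEPH:-pveceph} createosd "$dev" || return 1
        fi
    done
}
```

For example `create_osds -j /dev/sdb /dev/sdc /dev/sdd /dev/sde /dev/sdf`. Try it with PVECEPH=echo first, since the listed disks are wiped.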

Note:

In order to use a dedicated journal disk (SSD), the disk needs to have a GPT partition table. You can create one with 'gdisk /dev/sd[X]'. If there is no GPT, you cannot select the disk as journal. Currently the journal size is fixed to 5 GB.

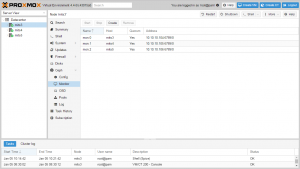

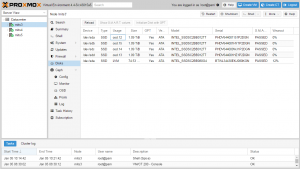

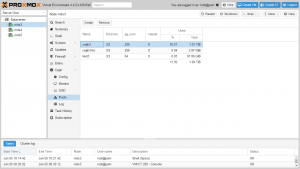

Ceph Pools

The standard installation creates some default pools, so you can either use the standard 'rbd' pool, or create your own pools using the GUI.

To calculate the number of placement groups (PGs) for your pools, you can use the Ceph PGs per Pool Calculator: http://ceph.com/pgcalc/
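For a first estimate, a widely used rule of thumb targets about 100 PGs per OSD: total PGs ≈ (OSDs × 100) / pool size, rounded to a power of two. The sketch below rounds up and assumes a single pool holding all data; the calculator accounts for several pools and should be preferred for real planning.

```shell
#!/bin/sh
# Rule-of-thumb pg_num for one pool: (OSDs * PGs per OSD) / size, rounded up
# to the next power of two. pg_count OSDS POOL_SIZE [PGS_PER_OSD]
pg_count() {
    osds=$1 size=$2 per_osd=${3:-100}
    want=$(( (osds * per_osd + size - 1) / size ))   # ceiling division
    pgs=1
    while [ "$pgs" -lt "$want" ]; do
        pgs=$(( pgs * 2 ))
    done
    echo "$pgs"
}
```

For the 12 OSDs and size 3 recommended in this article, `pg_count 12 3` prints 512, which could then be used as in `ceph osd pool create my-pool 512 512` (my-pool is a hypothetical name).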

pool size notes

The recommended pool setting is size 3 and min_size 2. Lower settings are dangerous: you can lose your pool data.
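To apply the recommended values to an existing pool from the CLI, the standard ceph osd pool set commands can be wrapped as below. A sketch; setting CEPH=echo prints the commands instead of running them.

```shell
#!/bin/sh
# Apply the recommended replication (size 3, min_size 2) to one pool.
# set_pool_replication POOL
set_pool_replication() {
    pool=$1
    ${CEPH:-ceph} osd pool set "$pool" size 3 || return 1
    ${CEPH:-ceph} osd pool set "$pool" min_size 2
}
```

For example `set_pool_replication rbd`; afterwards, `ceph osd pool get rbd size` and `ceph osd pool get rbd min_size` show the active values.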

Ceph Client

You also need to copy the keyring to a predefined location.

Note that the file name must be the storage id followed by .keyring, where the storage id is the expression after 'rbd:' in /etc/pve/storage.cfg (my-ceph-storage in the example below).

    # mkdir /etc/pve/priv/ceph
    # cp /etc/pve/priv/ceph.client.admin.keyring /etc/pve/priv/ceph/my-ceph-storage.keyring
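With several RBD storages (for example one for VMs and one for containers), each needs its own keyring copy named after its storage id. A minimal sketch; PVE_PRIV defaults to /etc/pve/priv and is overridable only so the function can be tried in a scratch directory.

```shell
#!/bin/sh
# Copy the admin keyring to $PVE_PRIV/ceph/<storage id>.keyring for each id.
# copy_keyrings STORAGE_ID...
copy_keyrings() {
    priv=${PVE_PRIV:-/etc/pve/priv}
    mkdir -p "$priv/ceph" || return 1
    for id in "$@"; do
        cp "$priv/ceph.client.admin.keyring" "$priv/ceph/$id.keyring" || return 1
        echo "$priv/ceph/$id.keyring"
    done
}
```

For example `copy_keyrings my-ceph-storage my-ceph-storage-for-lxc` for the two storage ids used on this page.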

You can then configure Proxmox VE to use such pools to store VM images; just use the GUI ("Add Storage": RBD). A typical entry in the Proxmox VE storage configuration looks like this:

    # from /etc/pve/storage.cfg
    rbd: my-ceph-storage
            monhost 10.10.10.1;10.10.10.2;10.10.10.3
            pool rbd
            content images
            username admin
            krbd 0

If you want to store containers on Ceph, you need to create an extra pool and a storage entry for it that uses KRBD:

    # from /etc/pve/storage.cfg
    rbd: my-ceph-storage-for-lxc
            monhost 10.10.10.1;10.10.10.2;10.10.10.3
            pool rbd-lxc
            content rootdir
            username admin
            krbd 1
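Both storage entries can also be created from the CLI with pvesm instead of the GUI. The sketch assumes that pvesm add accepts the storage.cfg keys shown above as options (see the pvesm man page for your version), that the pool already exists, and it hardcodes the tutorial's monitor addresses; container volumes need the rootdir content type. Setting PVESM=echo prints the command instead of running it.

```shell
#!/bin/sh
# Add an RBD storage entry via pvesm.
# add_rbd_storage STORAGE_ID POOL CONTENT KRBD(0|1)
# Set PVESM=echo to print the command instead of running it (dry run).
add_rbd_storage() {
    id=$1 pool=$2 content=$3 krbd=$4
    # the semicolon-separated monitor list must stay quoted for the shell
    ${PVESM:-pvesm} add rbd "$id" \
        --monhost "10.10.10.1;10.10.10.2;10.10.10.3" \
        --pool "$pool" --content "$content" \
        --username admin --krbd "$krbd"
}
```

For the two examples on this page: `add_rbd_storage my-ceph-storage rbd images 0` and `add_rbd_storage my-ceph-storage-for-lxc rbd-lxc rootdir 1`.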

Further reading about Ceph

Ceph comes with plenty of documentation (http://ceph.com/docs/master/). Even better, the dissertation by the creator of Ceph, Sage A. Weil, is also available (http://ceph.com/papers/weil-thesis.pdf). Reading it gives you deep insight into how Ceph works.

- http://ceph.com/

- https://www.redhat.com/en/technologies/storage/ceph

- https://www.sebastien-han.fr/blog/2014/10/10/ceph-how-to-test-if-your-ssd-is-suitable-as-a-journal-device/, Journal SSD Recommendations

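Video Tutorials

- Install Ceph Server on Proxmox VE: https://www.proxmox.com/en/training/video-tutorials/item/install-ceph-server-on-proxmox-ve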

Proxmox YouTube channel

You can subscribe to our Proxmox VE Channel on YouTube (http://www.youtube.com/ProxmoxVE) to get updates about new videos.

Ceph Misc

Set the Ceph OSD tunables

ceph osd crush tunables hammer

Changing the tunables can trigger considerable data movement. If your Ceph cluster is in use, do this when it has the least load, and consider throttling backfill so that the process runs more slowly.

Important: Do not set the tunables to optimal, because krbd will then not work. Set them to hammer.
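One way to slow the process down is to limit concurrent backfills per OSD before switching the profile. A sketch: the value 1 is an illustrative conservative choice, and injectargs only changes the running daemons, so the setting does not survive an OSD restart. Setting CEPH=echo prints the commands instead of running them.

```shell
#!/bin/sh
# Throttle backfill on all OSDs, then switch the CRUSH tunables to hammer.
set_hammer_tunables() {
    # quoted so the shell does not try to expand osd.* as a file glob
    ${CEPH:-ceph} tell 'osd.*' injectargs '--osd-max-backfills 1' || return 1
    ${CEPH:-ceph} osd crush tunables hammer
}
```

Afterwards, `ceph osd crush show-tunables` shows the active profile and `ceph -s` the progress of the data movement.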

Prepare OSD Disks

Note that pveceph createosd refuses to initialize a disk when it detects existing data. So if you want to overwrite a disk, remove the existing data first. You can do that using:

# ceph-disk zap /dev/sd[X]

- In some cases, disks that used to be part of a 3ware RAID need the following in addition to zap. Try this:

    # To remove the partition table and boot sector, the following should be sufficient:
    dd if=/dev/zero of=/dev/$DISK bs=1024 count=1

or

    #!/bin/sh
    # Zero the first 50000 sectors (about 25 MB) of the disk given as argument.
    # Usage (saved e.g. as wipe-disk.sh): sh wipe-disk.sh sdg
    DISK="$1"
    if [ -z "$DISK" ]; then
        echo "Need to supply a device name like sdg. Exiting."
        exit 1
    fi
    echo "Make sure this is the correct disk: /dev/$DISK"
    echo "You will end up with NO partition table when this proceeds, for example:"
    echo "  Disk /dev/$DISK doesn't contain a valid partition table"
    echo "Press Enter to proceed, or Ctrl-C to exit."
    read -r answer
    dd if=/dev/zero of="/dev/$DISK" bs=512 count=50000

Upgrading existing Ceph Server from Hammer to Jewel

See Ceph Hammer to Jewel

Upgrading existing Ceph Server from Jewel to Luminous

See Ceph Jewel to Luminous

osd hardware

It is best to use the same drive model (one of those suggested above) for all OSDs.

make one change at a time

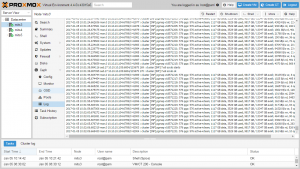

After Ceph is set up and running, make only one change at a time.

Whether adding an OSD or changing pool settings, check the log and make sure health is normal before the next change. Too many changes at the same time can result in a slow system, which also makes working on the CLI painful.

To check that a change has completed, check the logs at PVE > Ceph > Log,

or from the CLI:

ceph -w

or

ceph -s
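Waiting for a healthy cluster between changes can be scripted by polling ceph health. A minimal sketch; the 60 tries at 10-second intervals are arbitrary defaults, and CEPH can point at a stub command to try the function without a cluster.

```shell
#!/bin/sh
# Poll "ceph health" until it reports HEALTH_OK.
# wait_for_health_ok [MAX_TRIES] [INTERVAL_SECONDS]
wait_for_health_ok() {
    tries=${1:-60} interval=${2:-10}
    while [ "$tries" -gt 0 ]; do
        case "$(${CEPH:-ceph} health)" in
            HEALTH_OK*) echo "HEALTH_OK"; return 0 ;;
        esac
        tries=$(( tries - 1 ))
        sleep "$interval"
    done
    echo "cluster did not reach HEALTH_OK" >&2
    return 1
}
```

For example: `wait_for_health_ok && echo "ready for the next change"`.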

using a disk that was part of a zfs pool

As of now, the following is needed:

ceph-disk zap /dev/sd[X]

Otherwise the disk does not show up in PVE > Ceph > OSD > Create OSD.

restore lxc from zfs to ceph

If the LXC container is on ZFS with compression, the actual disk usage can be far greater than expected. See https://forum.proxmox.com/threads/lxc-restore-fail-to-ceph.32419/#post-161287

One way to find out the actual disk usage:

- Restore the backup to an ext4 directory and run du -sh, then do the restore manually, specifying the target disk size.
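The size estimate from the step above can be sketched as follows. It assumes the backup has already been unpacked into a directory on ext4; the 20% headroom is an arbitrary example value, and the result (GiB, rounded up) is meant as the target disk size for the manual restore.

```shell
#!/bin/sh
# Estimate a container disk size in GiB (rounded up) from an unpacked backup.
# estimate_rootfs_gib DIR [HEADROOM_PERCENT]
estimate_rootfs_gib() {
    dir=$1 headroom=${2:-20}
    kib=$(du -sk "$dir" | cut -f1)               # space used on ext4, in KiB
    kib=$(( kib * (100 + headroom) / 100 ))
    gib=$(( (kib + 1048575) / 1048576 ))         # 1 GiB = 1048576 KiB, round up
    [ "$gib" -lt 1 ] && gib=1
    echo "$gib"
}
```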

scsi setting

Make sure that you use the virtio-scsi controller (not LSI); see VM options. I remember some panics when using LSI recently, but I did not debug it further, as modern OSes should use virtio-scsi anyway. https://forum.proxmox.com/threads/restarted-a-node-some-kvms-on-other-nodes-panic.32806
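To find VMs that still use another controller, the scsihw line of each VM configuration can be checked. A sketch, assuming that a configuration without a scsihw line uses the default LSI controller; QM can point at a stub to try it without Proxmox VE.

```shell
#!/bin/sh
# Print "VMID CONTROLLER" for each given VM not using a virtio-scsi controller.
# vms_without_virtio_scsi VMID...
vms_without_virtio_scsi() {
    for vmid in "$@"; do
        hw=$(${QM:-qm} config "$vmid" | awk -F': ' '$1 == "scsihw" { print $2 }')
        case "${hw:-lsi}" in
            virtio-scsi*) ;;
            *) echo "$vmid ${hw:-lsi}" ;;
        esac
    done
}
```

A listed VM can then be switched while it is shut down, e.g. `qm set 101 --scsihw virtio-scsi-pci` (101 is an example VM ID), provided the guest OS has virtio-scsi drivers.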

kvm hard disk cache

Use write-through (from the forum, 2/2017).

noout

"Periodically, you may need to perform maintenance on a subset of your cluster, or resolve a problem that affects a failure domain (e.g., a rack). If you do not want CRUSH to automatically rebalance the cluster as you stop OSDs for maintenance, set the cluster to noout first:"

ceph osd set noout

This can also be done from PVE at Ceph > OSD. Very important.
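For scripted maintenance, the flag can be set and cleared around the actual work, so it is not forgotten afterwards. A sketch: it clears noout even if the maintenance command fails and returns that command's exit status. Setting CEPH=echo prints the ceph commands instead of running them.

```shell
#!/bin/sh
# Run a maintenance command with the noout flag set, then clear the flag.
# with_noout COMMAND [ARGS...]
with_noout() {
    ${CEPH:-ceph} osd set noout || return 1
    "$@"
    rc=$?
    ${CEPH:-ceph} osd unset noout
    return "$rc"
}
```

For example `with_noout systemctl restart ceph-osd@3`, where 3 stands for the ID of an OSD on this node.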

Disabling Cephx

Research this first: check the forum, the Ceph mailing list and http://docs.ceph.com/docs/master/rados/configuration/auth-config-ref/: "The cephx protocol is enabled by default. Cryptographic authentication has some computational costs, though they should generally be quite low. If the network environment connecting your client and server hosts is very safe and you cannot afford authentication, you can turn it off. This is not generally recommended."

Our Ceph network is isolated and I am looking to speed up Ceph performance, so I did this:

1- Turn off all VMs that use Ceph.

2- In /etc/pve/ceph.conf, set:

    auth cluster required = none
    auth service required = none
    auth client required = none

3- Stop the Ceph daemons as described in http://docs.ceph.com/docs/master/rados/operations/operating/: "To stop all daemons on a Ceph Node (irrespective of type), execute the following:"

Do this on all mon nodes:

systemctl stop ceph\*.service ceph\*.target

4- You also need to remove the client keys in /etc/pve/priv/ceph/:

    cd /etc/pve/priv
    # Do NOT move the admin keyring (keep the next line commented out): it would be
    # recreated by /usr/share/perl5/PVE/API2/Ceph.pm and using the old keys later would not work.
    #-#mv ceph.client.admin.keyring ceph.client.admin.keyring-old
    mkdir /etc/pve/priv/ceph/old
    mv /etc/pve/priv/ceph/*.keyring /etc/pve/priv/ceph/old/

5- Start Ceph: "To start all daemons on a Ceph Node (irrespective of type), execute the following:"

On all mon nodes:

systemctl start ceph.target

6- Start the VMs.