Difference between revisions of "Ceph Server"

m (→Creating Ceph OSDs: cosmetic) |

(make CLI/GUI option a bit clearer) |

||

| Line 1: | Line 1: | ||

| − | =Introduction= | + | = Introduction = |

| − | |||

| − | |||

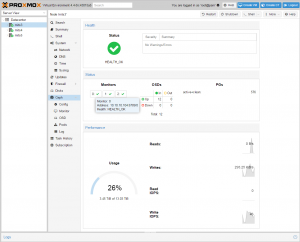

| − | + | [[Image:Screen-Ceph-Status.png|thumb]] Ceph is a distributed object store and file system designed to provide excellent performance, reliability and scalability - See more at: http://ceph.com. | |

| − | + | Proxmox VE supports Ceph’s RADOS Block Device to be used for VM disks. The Ceph storage services are usually hosted on external, dedicated storage nodes. Such storage clusters can sum up to several hundreds of nodes, providing petabytes of storage capacity. | |

| − | + | For smaller deployments, it is also possible to run Ceph services directly on your Proxmox VE nodes. Recent hardware has plenty of CPU power and RAM, so running storage services and VMs on same node is possible. | |

| − | + | This articles describes how to setup and run Ceph storage services directly on Proxmox VE nodes. | |

| − | + | '''Note:''' | |

| − | To try the beta, just add the following line to your /etc/apt/sources.list: | + | Ceph Storage integration (CLI and GUI) will be introduced in Proxmox VE 3.2 as technology preview and is already available as beta in the pvetest repository. |

| + | |||

| + | To try the beta, just add the following line to your /etc/apt/sources.list: | ||

## PVE test repository provided by proxmox.com, only use it for testing | ## PVE test repository provided by proxmox.com, only use it for testing | ||

deb http://download.proxmox.com/ debian wheezy pvetest | deb http://download.proxmox.com/ debian wheezy pvetest | ||

| − | = Advantages = | + | = Advantages = |

| − | *Easy setup and management with CLI and GUI support on Proxmox VE | + | *Easy setup and management with CLI and GUI support on Proxmox VE |

| − | *Thin provisioning | + | *Thin provisioning |

*Snapshots support | *Snapshots support | ||

| − | *Self healing | + | *Self healing |

| − | *No single point of failure | + | *No single point of failure |

| − | *Scalable to the exabyte level | + | *Scalable to the exabyte level |

| − | *Setup pools with different performance and redundancy characteristics | + | *Setup pools with different performance and redundancy characteristics |

| − | *Data is replicated, making it fault tolerant | + | *Data is replicated, making it fault tolerant |

| − | *Runs on economical commodity hardware | + | *Runs on economical commodity hardware |

| − | *No need for hardware RAID controllers | + | *No need for hardware RAID controllers |

| − | *Easy management | + | *Easy management |

*Open source | *Open source | ||

= Recommended hardware = | = Recommended hardware = | ||

| − | You need at least three identical servers for the redundant setup. Here is the specifications of one of our test lab clusters with Proxmox VE and Ceph (three nodes): | + | You need at least three identical servers for the redundant setup. Here is the specifications of one of our test lab clusters with Proxmox VE and Ceph (three nodes): |

| − | * Dual Xeon E5-2620v2, 32 GB RAM, Intel S2600CP mainboard, Intel RMM, Chenbro 2U chassis with eight 3.5” hot-swap drive bays, 2 fixed 2.5" SSD bays | + | *Dual Xeon E5-2620v2, 32 GB RAM, Intel S2600CP mainboard, Intel RMM, Chenbro 2U chassis with eight 3.5” hot-swap drive bays, 2 fixed 2.5" SSD bays |

| − | * 10 GBit network for Ceph traffic (one Intel X540-T2 in each server, one 10Gb switch - Netgear XS712T) | + | *10 GBit network for Ceph traffic (one Intel X540-T2 in each server, one 10Gb switch - Netgear XS712T) |

| − | * Single enterprise class SSD for the Proxmox VE installation (because we run Ceph monitors there and quite a lot of logs), we use one Intel DC S3500 80 GB per host. | + | *Single enterprise class SSD for the Proxmox VE installation (because we run Ceph monitors there and quite a lot of logs), we use one Intel DC S3500 80 GB per host. |

| − | * Single, fast and reliable enterprise class SSD for Ceph Journal. For this test lab cluster, we used Samsung SSD 840 PRO with 240 GB | + | *Single, fast and reliable enterprise class SSD for Ceph Journal. For this test lab cluster, we used Samsung SSD 840 PRO with 240 GB |

| − | * SATA disk for storing the data (OSDs), use at least 4 disks/OSDs per server, more OSD disks are faster. We use four Seagate Constellation ES.3 SATA 6Gb (4TB model) per server. | + | *SATA disk for storing the data (OSDs), use at least 4 disks/OSDs per server, more OSD disks are faster. We use four Seagate Constellation ES.3 SATA 6Gb (4TB model) per server. |

| − | This setup delivers 48 TB storage. By using the redundancy of 3, you can store up to 16 TB (100%). But to be prepared for failed disks and hosts, you should never fill up your storage with 100 %. | + | This setup delivers 48 TB storage. By using the redundancy of 3, you can store up to 16 TB (100%). But to be prepared for failed disks and hosts, you should never fill up your storage with 100 %. |

| − | By adding more disks, the storage can be expanded up to 96 TB just by plugging in additional disks into the free drive bays. Of course, you can add more servers too as soon as your business is growing. | + | By adding more disks, the storage can be expanded up to 96 TB just by plugging in additional disks into the free drive bays. Of course, you can add more servers too as soon as your business is growing. |

| − | If you do not want to run virtual machines and Ceph on the same host, you can just add more Proxmox VE nodes and use these for running the guests and the others just for the storage. | + | If you do not want to run virtual machines and Ceph on the same host, you can just add more Proxmox VE nodes and use these for running the guests and the others just for the storage. |

| − | = Installation of Proxmox VE = | + | = Installation of Proxmox VE = |

| − | Before you start with Ceph, you need a working Proxmox VE cluster with 3 nodes (or more). We install Proxmox VE on a fast and reliable enterprise class SSD, so we can use all bays for OSD data. Just follow the well known instructions on [[Installation]] and [[Proxmox VE 2.0 Cluster]]. | + | Before you start with Ceph, you need a working Proxmox VE cluster with 3 nodes (or more). We install Proxmox VE on a fast and reliable enterprise class SSD, so we can use all bays for OSD data. Just follow the well known instructions on [[Installation]] and [[Proxmox VE 2.0 Cluster]]. |

| − | '''Note:''' | + | '''Note:''' |

| − | Use ext4 if you install on SSD (at the boot prompt of the installation ISO you can specify parameters, e.g. "linux ext4 swapsize=4"). | + | Use ext4 if you install on SSD (at the boot prompt of the installation ISO you can specify parameters, e.g. "linux ext4 swapsize=4"). |

| − | = Network for Ceph = | + | = Network for Ceph = |

| − | All nodes need access to a separate 10Gb network interface, exclusively used for Ceph. We use network 10.10.10.0/24 for this tutorial. | + | All nodes need access to a separate 10Gb network interface, exclusively used for Ceph. We use network 10.10.10.0/24 for this tutorial. |

| − | It is highly recommended to use 10Gb for that network to avoid performance problems. Bonding can be used to increase availability. | + | It is highly recommended to use 10Gb for that network to avoid performance problems. Bonding can be used to increase availability. |

| + | |||

| + | == First node == | ||

| − | |||

The network setup (ceph private network) from our first node contains: | The network setup (ceph private network) from our first node contains: | ||

| Line 69: | Line 70: | ||

auto eth2 | auto eth2 | ||

iface eth2 inet static | iface eth2 inet static | ||

| − | + | address 10.10.10.1 | |

| − | + | netmask 255.255.255.0 | |

| + | |||

| + | == Second node == | ||

| − | |||

The network setup (ceph private network) from our second node contains: | The network setup (ceph private network) from our second node contains: | ||

| Line 78: | Line 80: | ||

auto eth2 | auto eth2 | ||

iface eth2 inet static | iface eth2 inet static | ||

| − | + | address 10.10.10.2 | |

| − | + | netmask 255.255.255.0 | |

| + | |||

| + | == Third node == | ||

| − | |||

The network setup (ceph private network) from our third node contains: | The network setup (ceph private network) from our third node contains: | ||

| Line 87: | Line 90: | ||

auto eth2 | auto eth2 | ||

iface eth2 inet static | iface eth2 inet static | ||

| − | + | address 10.10.10.3 | |

| − | + | netmask 255.255.255.0 | |

| − | = Installation of Ceph packages = | + | = Installation of Ceph packages = |

You now need to select 3 nodes and install the Ceph software packages there. We wrote a small command line utility called 'pveceph' which helps you performing this tasks. Login to all your nodes and execute the following on all: | You now need to select 3 nodes and install the Ceph software packages there. We wrote a small command line utility called 'pveceph' which helps you performing this tasks. Login to all your nodes and execute the following on all: | ||

| Line 100: | Line 103: | ||

node3# pveceph install | node3# pveceph install | ||

| − | This sets up an 'apt' package repository in /etc/apt/sources.list.d/ceph.list and installs the required software. | + | This sets up an 'apt' package repository in /etc/apt/sources.list.d/ceph.list and installs the required software. |

| − | = Create initial Ceph configuration = | + | = Create initial Ceph configuration = |

| − | [[Image:Screen-Ceph-Config.png|thumb]] | + | |

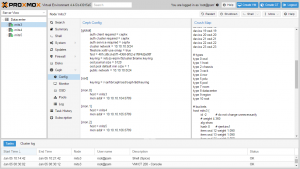

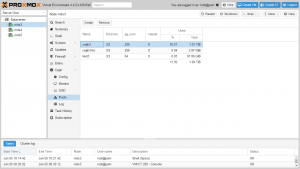

| − | After installation of packages, you need to create an initial Ceph configuration on just one node, based on your private network: | + | [[Image:Screen-Ceph-Config.png|thumb]] After installation of packages, you need to create an initial Ceph configuration on just one node, based on your private network: |

node1# pveceph init --network 10.10.10.0/24 | node1# pveceph init --network 10.10.10.0/24 | ||

| − | This creates an initial config at /etc/pve/ceph.conf. That file is automatically distributed to all Proxmox VE nodes by using | + | This creates an initial config at /etc/pve/ceph.conf. That file is automatically distributed to all Proxmox VE nodes by using [http://pve.proxmox.com/wiki/Proxmox_Cluster_file_system_%28pmxcfs%29 pmxcfs]. The command also creates a symbolic link from /etc/ceph/ceph.conf pointing to that file. So you can simply run Ceph commands without the need to specify a configuration file. |

| + | |||

| + | = Creating Ceph Monitors = | ||

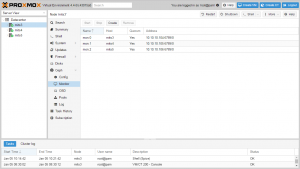

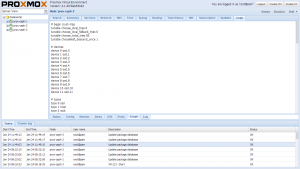

| − | + | [[Image:Screen-Ceph-Monitor.png|thumb]] After that you can create the first Ceph monitor service using: | |

| − | [[Image:Screen-Ceph-Monitor.png|thumb]] | ||

| − | After that you can create the first Ceph monitor service using: | ||

node1# pveceph createmon | node1# pveceph createmon | ||

| − | + | = Continue with CLI or GUI = | |

| + | |||

| + | As soon as you have created the first monitor, you can start using the Proxmox GUI (see the video tutorial on [http://youtu.be/ImyRUyMBrwo Managing Ceph Server]) to manage and view your Ceph configuration. | ||

| + | |||

| + | Of course, you can continue to use the command line tools (CLI). We continue with the CLI in this wiki article, but you should achieve the same results no matter which way you finish the remaining steps. | ||

| + | |||

| + | = Creating more Ceph Monitors = | ||

You should run 3 monitors, one on each node. Create them via GUI or via CLI. So please login to the next node and run: | You should run 3 monitors, one on each node. Create them via GUI or via CLI. So please login to the next node and run: | ||

| Line 122: | Line 131: | ||

node2# pveceph createmon | node2# pveceph createmon | ||

| − | And execute the same steps on the third node: | + | And execute the same steps on the third node: |

node3# pveceph createmon | node3# pveceph createmon | ||

| − | '''Note:''' | + | '''Note:''' |

| + | |||

| + | If you add a node where you do not want to run a Ceph monitor, e.g. another node for OSDs, you need to install the Ceph packages with 'pveceph install' and you need to initialize Ceph with 'pveceph init' | ||

| − | + | = Creating Ceph OSDs = | |

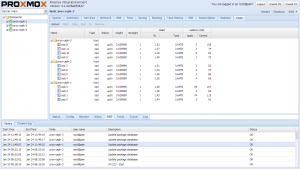

| − | + | [[Image:Screen-Ceph-Disks.png|thumb]] [[Image:Screen-Ceph-OSD.png|thumb]] First, please be careful when you initialize your OSD disks, because it basically removes all existing data from those disks. So it is important to select the correct device names. The Proxmox VE Ceph GUI displays a list of all disk, together with device names, usage information and serial numbers. | |

| − | [[Image:Screen-Ceph-Disks.png|thumb]] [[Image:Screen-Ceph-OSD.png|thumb]] | ||

| − | First, please be careful when you initialize your OSD disks, because it basically removes all existing data from those disks. So it is important to select the correct device names. The Proxmox VE Ceph GUI displays a list of all disk, together with device names, usage information and serial numbers. | ||

| − | Creating OSDs can be done via GUI - self explaining - or via CLI, explained here: | + | Creating OSDs can be done via GUI - self explaining - or via CLI, explained here: |

| − | Having that said, initializing an OSD can be done with: | + | Having that said, initializing an OSD can be done with: |

# pveceph createosd /dev/sd[X] | # pveceph createosd /dev/sd[X] | ||

| − | If you want to use a dedicated SSD journal disk: | + | If you want to use a dedicated SSD journal disk: |

# pveceph createosd /dev/sd[X] -journal_dev dev/sd[X] | # pveceph createosd /dev/sd[X] -journal_dev dev/sd[X] | ||

| Line 148: | Line 157: | ||

# pveceph createosd /dev/sdf -journal_dev /dev/sdb | # pveceph createosd /dev/sdf -journal_dev /dev/sdb | ||

| − | This partitions the disk (data and journal partition), creates filesystems, starts the OSD and add it to the existing crush map. So afterward the OSD is running and fully functional. Please create at least 12 OSDs, distributed among your nodes (4 on each node). | + | This partitions the disk (data and journal partition), creates filesystems, starts the OSD and add it to the existing crush map. So afterward the OSD is running and fully functional. Please create at least 12 OSDs, distributed among your nodes (4 on each node). |

| − | It should be noted that this command refuses to initialize disk when it detects existing data. So if you want to overwrite a disk you should remove existing data first. You can do that using: | + | It should be noted that this command refuses to initialize disk when it detects existing data. So if you want to overwrite a disk you should remove existing data first. You can do that using: |

# ceph-disk zap /dev/sd[X] | # ceph-disk zap /dev/sd[X] | ||

| − | You can create OSDs containing both journal and data partitions or you can place the journal on a dedicated SSD. Using a SSD journal disk is highly recommended if you expect good performance. | + | You can create OSDs containing both journal and data partitions or you can place the journal on a dedicated SSD. Using a SSD journal disk is highly recommended if you expect good performance. |

| − | '''Note:''' | + | '''Note:''' |

| − | In order to use a dedicated journal disk (SSD), the disk needs to have a GPT partition table. You can create this with 'gdisk /dev/sd(x)'. If there is no GPT, you cannot select the disk as journal. Currently the journal size is fixed to 5 GB. | + | In order to use a dedicated journal disk (SSD), the disk needs to have a GPT partition table. You can create this with 'gdisk /dev/sd(x)'. If there is no GPT, you cannot select the disk as journal. Currently the journal size is fixed to 5 GB. |

| − | = Ceph Pools = | + | = Ceph Pools = |

| − | |||

| − | |||

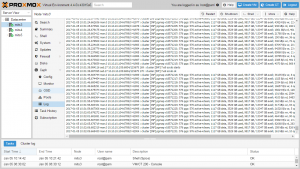

| − | You can then configure Proxmox VE to use such pools to store VM images, just use the GUI ("Add Storage": RBD). A typical entry in the Proxmox VE storage configuration looks like: | + | [[Image:Screen-Ceph-Pools.png|thumb]] [[Image:Screen-Ceph-Crush.png|thumb]] [[Image:Screen-Ceph-Log.png|thumb]] The standard installation creates some default pools, so you can either use the standard 'rbd' pool, or create your own pools using the GUI. |

| + | |||

| + | You can then configure Proxmox VE to use such pools to store VM images, just use the GUI ("Add Storage": RBD). A typical entry in the Proxmox VE storage configuration looks like: | ||

# from /etc/pve/storage.cfg | # from /etc/pve/storage.cfg | ||

rbd: my-ceph-pool | rbd: my-ceph-pool | ||

| − | + | monhost 10.10.10.1 10.10.10.2 10.10.10.3 | |

| − | + | pool rbd | |

| − | + | content images | |

| − | + | username admin | |

| − | You also need to copy the keyring to a predefined location | + | You also need to copy the keyring to a predefined location |

# cd /etc/pve/priv/ | # cd /etc/pve/priv/ | ||

| Line 179: | Line 188: | ||

# cp ceph.client.admin.keyring ceph/my-ceph-pool.keyring | # cp ceph.client.admin.keyring ceph/my-ceph-pool.keyring | ||

| − | =Why do we need a new command line tool (pveceph)?= | + | = Why do we need a new command line tool (pveceph)? = |

| − | Proxmox VE provides a distributed file system ([http://pve.proxmox.com/wiki/Proxmox_Cluster_file_system_%28pmxcfs%29 pmxcfs]) to store configuration files. | + | Proxmox VE provides a distributed file system ([http://pve.proxmox.com/wiki/Proxmox_Cluster_file_system_%28pmxcfs%29 pmxcfs]) to store configuration files. |

We use this to store the Ceph configuration. The advantage is that all nodes see the same file, and there is no need to copy configuration data around using ssh/scp. The tool can also use additional information from your Proxmox VE setup. | We use this to store the Ceph configuration. The advantage is that all nodes see the same file, and there is no need to copy configuration data around using ssh/scp. The tool can also use additional information from your Proxmox VE setup. | ||

| − | Tools like ceph-deploy cannot take advantage of that architecture. | + | Tools like ceph-deploy cannot take advantage of that architecture. |

| + | |||

| + | = Video Tutorials = | ||

| − | |||

*[http://youtu.be/ImyRUyMBrwo Managing Ceph Server] | *[http://youtu.be/ImyRUyMBrwo Managing Ceph Server] | ||

| − | =Further readings about Ceph= | + | = Further readings about Ceph = |

| − | Ceph comes with plenty of documentation [http://ceph.com/docs/master/ here]. Even better, the dissertation from the creator of Ceph - Sage A. Weil - is also [http://ceph.com/papers/weil-thesis.pdf available]. By reading this you can get a deep insight how it works. | + | Ceph comes with plenty of documentation [http://ceph.com/docs/master/ here]. Even better, the dissertation from the creator of Ceph - Sage A. Weil - is also [http://ceph.com/papers/weil-thesis.pdf available]. By reading this you can get a deep insight how it works. |

| − | *http://ceph.com/ | + | *http://ceph.com/ |

*http://www.inktank.com/, Commercial support services for Ceph | *http://www.inktank.com/, Commercial support services for Ceph | ||

| − | [[Category: HOWTO]] [[Category:Installation]] | + | [[Category:HOWTO]] [[Category:Installation]] |

Revision as of 18:38, 8 February 2014

Introduction

Ceph is a distributed object store and file system designed to provide excellent performance, reliability and scalability. See http://ceph.com for more information.

Proxmox VE supports Ceph’s RADOS Block Device (RBD) for VM disks. The Ceph storage services are usually hosted on external, dedicated storage nodes. Such storage clusters can scale to several hundred nodes, providing petabytes of storage capacity.

For smaller deployments, it is also possible to run Ceph services directly on your Proxmox VE nodes. Recent hardware has plenty of CPU power and RAM, so running storage services and VMs on the same node is possible.

This article describes how to set up and run Ceph storage services directly on Proxmox VE nodes.

Note:

Ceph storage integration (CLI and GUI) will be introduced in Proxmox VE 3.2 as a technology preview and is already available as a beta in the pvetest repository.

To try the beta, just add the following line to your /etc/apt/sources.list:

## PVE test repository provided by proxmox.com, only use it for testing
deb http://download.proxmox.com/debian wheezy pvetest
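The repository entry can also be added from a script. The following is only a sketch: `add_pvetest_repo` is a hypothetical helper name, and it assumes the repository URL is written without a space after the host name (`http://download.proxmox.com/debian`). It appends the entry only if it is not already present, so it is safe to run more than once.

```shell
# Hypothetical helper: append the pvetest entry to an APT sources file once.
# The file defaults to /etc/apt/sources.list, as used in this article.
add_pvetest_repo() {
    sources_file="${1:-/etc/apt/sources.list}"
    repo_line="deb http://download.proxmox.com/debian wheezy pvetest"
    # -x matches the whole line, -F treats the pattern as a fixed string
    if ! grep -qxF "$repo_line" "$sources_file" 2>/dev/null; then
        printf '%s\n' \
            "## PVE test repository provided by proxmox.com, only use it for testing" \
            "$repo_line" >> "$sources_file"
    fi
}

# Usage (as root), followed by a refresh of the package index:
#   add_pvetest_repo && apt-get update
```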

Advantages

- Easy setup and management with CLI and GUI support on Proxmox VE

- Thin provisioning

- Snapshots support

- Self healing

- No single point of failure

- Scalable to the exabyte level

- Set up pools with different performance and redundancy characteristics

- Data is replicated, making it fault tolerant

- Runs on economical commodity hardware

- No need for hardware RAID controllers

- Easy management

- Open source

Recommended hardware

You need at least three identical servers for a redundant setup. Here are the specifications of one of our test lab clusters with Proxmox VE and Ceph (three nodes):

- Dual Xeon E5-2620v2, 32 GB RAM, Intel S2600CP mainboard, Intel RMM, Chenbro 2U chassis with eight 3.5" hot-swap drive bays, 2 fixed 2.5" SSD bays

- 10 Gbit network for Ceph traffic (one Intel X540-T2 in each server, one 10Gb switch - Netgear XS712T)

- Single enterprise class SSD for the Proxmox VE installation (because we run the Ceph monitors there, and they write quite a lot of logs). We use one Intel DC S3500 80 GB per host.

- Single, fast and reliable enterprise class SSD for the Ceph journal. For this test lab cluster, we used a Samsung SSD 840 PRO with 240 GB.

- SATA disks for storing the data (OSDs). Use at least 4 disks/OSDs per server; more OSD disks are faster. We use four Seagate Constellation ES.3 SATA 6Gb (4TB model) per server.

This setup delivers 48 TB of raw storage. With a redundancy of 3, you can store up to 16 TB (100%). To be prepared for failed disks and hosts, you should never fill your storage to 100%.

The storage can be expanded up to 96 TB just by plugging additional disks into the free drive bays. Of course, you can also add more servers as your business grows.

If you do not want to run virtual machines and Ceph on the same host, you can add more Proxmox VE nodes and use these for running the guests and the others just for storage.
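The capacity figures above follow from simple arithmetic: raw capacity is nodes × disks per node × disk size, and the storable amount is the raw capacity divided by the number of replicas. A small sketch (the function name `ceph_capacity_tb` is made up for this example):

```shell
# Print "RAW USABLE" capacity in TB.
# Arguments: number of nodes, disks per node, disk size in TB, replica count.
ceph_capacity_tb() {
    nodes=$1; disks=$2; disk_tb=$3; replicas=$4
    raw=$((nodes * disks * disk_tb))
    usable=$((raw / replicas))
    echo "$raw $usable"
}

ceph_capacity_tb 3 4 4 3   # test lab as described: prints "48 16"
ceph_capacity_tb 3 8 4 3   # all eight bays populated: prints "96 32"
```

The second value is the theoretical maximum; in practice you should stay well below it so that the cluster can re-replicate data after a disk or host failure.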

Installation of Proxmox VE

Before you start with Ceph, you need a working Proxmox VE cluster with 3 nodes (or more). We install Proxmox VE on a fast and reliable enterprise class SSD, so we can use all bays for OSD data. Just follow the well-known instructions on Installation and Proxmox VE 2.0 Cluster.

Note:

Use ext4 if you install on SSD (at the boot prompt of the installation ISO you can specify parameters, e.g. "linux ext4 swapsize=4").

Network for Ceph

All nodes need access to a separate 10Gb network interface, exclusively used for Ceph. We use network 10.10.10.0/24 for this tutorial.

It is highly recommended to use 10Gb for that network to avoid performance problems. Bonding can be used to increase availability.

First node

The network setup (ceph private network) from our first node contains:

# from /etc/network/interfaces
auto eth2
iface eth2 inet static
        address 10.10.10.1
        netmask 255.255.255.0

Second node

The network setup (ceph private network) from our second node contains:

# from /etc/network/interfaces
auto eth2
iface eth2 inet static
        address 10.10.10.2
        netmask 255.255.255.0

Third node

The network setup (ceph private network) from our third node contains:

# from /etc/network/interfaces
auto eth2
iface eth2 inet static
        address 10.10.10.3
        netmask 255.255.255.0
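The three stanzas differ only in the last octet of the address. As a sketch, a small generator can print the stanza for any node; `ceph_iface_stanza` is a hypothetical name, and the interface `eth2` and the 10.10.10.0/24 network are simply the values used in this tutorial.

```shell
# Print the Ceph private-network stanza for node number $1.
# The interface name defaults to eth2, as used in this tutorial.
ceph_iface_stanza() {
    node_index=$1
    iface="${2:-eth2}"
    cat <<EOF
auto $iface
iface $iface inet static
        address 10.10.10.$node_index
        netmask 255.255.255.0
EOF
}

# Show the stanzas for all three nodes
for i in 1 2 3; do
    ceph_iface_stanza "$i"
    echo
done
```

The output is meant to be reviewed and pasted into /etc/network/interfaces on the matching node, not appended blindly.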

Installation of Ceph packages

You now need to select 3 nodes and install the Ceph software packages there. We wrote a small command line utility called 'pveceph' which helps you perform these tasks. Log in to all your nodes and execute the following on each:

node1# pveceph install

node2# pveceph install

node3# pveceph install

This sets up an 'apt' package repository in /etc/apt/sources.list.d/ceph.list and installs the required software.
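Instead of logging in to each node by hand, the same command can be run over SSH from one machine. This is a sketch that assumes root SSH access to the nodes works and that the host names node1, node2 and node3 resolve; `install_ceph_on` is a hypothetical helper, and `DRY_RUN` is a variable introduced here only to preview the commands.

```shell
# Run 'pveceph install' on every node given as an argument.
# With DRY_RUN set to a non-empty value, the commands are printed, not executed.
install_ceph_on() {
    for node in "$@"; do
        # ${DRY_RUN:+echo} expands to "echo" only when DRY_RUN is non-empty
        ${DRY_RUN:+echo} ssh "root@$node" pveceph install || return 1
    done
}

# Preview first, then run for real:
#   DRY_RUN=1 install_ceph_on node1 node2 node3
#   install_ceph_on node1 node2 node3
```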

Create initial Ceph configuration

After installation of packages, you need to create an initial Ceph configuration on just one node, based on your private network:

node1# pveceph init --network 10.10.10.0/24

This creates an initial config at /etc/pve/ceph.conf. That file is automatically distributed to all Proxmox VE nodes by using pmxcfs. The command also creates a symbolic link from /etc/ceph/ceph.conf pointing to that file. So you can simply run Ceph commands without the need to specify a configuration file.
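To confirm that the symbolic link is in place on a node, you can compare the resolved paths. A minimal sketch (the function name `check_ceph_conf_link` is an assumption of this example; the two default paths are the ones named above):

```shell
# Succeed if $1 is a symbolic link that resolves to the same file as $2.
check_ceph_conf_link() {
    link="${1:-/etc/ceph/ceph.conf}"
    target="${2:-/etc/pve/ceph.conf}"
    [ -L "$link" ] && [ "$(readlink -f "$link")" = "$(readlink -f "$target")" ]
}

# Usage on a node:
#   check_ceph_conf_link && echo "ceph.conf link is OK"
```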

Creating Ceph Monitors

After that you can create the first Ceph monitor service using:

node1# pveceph createmon

Continue with CLI or GUI

As soon as you have created the first monitor, you can start using the Proxmox VE GUI (see the video tutorial Managing Ceph Server, http://youtu.be/ImyRUyMBrwo) to manage and view your Ceph configuration.

Of course, you can continue to use the command line tools (CLI). We continue with the CLI in this wiki article, but you should achieve the same results no matter which way you finish the remaining steps.

Creating more Ceph Monitors

You should run 3 monitors, one on each node. Create them via the GUI or the CLI. To use the CLI, log in to the next node and run:

node2# pveceph createmon

And execute the same steps on the third node:

node3# pveceph createmon
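The reason for three monitors is that Ceph monitors need a strict majority to form a quorum. The arithmetic can be sketched as follows (`mon_failures_tolerated` is a name made up for this example):

```shell
# Print how many monitors may fail while a majority quorum is still possible.
mon_failures_tolerated() {
    mons=$1
    quorum=$((mons / 2 + 1))   # smallest strict majority
    echo $((mons - quorum))
}

mon_failures_tolerated 1   # prints 0
mon_failures_tolerated 2   # prints 0
mon_failures_tolerated 3   # prints 1

# On a running cluster, 'ceph mon stat' lists the monitors and the current quorum.
```

With three monitors the cluster survives the loss of one; a second monitor alone adds no fault tolerance over a single one.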

Note:

If you add a node where you do not want to run a Ceph monitor, e.g. another node for OSDs, you need to install the Ceph packages with 'pveceph install' and initialize Ceph with 'pveceph init'.

Creating Ceph OSDs

First, be careful when you initialize your OSD disks, because initialization removes all existing data from those disks. It is therefore important to select the correct device names. The Proxmox VE Ceph GUI displays a list of all disks, together with device names, usage information and serial numbers.
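On the command line, a comparable overview is available through the persistent names under /dev/disk/by-id, which usually contain the disk model and serial number. The following sketch (with the hypothetical helper name `list_disk_ids`) prints each such name next to the device it points to:

```shell
# Print "persistent-name -> device" for every symlink in the by-id directory.
list_disk_ids() {
    by_id_dir="${1:-/dev/disk/by-id}"
    for link in "$by_id_dir"/*; do
        [ -L "$link" ] || continue   # skip anything that is not a symlink
        printf '%s -> %s\n' "$(basename "$link")" "$(readlink -f "$link")"
    done
}

# Usage:
#   list_disk_ids
```

Comparing the serial number in the name with the label on the physical disk is a cheap way to avoid initializing the wrong device.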

Creating OSDs can be done via the GUI, which is self-explanatory, or via the CLI, as explained here.

An OSD can be initialized with:

# pveceph createosd /dev/sd[X]

If you want to use a dedicated SSD journal disk:

# pveceph createosd /dev/sd[X] -journal_dev /dev/sd[Y]

Example: /dev/sdf is the data disk (4TB) and /dev/sdb is the dedicated SSD journal disk:

# pveceph createosd /dev/sdf -journal_dev /dev/sdb

This partitions the disk (data and journal partition), creates filesystems, starts the OSD and adds it to the existing CRUSH map. Afterwards the OSD is running and fully functional. Please create at least 12 OSDs, distributed among your nodes (4 on each node).
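Creating four OSDs per node means repeating the same command for each data disk. As a sketch, a loop can do this with one shared journal SSD. `create_osds` and `DRY_RUN` are hypothetical names introduced for this example, and the device names other than /dev/sdf and /dev/sdb are placeholders that you must replace with your own.

```shell
# Create one OSD per data disk, all using the same journal device.
# First argument: journal device; remaining arguments: data disks.
# With DRY_RUN set to a non-empty value, the commands are printed, not executed.
create_osds() {
    journal=$1
    shift
    for disk in "$@"; do
        ${DRY_RUN:+echo} pveceph createosd "$disk" -journal_dev "$journal" || return 1
    done
}

# Always preview first, because creating an OSD destroys the data on the disk:
#   DRY_RUN=1 create_osds /dev/sdb /dev/sdc /dev/sdd /dev/sde /dev/sdf
```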

Note that this command refuses to initialize a disk when it detects existing data. If you want to overwrite a disk, you must remove the existing data first. You can do that using:

# ceph-disk zap /dev/sd[X]

You can create OSDs containing both journal and data partitions or you can place the journal on a dedicated SSD. Using an SSD journal disk is highly recommended if you expect good performance.

Note:

In order to use a dedicated journal disk (SSD), the disk needs to have a GPT partition table. You can create this with 'gdisk /dev/sd(x)'. If there is no GPT, you cannot select the disk as journal. Currently the journal size is fixed at 5 GB.
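Whether a disk already carries a GPT can be checked before you try to use it as a journal. The sketch below assumes that the `parted` utility is installed; it relies on the "Partition Table:" line that `parted -s DEVICE print` outputs. The function name `has_gpt` is made up for this example.

```shell
# Succeed if the given disk has a GPT partition table, according to parted.
has_gpt() {
    parted -s "$1" print 2>/dev/null | grep -q '^Partition Table: gpt$'
}

# Usage (as root):
#   has_gpt /dev/sdb && echo "/dev/sdb can be used as journal disk"
```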

Ceph Pools

The standard installation creates some default pools, so you can either use the standard 'rbd' pool, or create your own pools using the GUI.

You can then configure Proxmox VE to use such pools to store VM images; just use the GUI ("Add Storage": RBD). A typical entry in the Proxmox VE storage configuration looks like:

# from /etc/pve/storage.cfg
rbd: my-ceph-pool
        monhost 10.10.10.1 10.10.10.2 10.10.10.3
        pool rbd
        content images
        username admin

You also need to copy the keyring to a predefined location:

# cd /etc/pve/priv/
# mkdir ceph
# cp ceph.client.admin.keyring ceph/my-ceph-pool.keyring
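Note that the keyring file is named after the storage ID from storage.cfg (my-ceph-pool in this example). A sketch that wraps the three commands and takes the storage ID as a parameter; `install_rbd_keyring` is a hypothetical helper, and the second argument exists only so the base directory can be overridden.

```shell
# Copy the admin keyring to <priv_dir>/ceph/<storage_id>.keyring.
# The base directory defaults to /etc/pve/priv, as used above.
install_rbd_keyring() {
    storage_id=$1
    priv_dir="${2:-/etc/pve/priv}"
    mkdir -p "$priv_dir/ceph" &&
        cp "$priv_dir/ceph.client.admin.keyring" "$priv_dir/ceph/$storage_id.keyring"
}

# Usage (as root), with the storage ID from the example above:
#   install_rbd_keyring my-ceph-pool
```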

Why do we need a new command line tool (pveceph)?

Proxmox VE provides a distributed file system (pmxcfs) to store configuration files.

We use this to store the Ceph configuration. The advantage is that all nodes see the same file, and there is no need to copy configuration data around using ssh/scp. The tool can also use additional information from your Proxmox VE setup.

Tools like ceph-deploy cannot take advantage of that architecture.

Video Tutorials

- Managing Ceph Server: http://youtu.be/ImyRUyMBrwo

Further readings about Ceph

Ceph comes with plenty of documentation at http://ceph.com/docs/master/. Even better, the dissertation from the creator of Ceph, Sage A. Weil, is also available at http://ceph.com/papers/weil-thesis.pdf. By reading it you can gain deep insight into how Ceph works.

- http://ceph.com/

- http://www.inktank.com/, Commercial support services for Ceph