Installation

Revision as of 12:57, 30 June 2016

Introduction

Proxmox VE installs the complete operating system and management tools in 3 to 5 minutes (depending on the hardware used).

The installation includes the following:

- Complete operating system (Debian Linux, 64-bit)

- Partitioning of the hard drive with ext4 (alternatively ext3 or xfs) or ZFS

- Proxmox VE Kernel with LXC and KVM support

- Complete toolset

- Web-based management interface

Please note: by default, the complete server is used and all existing data is removed.

If you want to set custom options for the installer, or need to debug the installation process on your server, you can use some special boot options.

Video tutorials

- List of all official tutorials on our Proxmox VE YouTube Channel

- Tutorials in Spanish language on ITexperts.es YouTube Play List

System requirements

For production servers, high-quality server equipment is needed. Keep in mind: if you run 10 Virtual Servers on one machine and that machine suffers a hardware failure, 10 services are lost. Proxmox VE supports clustering, which means that multiple Proxmox VE installations can be centrally managed thanks to the included cluster functionality.

Proxmox VE can use local storage (DAS), SAN, NAS and also distributed storage (Ceph RBD). For details, see Storage Model.

Minimum requirements, for evaluation

- CPU: 64-bit (Intel EM64T or AMD64), Intel VT/AMD-V capable CPU/mainboard (for KVM full virtualization support)

- RAM: 1 GB RAM

- Hard drive

- One NIC

Recommended system requirements

- CPU: 64-bit (Intel EM64T or AMD64), multi-core CPU recommended, Intel VT/AMD-V capable CPU/mainboard (for KVM full virtualization support)

- RAM: 8 GB is good, more is better

- Hardware RAID with battery-protected write cache (BBU) or flash-based protection (software RAID is not supported)

- Fast hard drives, best results with 15k rpm SAS in RAID 10

- At least two NICs; depending on the storage technology used, you may need more
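The CPU and RAM requirements can be checked from a Linux shell on the machine (for example a live system). A minimal sketch, assuming the standard /proc/cpuinfo flag names (`lm` for 64-bit long mode, `vmx` for Intel VT, `svm` for AMD-V) and the MemTotal line of /proc/meminfo; the function names are hypothetical:

```shell
#!/bin/sh
# Sketch: compare a host with the CPU and RAM requirements listed above.
# Assumption: Linux /proc files; "lm" = 64-bit long mode, "vmx" = Intel VT,
# "svm" = AMD-V. The file path is an optional parameter so that saved copies
# of /proc/cpuinfo or /proc/meminfo can be checked as well.

# Succeeds if the CPU flags include "lm" (64-bit capable).
cpu_is_64bit() {
    grep -Eq '^flags[[:space:]]*:.*[[:space:]]lm([[:space:]]|$)' "${1:-/proc/cpuinfo}"
}

# Succeeds if the CPU flags include "vmx" or "svm" (needed for KVM).
cpu_has_hw_virt() {
    grep -Eq '^flags[[:space:]]*:.*[[:space:]](vmx|svm)([[:space:]]|$)' "${1:-/proc/cpuinfo}"
}

# Prints the total RAM in MiB (MemTotal is reported in kB).
ram_mib() {
    awk '/^MemTotal:/ { print int($2 / 1024) }' "${1:-/proc/meminfo}"
}
```

For example: `cpu_is_64bit && cpu_has_hw_virt && echo "KVM capable"; echo "RAM: $(ram_mib) MiB"`. The flags only show what the CPU reports; hardware virtualization may additionally have to be enabled in the BIOS.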

Certified hardware

Basically, you can use any 64-bit hardware supported by RHEL 6. If you are unsure, post in the forum.

As the browser will be used to manage the Proxmox VE server, it would be prudent to follow safe browsing practices.

Steps to get your Proxmox VE up and running

Install Proxmox VE server

Proxmox VE installation (Video Tutorial)

If you need to install the outdated 1.9 release, check Installing Proxmox VE v1.9 post Lenny retirement

Optional: Install Proxmox VE on Debian 6 Squeeze (64 bit)

EOL.

See Install Proxmox VE on Debian Squeeze

Optional: Install Proxmox VE on Debian 7 Wheezy (64 bit)

EOL April 2016

See Install Proxmox VE on Debian Wheezy

Optional: Install Proxmox VE on Debian 8 Jessie (64 bit)

See Install Proxmox VE on Debian Jessie

Developer Workstations with Proxmox VE and X11

This page covers the installation of X11 and a basic desktop on top of Proxmox. Optional: Linux Mint Mate Desktop is also available.

Optional: Install Proxmox VE over iSCSI

See Proxmox ISCSI installation

Proxmox VE web interface

Configuration is done via the Proxmox web interface: just point your browser to the IP address given during installation (https://youripaddress:8006). Please make sure that your browser has the latest Oracle Java browser plugin installed. Proxmox VE is tested with IE9, Firefox 10 and higher, and Google Chrome (latest).

Default login is "root" and the root password is defined during the installation process.

Configure basic system settings

Please review the NIC setup, IP and hostname.

Note: changing the IP address or hostname after cluster creation is not possible (unless you know exactly what you are doing).
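Since the IP address and hostname are hard to change later, it is worth checking that the hostname resolves to the node's real address before creating a cluster. A minimal sketch, assuming the common Proxmox VE setup where the node's own name is listed in /etc/hosts with the address of its management interface (a loopback entry such as 127.0.1.1 is treated as a problem here); the function names are hypothetical:

```shell
#!/bin/sh
# Sketch: check that a hostname maps to a non-loopback address in /etc/hosts.
# Assumption: the node name should resolve to the management IP, not 127.x.

# Print the first address mapped to hostname $1 in hosts-format file $2.
hosts_ip() {
    awk -v h="$1" '$1 !~ /^#/ {
        for (i = 2; i <= NF; i++) if ($i == h) { print $1; exit }
    }' "${2:-/etc/hosts}"
}

# Print the address, or fail with a message if it is missing or loopback.
check_hostname_ip() {
    ip=$(hosts_ip "$1" "$2")
    case "$ip" in
        "")    echo "$1: not found in hosts file" >&2; return 1 ;;
        127.*) echo "$1: maps to loopback address $ip" >&2; return 1 ;;
        *)     echo "$ip" ;;
    esac
}
```

On the node itself: `check_hostname_ip "$(hostname)"`.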

Web interface on HTTPS (443) via nginx as proxy

Tested from Proxmox 3.4 - 4.2, still works fine!

Why do I need this?

Sometimes a firewall restriction blocks port 8006. Since we shouldn't touch the port configuration in Proxmox, we'll use nginx as a proxy to make the web interface available on the default HTTPS port 443. Now let's begin.

Install nginx

apt-get install nginx

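Remove the default site configuration (on Debian, the default site is enabled via /etc/nginx/sites-enabled/default)

rm /etc/nginx/sites-enabled/default

Create a new config file (Debian's nginx.conf only includes files in /etc/nginx/conf.d/ whose names end in .conf)

nano /etc/nginx/conf.d/proxmox.conf

The following is an example config that works for the web interface and noVNC: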

upstream proxmox {
    # Replace with your node's FQDN: nginx refuses to start if this name
    # does not resolve. This upstream is not used by proxy_pass below.
    server "FQDN HOSTNAME";
}

server {
    # Redirect plain HTTP to HTTPS
    listen 80 default_server;
    rewrite ^(.*) https://$host$1 permanent;
}

server {
    # "listen ... ssl" replaces the deprecated "ssl on;" directive
    listen 443 ssl;
    server_name _;
    ssl_certificate /etc/pve/local/pve-ssl.pem;
    ssl_certificate_key /etc/pve/local/pve-ssl.key;
    proxy_redirect off;
    location / {
        # WebSocket upgrade headers, needed for the noVNC console
        proxy_http_version 1.1;
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection "upgrade";
        proxy_pass https://localhost:8006;
        proxy_buffering off;
        client_max_body_size 0;
        proxy_connect_timeout 3600s;
        proxy_read_timeout 3600s;
        proxy_send_timeout 3600s;
        send_timeout 3600s;
    }
}

Check the configuration and restart nginx (a restart also loads the new configuration, so a separate reload is not needed)

nginx -t && /etc/init.d/nginx restart

Enjoy the web interface on HTTPS port 443!

Get Appliance Templates

Download

Go to the content tab of your storage (e.g. "local") and download pre-built Virtual Appliances directly to your server. This list is maintained by the Proxmox VE team, and more appliances are added over time. This is the easiest way and a good place to start.

If you have an NFS server, you can use an NFS share for storing ISO images. To start, configure the NFS ISO store in the web interface (Configuration/Storage).
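From the node's shell, the same two tasks can be sketched with `pveam` and `pvesm`. Assumptions: Proxmox VE 4.x (where `pveam` manages the appliance list); the storage ID, server address and export path are placeholders; `get_template`, `add_nfs_iso_store` and the `DRY_RUN` switch are hypothetical names. With `DRY_RUN=1` the commands are only printed:

```shell
#!/bin/sh
# Sketch: command-line counterparts of the download and NFS ISO store steps.
# Assumption: Proxmox VE 4.x tools; all IDs and addresses are placeholders.

# Print the command when DRY_RUN is set, otherwise execute it.
run() {
    if [ -n "$DRY_RUN" ]; then echo "$*"; else "$@"; fi
}

# Refresh the appliance list, then download one template to a storage.
get_template() {
    storage=$1 template=$2
    run pveam update || return
    run pveam download "$storage" "$template"
}

# Register an NFS export as a storage that holds ISO images only.
add_nfs_iso_store() {
    id=$1 server=$2 export_path=$3
    run pvesm add nfs "$id" --server "$server" --export "$export_path" --content iso
}
```

`pveam available` lists the template names that can be passed to `get_template`, e.g. `get_template local <name from the list>`.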

Upload from your desktop

If you already have Virtual Appliances, you can upload them via the upload button. To install a virtual machine from an ISO image (using KVM full virtualization), just upload the ISO file via the upload button.

Directly to file system

Templates and ISO images are stored on the Proxmox VE server (see /var/lib/vz/template/cache for OpenVZ templates and /var/lib/vz/template/iso for ISO images). You can also transfer templates and ISO images to these directories via secure copy (scp). If you work on a Windows desktop, you can use a graphical scp client like WinSCP.
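A small sketch of the scp route, assuming ISO images end in `.iso` and templates are `.tar.gz` archives (other template formats are not handled); `pve_target_dir` and `pve_upload` are hypothetical helper names, and the host in the usage line is a placeholder:

```shell
#!/bin/sh
# Hypothetical helpers: pick the template/ISO directory on the Proxmox VE
# host by file name and copy the file there with scp.
# Assumption: *.iso = ISO image, *.tar.gz = container template.

# Print the target directory for a file, or fail for unknown types.
pve_target_dir() {
    case "$1" in
        *.iso)    echo /var/lib/vz/template/iso ;;
        *.tar.gz) echo /var/lib/vz/template/cache ;;
        *)        return 1 ;;
    esac
}

# Copy file $1 to host $2 (e.g. root@192.168.1.10) into the matching directory.
pve_upload() {
    file=$1 host=$2
    dir=$(pve_target_dir "$file") || { echo "unknown file type: $file" >&2; return 1; }
    scp "$file" "$host:$dir/"
}
```

Usage: `pve_upload debian-8.iso root@192.168.1.10`.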

Optional: Reverting Thin-LVM to "old" Behavior of /var/lib/vz (Proxmox 4.2 and later)

If you installed Proxmox 4.2 (or later), you are confronted with a changed layout of your data: there is no longer a mounted /var/lib/vz LVM volume; instead, you find a thin-provisioned volume. This is technically the right choice, but sometimes one wants the old behavior back. This section describes the steps to revert to the "old" layout on a freshly installed Proxmox 4.2:

- After the installation, your storage configuration in /etc/pve/storage.cfg will look like this:

dir: local
        path /var/lib/vz
        content iso,vztmpl,backup

lvmthin: local-lvm
        thinpool data
        vgname pve
        content rootdir,images

- You can delete the thin-provisioned storage (local-lvm) via the GUI or manually, and you have to configure the local directory to store disk images and containers as well. In the end, you should have a config like this:

dir: local
        path /var/lib/vz
        maxfiles 0
        content backup,iso,vztmpl,rootdir,images

- Now you need to recreate /var/lib/vz:

root@pve-42 ~ > lvs
  LV   VG  Attr       LSize  Pool Origin Data%  Meta%  Move Log Cpy%Sync Convert
  data pve twi-a-tz-- 16.38g             0.00   0.49
  root pve -wi-ao----  7.75g
  swap pve -wi-ao----  3.88g
root@pve-42 ~ > lvremove pve/data
Do you really want to remove active logical volume data? [y/n]: y
Logical volume "data" successfully removed
root@pve-42 ~ > lvcreate --name data -l +100%FREE pve
Logical volume "data" created.
root@pve-42 ~ > mkfs.ext4 /dev/pve/data
mke2fs 1.42.12 (29-Aug-2014)
Discarding device blocks: done
Creating filesystem with 5307392 4k blocks and 1327104 inodes
Filesystem UUID: 310d346a-de4e-48ae-83d0-4119088af2e3
Superblock backups stored on blocks:
        32768, 98304, 163840, 229376, 294912, 819200, 884736, 1605632, 2654208,
        4096000

Allocating group tables: done
Writing inode tables: done
Creating journal (32768 blocks): done
Writing superblocks and filesystem accounting information: done

- Then add the new volume to your /etc/fstab:

/dev/pve/data /var/lib/vz ext4 defaults 0 1

- Restart to check if everything survives a reboot.

You should end up with a working "old-style" configuration where you "see" your files as they were before Proxmox 4.2.
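The whole procedure can be sketched as one shell script. This is only a sketch under assumptions: the default names from the storage.cfg shown above (volume group `pve`, thin pool `data`, storages `local` and `local-lvm`), `pvesm` options as in Proxmox VE 4.x, and a fresh installation, since removing `pve/data` destroys every disk on the thin pool. `revert_thin_lvm`, `add_fstab_line` and the `DRY_RUN` switch are hypothetical names; run it with `DRY_RUN=1` first to see the commands:

```shell
#!/bin/sh
# Sketch of the revert steps above, for a FRESHLY installed Proxmox VE 4.2
# only: it deletes the thin pool pve/data and every guest disk stored on it.
# Assumptions: default VG/pool/storage names; pvesm options as in PVE 4.x.

FSTAB_LINE='/dev/pve/data /var/lib/vz ext4 defaults 0 1'

# Print the command when DRY_RUN is set, otherwise execute it.
run() {
    if [ -n "$DRY_RUN" ]; then echo "$*"; else "$@"; fi
}

# Append a line to an fstab-style file unless the exact line is already there.
add_fstab_line() {
    line=$1 fstab=${2:-/etc/fstab}
    grep -qxF "$line" "$fstab" 2>/dev/null || echo "$line" >> "$fstab"
}

revert_thin_lvm() {
    # 1. Drop the thin storage and let "local" hold disk images and containers.
    run pvesm remove local-lvm || return
    run pvesm set local --content backup,iso,vztmpl,rootdir,images --maxfiles 0 || return
    # 2. Replace the thin pool with a plain LV and format it as ext4.
    run lvremove pve/data || return
    run lvcreate --name data -l +100%FREE pve || return
    run mkfs.ext4 /dev/pve/data || return
    # 3. Mount it on /var/lib/vz at boot (reboot afterwards to verify).
    if [ -n "$DRY_RUN" ]; then
        echo "append to /etc/fstab: $FSTAB_LINE"
    else
        add_fstab_line "$FSTAB_LINE"
    fi
}
```

Running `revert_thin_lvm` for real still asks for confirmation at the `lvremove` step; reboot afterwards as described above.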

Create Virtual Machines

Linux Containers (LXC)

See Linux Container for a detailed description.

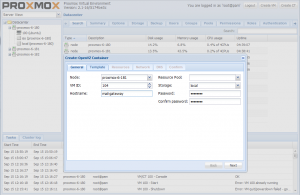

Container (OpenVZ)

First, get the appropriate appliance template(s).

Then just click "Create CT":

General

- Node: If you have several Proxmox VE servers, select the node where you want to create the new container

- VM ID: choose a virtual machine identification number, just use the given ID or overwrite the suggested one

- Hostname: give a unique server name for the new container

- Resource Pool: select a previously created resource pool (optional)

- Storage: select the storage for your container

- Password: set the root password for your container

Template

- Storage: select your template data store (you need to download templates before you can select them here)

- Template: choose the template

Resources

- Memory (MB): set the memory (RAM)

- Swap (MB): set the swap

- Disk size (GB): set the total disk size

- CPUs: set the number of CPUs (if you run Java inside your container, choose at least 2 here)

Network

- Routed mode (venet): the default; give the container a unique IP address

- Bridged mode

- Only in some cases do you need Bridged Ethernet (veth) (see Differences_between_venet_and_veth on the OpenVZ wiki for details). If you select Bridged Ethernet, the IP configuration has to be done inside the container, as you would do it on a physical server.

DNS

- DNS Domain: e.g. yourdomain.com

- First/Second DNS Servers: enter DNS servers

Confirm

This tab shows a summary; please check that everything is set as needed. If you need to change a setting, you can jump back to the previous tabs by clicking on them.

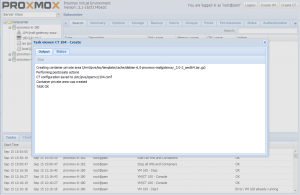

After you click "Finish", all settings are applied. Wait for completion (this can take from a few seconds up to a minute, depending on the template used and your hardware).

CentOS 7

If you can't ping a CentOS 7 container: to get rid of problems with venet0 when running a CentOS 7 container (OpenVZ), apply the patch redhat-add_ip.sh-patch as follows:

1. Transfer the patch file to a working directory on the Proxmox VE host (e.g. /root) and extract it (unzip).

2. Run this command:

# patch -p0 < redhat-add_ip.sh-patch

3. Stop and start the respective container.

Patch file: http://forum.proxmox.com/threads/22770-fix-for-centos-7-container-networking
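Steps 2 and 3 can also be run as a small script. A sketch, assuming Proxmox VE 3.x with OpenVZ (`vzctl`), GNU `patch` (its `--dry-run` option checks that the patch applies before anything is changed), and that it is run from the directory used in step 1; the function name and the container ID 101 in the example are placeholders:

```shell
#!/bin/sh
# Sketch of steps 2 and 3 for the CentOS 7 venet fix (OpenVZ containers).
# Assumption: the extracted patch file is in the current directory.

# Print the command when DRY_RUN is set, otherwise execute it.
run() {
    if [ -n "$DRY_RUN" ]; then echo "$*"; else "$@"; fi
}

apply_centos7_venet_fix() {
    ctid=$1 patchfile=${2:-redhat-add_ip.sh-patch}
    # Check first that the patch applies cleanly, then apply it for real.
    run patch -p0 --dry-run -i "$patchfile" || return
    run patch -p0 -i "$patchfile" || return
    # Stop and start the container so it picks up the fixed script.
    run vzctl stop "$ctid" || return
    run vzctl start "$ctid"
}
```

Usage: `apply_centos7_venet_fix 101`.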

Video Tutorials

Virtual Machines (KVM)

Just click "Create VM":

General

- Node: If you have several Proxmox VE servers, select the node where you want to create the new VM

- VM ID: choose a virtual machine identification number, just use the given ID or overwrite the suggested one

- Name: choose a name for your VM (this is not the hostname), can be changed any time

- Resource Pool: select a previously created resource pool (optional)

OS

Select the Operating System (OS) of your VM

CD/DVD

- Use CD/DVD disc image file (iso): select the storage where you previously uploaded your ISO images and choose the file

- Use physical CD/DVD Drive: choose this to use the CD/DVD from your Proxmox VE node

- Do not use any media: choose this if you do not want any media

Hard disk

- Bus/Device: choose the bus type; if your guest supports it, choose virtio

- Storage: select the storage where you want to store the disk

- Disk size (GB): define the size

- Format: Define the disk image format. For good performance, go for raw. If you plan to use snapshots, go for qcow2.

- Cache: define the cache policy for the virtual disk

- Limits: (if necessary) set the maximum transfer speeds

CPU

- Sockets: set the number of CPU sockets

- Cores: set the number of CPU Cores per socket

- CPU type: select CPU type

- Total cores: never use more CPU cores than are physically available on the Proxmox VE host

Memory

- Memory (MB): set the memory (RAM) for your VM

Network

- Bridged mode: this is the default setting; just choose the bridge to which you want to connect your VM. If you want to use VLANs, you can define the VLAN tag for the VM

- NAT mode

- No network device

- Model: choose the emulated network device; if your guest supports it, choose virtio

- MAC address: use 'auto' or overwrite with a valid and unique MAC address

- Rate limit (MB/s): set a speed limit for this network adapter

Confirm

This tab shows a summary; please check that everything is set as needed. If you need to change a setting, you can jump back to the previous tabs by clicking on them. After you click "Finish", all settings are applied; wait for completion (this process just takes a second).
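The same VM can also be created from the node's shell with `qm create`. The sketch below maps a few wizard fields to `qm` options; the storage `local` (with disk images enabled, as in Proxmox VE 3.x or after reverting Thin-LVM), the bridge `vmbr0`, the Linux guest type `l26`, 1 socket with 2 cores and a qcow2 virtio disk are assumptions, not wizard defaults, and `create_vm` is a hypothetical helper. With `DRY_RUN=1` it only prints the command:

```shell
#!/bin/sh
# Hypothetical helper mirroring the "Create VM" wizard with qm create.
# Assumptions: storage "local" holds images and ISOs, bridge "vmbr0" exists.

# Print the command when DRY_RUN is set, otherwise execute it.
run() {
    if [ -n "$DRY_RUN" ]; then echo "$*"; else "$@"; fi
}

# create_vm VMID NAME ISO_FILE DISK_GB MEMORY_MB
create_vm() {
    vmid=$1 name=$2 iso=$3 disk_gb=$4 memory_mb=$5
    # OS type, CD/DVD image, virtio disk (new volume of DISK_GB on "local"),
    # CPU, memory and a virtio NIC on the bridge.
    run qm create "$vmid" \
        --name "$name" \
        --ostype l26 \
        --cdrom "local:iso/$iso" \
        --virtio0 "local:$disk_gb,format=qcow2" \
        --sockets 1 --cores 2 \
        --memory "$memory_mb" \
        --net0 virtio,bridge=vmbr0
}
```

Usage: `create_vm 100 test debian.iso 32 2048`, then start the VM from the GUI or with `qm start 100`.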

Video Tutorials

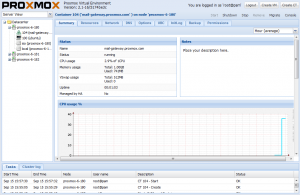

Managing Virtual Machines

Go to "VM Manager/Virtual Machines" to see a list of your Virtual Machines.

Basic tasks are available from the drop-down menu (red arrow):

- start, restart, shutdown, stop

- migrate: migrate a Virtual Machine to another physical host (you need at least two Proxmox VE servers; see Proxmox VE Cluster)

- console: for containers, the VNC console automatically logs in as root; for KVM Virtual Machines, the console shows the screen of the fully virtualized machine
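For KVM guests, the menu actions have `qm` counterparts on the node's shell (containers use other tools, `vzctl` for OpenVZ and `pct` for LXC, and are not covered). A sketch with a hypothetical `vm_action` helper; mapping "restart" to shutdown followed by start, and using `--online` for live migration, are choices made here, not necessarily what the GUI does internally:

```shell
#!/bin/sh
# Hypothetical helper mapping the menu actions to qm commands (KVM only).

# Print the command when DRY_RUN is set, otherwise execute it.
run() {
    if [ -n "$DRY_RUN" ]; then echo "$*"; else "$@"; fi
}

# vm_action ACTION VMID [TARGET_NODE]
vm_action() {
    action=$1 vmid=$2 target=$3
    case "$action" in
        start|shutdown|stop) run qm "$action" "$vmid" ;;
        restart) run qm shutdown "$vmid" && run qm start "$vmid" ;;
        migrate) run qm migrate "$vmid" "$target" --online ;;
        *) echo "unknown action: $action" >&2; return 1 ;;
    esac
}
```

Usage: `vm_action migrate 100 node2` moves VM 100 to the cluster node `node2` while it keeps running.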

For a detailed view and configuration changes just click on a Virtual Machine row in the list of VMs.

"Logs" on a container Virtual Machine:

- Boot/Init: shows the Boot/Init logs generated during start or stop

- Command: see the current/last executed task

- Syslog: see the real time syslog of the Virtual Machine

Networking and Firewall

See Network Model and Proxmox VE Firewall