Multicast notes

Revision as of 19:35, 13 October 2014 by Brad House

Introduction

Multicast allows a single transmission to be delivered to multiple servers at the same time.

Multicast is the basis for cluster communication in Proxmox VE 2.0 and later, which uses corosync and cman. The same applies to any other solution built on those clustering tools.

If multicast does not work in your network infrastructure, you should fix it so that it does. If all else fails, use unicast instead, but beware of the node count limitations with unicast.

IGMP snooping

IGMP snooping prevents multicast traffic from being flooded to all ports in the broadcast domain by forwarding it only to ports that have requested such traffic. IGMP snooping is offered by most major switch manufacturers and is often enabled by default. For a switch to snoop IGMP traffic properly, there must be an IGMP querier on the network. If no querier is present, IGMP snooping will actively prevent ALL IGMP/multicast traffic from being delivered!

If IGMP snooping is disabled, all multicast traffic will be delivered to all ports. This may add unnecessary load and could potentially enable a denial-of-service attack.

IGMP querier

An IGMP querier is a multicast router that generates IGMP queries. IGMP snooping relies on these queries, which are unconditionally forwarded to all ports. The replies from the destination ports are what build the switch's internal tables, and those tables tell the switch which traffic to forward to which port.

An IGMP querier can be enabled on your router, your switch, or even on a Linux bridge.

Configuring IGMP/Multicast

Ensuring IGMP Snooping and Querier are enabled on your network (recommended)

Juniper - JunOS

Juniper EX switches enable IGMP snooping on all VLANs by default, as this config snippet shows:

[edit protocols]

user@switch# show igmp-snooping

vlan all;

However, the IGMP querier is not enabled by default. If you are already using RVIs (Routed VLAN Interfaces) on your switch, you can enable IGMP v2 on the interface, and this enables the querier. Most administrators do not use RVIs in every VLAN on their switches, though, so the querier should instead be configured on the router. The following setting is the same on Juniper EX switches using RVIs as on Juniper SRX Services Gateways/routers, and it effectively enables the IGMP querier on the specified interface/VLAN. Note: you must set this on every VLAN that requires multicast!

set protocols igmp interface $iface version 2

Cisco

Brocade

Linux: Enabling Multicast querier on bridges

If your router or switch does not support enabling a multicast querier, and you are using a classic Linux bridge (not Open vSwitch), you can enable the multicast querier on the Linux bridge itself. To do this, add the following statement to the bridge configuration in /etc/network/interfaces:

post-up ( echo 1 > /sys/devices/virtual/net/$IFACE/bridge/multicast_querier )
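After you add the post-up line and restart networking (or reboot), you can read the bridge's sysfs attributes back to confirm that the querier is active. The following is a minimal sketch that assumes a bridge such as vmbr0 (the name is only an example). The function name bridge_mcast_status and its optional second argument, an alternative sysfs root that is useful for testing, are hypothetical.

```shell
# Hypothetical helper: print the IGMP snooping/querier state of a Linux bridge.
# Usage: bridge_mcast_status BRIDGE [SYSFS_NET_ROOT]
# SYSFS_NET_ROOT defaults to /sys/class/net. It is a parameter only so the
# function can be tried against a fake directory tree.
bridge_mcast_status() {
    local br="$1" root="${2:-/sys/class/net}"
    local dir="$root/$br/bridge" snooping querier
    if [ ! -d "$dir" ]; then
        echo "$br: not a Linux bridge (no $dir)" >&2
        return 1
    fi
    snooping=$(cat "$dir/multicast_snooping")
    querier=$(cat "$dir/multicast_querier")
    echo "$br snooping=$snooping querier=$querier"
    # Snooping without a querier on this bridge is only safe if a router or
    # switch elsewhere on the network acts as the IGMP querier.
    if [ "$snooping" = 1 ] && [ "$querier" = 0 ]; then
        echo "$br: warning: snooping is on but this bridge is not a querier" >&2
    fi
}
```

For example, once the post-up line has taken effect, `bridge_mcast_status vmbr0` should report `querier=1`.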

Disabling IGMP Snooping (not recommended)

Juniper - JunOS

set protocols igmp-snooping vlan all disable

Cisco Managed Switches

# conf t

# no ip igmp snooping

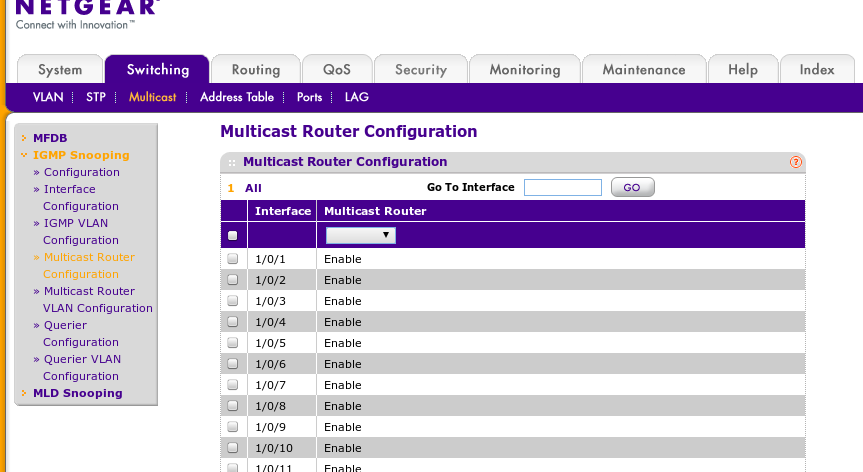

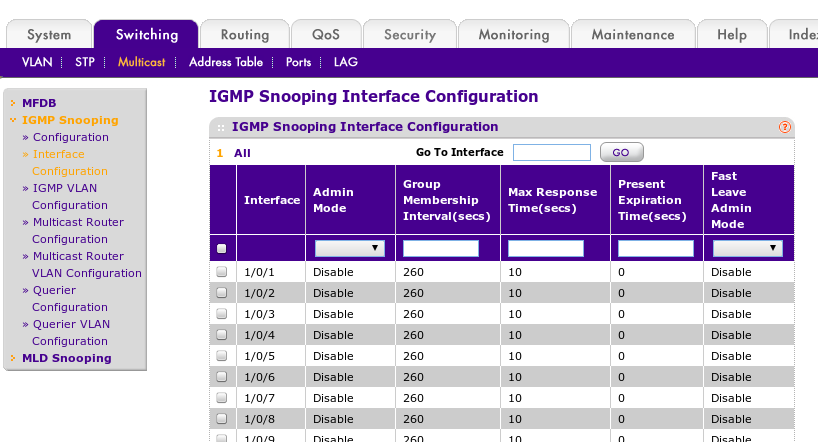

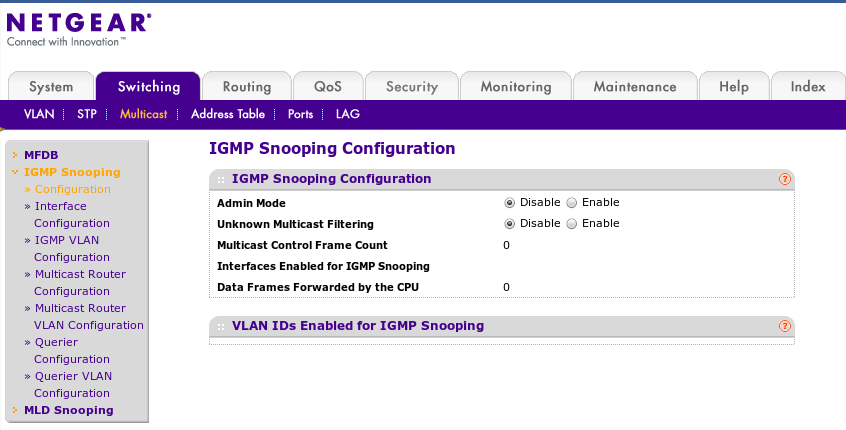

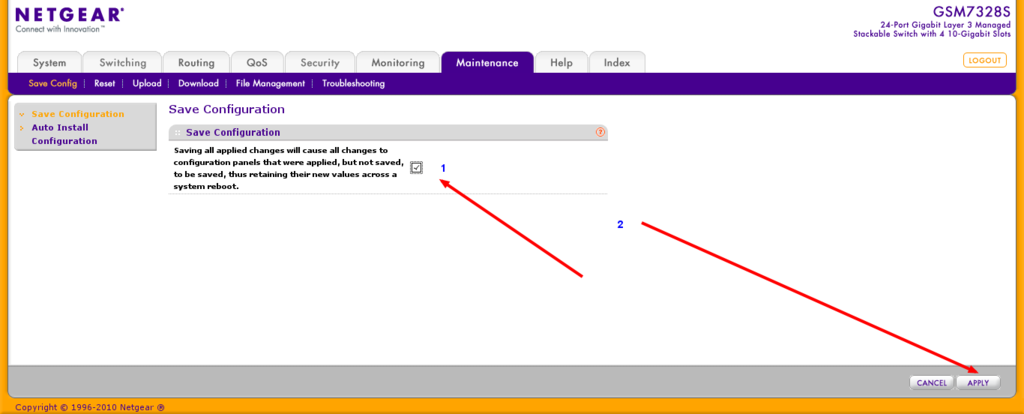

Netgear Managed Switches

the following are pics of setting to get multicast working on our netgear 7300 series switches. for more information see http://documentation.netgear.com/gs700at/enu/202-10360-01/GS700AT%20Series%20UG-06-18.html

Multicast with Infiniband

IP over InfiniBand (IPoIB) supports multicast. However, when using connected mode, multicast traffic is limited to 2044 bytes even if you set a larger MTU on the IPoIB interface.

Corosync has a setting, netmtu, that defaults to 1500, which keeps it compatible with connected-mode InfiniBand.

Changing netmtu

Changing netmtu can increase throughput. The following information is untested.

Edit the /etc/pve/cluster.conf file and add the following section (remember to increment config_version):

<totem netmtu="2044" />

For example:

<?xml version="1.0"?>

<cluster name="clustername" config_version="2">

<totem netmtu="2044" />

<cman keyfile="/var/lib/pve-cluster/corosync.authkey">

</cman>

<clusternodes>

<clusternode name="node1" votes="1" nodeid="1"/>

<clusternode name="node2" votes="1" nodeid="2"/>

<clusternode name="node3" votes="1" nodeid="3"/>

</clusternodes>

</cluster>
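If you prefer to script this change, the sketch below inserts a `<totem netmtu="..."/>` element directly after the opening `<cluster ...>` tag and increments config_version. It assumes GNU sed (as on Debian/Proxmox VE) and a single config_version attribute, as in the example above. The function name set_netmtu is hypothetical. Point it at the file you are editing, for example a cluster.conf.new copy made as described in the Fencing howto.

```shell
# Hypothetical helper: add <totem netmtu="MTU"/> to a cluster.conf file and
# bump config_version. Requires GNU sed (-i and one-line "a" text).
set_netmtu() {
    local conf="$1" mtu="${2:-2044}" ver
    if grep -q '<totem' "$conf"; then
        echo "$conf already has a <totem> element; edit it by hand" >&2
        return 1
    fi
    # Read the current version number from config_version="N".
    ver=$(sed -n 's/.*config_version="\([0-9]*\)".*/\1/p' "$conf" | head -n 1)
    if [ -z "$ver" ]; then
        echo "no config_version found in $conf" >&2
        return 1
    fi
    # Increment the version and append the totem line after <cluster ...>.
    # The pattern does not match <clusternodes> or </cluster>.
    sed -i \
        -e "s/config_version=\"$ver\"/config_version=\"$((ver + 1))\"/" \
        -e "/<cluster[ >]/a <totem netmtu=\"$mtu\"/>" \
        "$conf"
}
```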

Testing multicast

Note: not all hosting companies allow multicast traffic.

Using omping

Install on all nodes

aptitude install omping

Start omping on all nodes with the following command and check the output, e.g.:

omping node1 node2 node3
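Instead of typing the node names by hand, you can take them from the cluster configuration so that every node tests against the same list. This is a sketch that assumes each `<clusternode>` element carries a `name="..."` attribute, as in the cluster.conf example above. cluster_nodes is a hypothetical helper name.

```shell
# Hypothetical helper: print the node names listed in a cluster.conf,
# one per line. Defaults to /etc/pve/cluster.conf.
cluster_nodes() {
    # Grab each <clusternode ...> tag, then keep only its name="..." value.
    grep -o '<clusternode [^>]*' "${1:-/etc/pve/cluster.conf}" |
        sed -n 's/.*[[:space:]]name="\([^"]*\)".*/\1/p'
}

# Example (start on every node at roughly the same time):
#   omping $(cluster_nodes)
```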

Using ssmping

Install on all nodes

aptitude install ssmping

Run this on node A:

ssmpingd

Then run this on node B:

asmping 224.0.2.1 ip_for_NODE_A_here

Example output:

asmping joined (S,G) = (*,224.0.2.234)

pinging 192.168.8.6 from 192.168.8.5

unicast from 192.168.8.6, seq=1 dist=0 time=0.221 ms

unicast from 192.168.8.6, seq=2 dist=0 time=0.229 ms

multicast from 192.168.8.6, seq=2 dist=0 time=0.261 ms

unicast from 192.168.8.6, seq=3 dist=0 time=0.198 ms

multicast from 192.168.8.6, seq=3 dist=0 time=0.213 ms

unicast from 192.168.8.6, seq=4 dist=0 time=0.234 ms

multicast from 192.168.8.6, seq=4 dist=0 time=0.248 ms

unicast from 192.168.8.6, seq=5 dist=0 time=0.249 ms

multicast from 192.168.8.6, seq=5 dist=0 time=0.263 ms

unicast from 192.168.8.6, seq=6 dist=0 time=0.250 ms

multicast from 192.168.8.6, seq=6 dist=0 time=0.264 ms

unicast from 192.168.8.6, seq=7 dist=0 time=0.245 ms

multicast from 192.168.8.6, seq=7 dist=0 time=0.260 ms

For more information see

man ssmping

and

less /usr/share/doc/ssmping/README.gz
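In the example output, each unicast reply from node A is matched by a multicast reply, apart from the first sequence number. If you see only unicast replies, multicast is being dropped somewhere between the nodes. The sketch below counts both kinds of reply in saved asmping output. It assumes the line format shown in the example output. The function name asmping_summary and the pass/fail rule (at least one multicast reply) are illustrative assumptions, not part of ssmping.

```shell
# Hypothetical helper: count unicast and multicast replies in asmping output
# read from a file (or from stdin when no file is given).
# Exit status is 0 if at least one multicast reply was seen, 1 otherwise.
asmping_summary() {
    awk '
        /^unicast from /   { u++ }
        /^multicast from / { m++ }
        END {
            printf "unicast=%d multicast=%d\n", u, m
            exit (m > 0 ? 0 : 1)
        }
    ' "${1:--}"
}
```

For example, `asmping_summary asmping.log` prints the two counts for a saved run. A non-zero exit status means that no multicast replies were seen.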

ssmping notes

The ssmping package includes a few other programs that may be useful. Here is a list of the files in the package:

apt-file list ssmping

ssmping: /usr/bin/asmping

ssmping: /usr/bin/mcfirst

ssmping: /usr/bin/ssmping

ssmping: /usr/bin/ssmpingd

ssmping: /usr/share/doc/ssmping/README.gz

ssmping: /usr/share/doc/ssmping/changelog.Debian.gz

ssmping: /usr/share/doc/ssmping/copyright

ssmping: /usr/share/man/man1/asmping.1.gz

ssmping: /usr/share/man/man1/mcfirst.1.gz

ssmping: /usr/share/man/man1/ssmping.1.gz

ssmping: /usr/share/man/man1/ssmpingd.1.gz

Troubleshooting

cman & iptables

If cman crashes with "cpg_send_message failed: 9", add these rules to your rule set:

iptables -A INPUT -m addrtype --dst-type MULTICAST -j ACCEPT

iptables -A INPUT -p udp -m state --state NEW -m multiport --dports 5404,5405 -j ACCEPT
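Running `iptables -A` a second time adds a duplicate rule. The sketch below appends each of the two rules above only when `iptables -C` (check) does not already find it. The function names add_rule_once and allow_cman_multicast, and the IPTABLES override variable (which lets the logic be tested without root), are hypothetical.

```shell
# Hypothetical helper: append a rule to the INPUT chain unless an identical
# rule already exists. IPTABLES may be overridden; it defaults to iptables.
add_rule_once() {
    local ipt="${IPTABLES:-iptables}"
    if "$ipt" -C INPUT "$@" 2>/dev/null; then
        return 0    # rule already present, nothing to do
    fi
    "$ipt" -A INPUT "$@"
}

allow_cman_multicast() {
    # Accept packets addressed to multicast destinations.
    add_rule_once -m addrtype --dst-type MULTICAST -j ACCEPT
    # Accept new UDP traffic on ports 5404 and 5405.
    add_rule_once -p udp -m state --state NEW -m multiport --dports 5404,5405 -j ACCEPT
}
```

Run `allow_cman_multicast` as root on each node. Calling it repeatedly leaves a single copy of each rule.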

Use unicast instead of multicast (if all else fails)

Unicast is a technology for sending messages to a single network destination. In corosync, unicast is implemented as UDP-unicast (UDPU). Because unicast generates more network traffic than multicast, the number of supported nodes is limited: do not use it with more than 4 cluster nodes.

- Create the cluster as usual (pvecm create ...)

- Follow the howto at Fencing#General_HowTo_for_editing_the_cluster.conf to create a cluster.conf.new

- Add transport="udpu" to the cman element in /etc/pve/cluster.conf.new (don't forget to increment the version number, config_version)

<cman keyfile="/var/lib/pve-cluster/corosync.authkey" transport="udpu"/>

- Activate the new configuration via the GUI

- Add all nodes you want to join to /etc/hosts and reboot

- Before you add a node, make sure you have added all other nodes to /etc/hosts
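The cluster.conf.new edit from the list above can also be scripted. This is a minimal sketch that assumes GNU sed and a file laid out like the cluster.conf example above, with one config_version attribute and one `<cman` element. enable_udpu is a hypothetical name. It only prepares the file; you still activate it via the GUI.

```shell
# Hypothetical helper: add transport="udpu" to the <cman> element of a
# cluster.conf copy and increment config_version.
# Refuses to run if a transport is already set.
enable_udpu() {
    local conf="$1" ver
    if grep -q 'transport=' "$conf"; then
        echo "$conf already sets a transport; not changing it" >&2
        return 1
    fi
    ver=$(sed -n 's/.*config_version="\([0-9]*\)".*/\1/p' "$conf" | head -n 1)
    if [ -z "$ver" ]; then
        echo "no config_version found in $conf" >&2
        return 1
    fi
    # "|" is used as the s delimiter so "/" can appear in the bracket
    # expression (matches <cman keyfile=..., <cman> and <cman/>).
    sed -i \
        -e "s/config_version=\"$ver\"/config_version=\"$((ver + 1))\"/" \
        -e 's|<cman\([ />]\)|<cman transport="udpu"\1|' \
        "$conf"
}
```

For example, run `enable_udpu /etc/pve/cluster.conf.new`, then review the file before activating it.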