Difference between revisions of "Performance Tweaks"


Latest revision as of 08:44, 30 August 2022

Introduction

This page is intended to be a collection of various performance tips/tweaks to help you get the most from your KVM virtual machines.

General

VirtIO

Use virtIO for disk and network for best performance.

- Linux has the drivers built in since Linux 2.6.24 as experimental, and since Linux 3.8 as stable

- FreeBSD has the drivers built in since 9.0

- Windows requires the Windows VirtIO Drivers to be downloaded and installed manually
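To confirm that a Linux guest is actually using VirtIO, one option is to look at the devices the kernel has registered on its virtio bus. The following is a minimal sketch, assuming the guest exposes sysfs at `/sys/bus/virtio/devices`; the function name `virtio_devices` is made up for illustration.

```python
from pathlib import Path


def virtio_devices(sysfs_dir="/sys/bus/virtio/devices"):
    """Return the names of the virtio devices registered in sysfs."""
    root = Path(sysfs_dir)
    if not root.is_dir():
        # No virtio bus: the drivers are missing or the VM has no virtio hardware.
        return []
    return sorted(entry.name for entry in root.iterdir())


if __name__ == "__main__":
    devices = virtio_devices()
    print(devices if devices else "no virtio devices found")
```

An empty result inside a guest suggests that the disk and network devices are still emulated (for example IDE or e1000) rather than VirtIO.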

Disk Cache

Note: The information below is based on using raw volumes; other volume formats may behave differently.

Small Overview

Note: The overview below depends on the specific hardware used. For example, a hardware RAID controller with a BBU-backed disk cache works just fine with 'writeback' mode, so treat it as a general overview only.

| Mode | Host Page Cache | Disk Write Cache | Notes |

|---|---|---|---|

| none | disabled | enabled | balances performance and safety (better writes) |

| writethrough | enabled | disabled | balances performance and safety (better reads) |

| writeback | enabled | enabled | fast, can lose data on power outage depending on hardware used |

| directsync | disabled | disabled | safest but slowest (relative to the others) |

| unsafe | enabled | enabled | doesn't flush data, fastest and least safe |

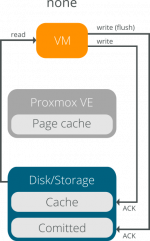

cache=none seems to give the best performance and has been the default since Proxmox 2.X.

- host page cache is not used

- guest disk cache is set to writeback

- Warning: like writeback, you can lose data in case of a power failure

- You need to use the barrier option in your Linux guest's fstab if kernel < 2.6.37 to avoid FS corruption in case of power failure.
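Whether the barrier advice applies can be checked inside the guest by comparing the running kernel release against 2.6.37. This is a small sketch under that assumption; the helper name `kernel_older_than` is hypothetical, and the actual mount option (typically `barrier=1` for ext3/ext4) depends on the filesystem in use.

```python
import platform
import re


def kernel_older_than(release, minimum=(2, 6, 37)):
    """True if a release string such as '2.6.32-5-amd64' is below `minimum`."""
    match = re.match(r"(\d+)(?:\.(\d+))?(?:\.(\d+))?", release)
    if match is None:
        raise ValueError(f"unrecognised kernel release: {release!r}")
    # Missing components (as in '3.8') are treated as zero.
    version = tuple(int(part or 0) for part in match.groups())
    return version < minimum


if __name__ == "__main__":
    release = platform.release()
    if kernel_older_than(release):
        print(f"{release}: add the barrier option to the guest's fstab")
    else:
        print(f"{release}: no explicit barrier option needed per the note above")
```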

This mode causes qemu-kvm to interact with the disk image file or block device with O_DIRECT semantics, so the host page cache is bypassed and I/O happens directly between the qemu-kvm userspace buffers and the storage device. Because the actual storage device may report a write as completed when placed in its write queue only, the guest's virtual storage adapter is informed that there is a writeback cache, so the guest would be expected to send down flush commands as needed to manage data integrity. Equivalent to direct access to your host's disk, performance-wise.

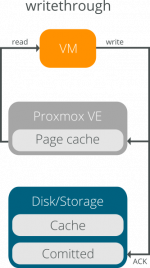

cache=writethrough

- host page cache is used as read cache

- guest disk cache mode is writethrough

- Writethrough causes an fsync for each write. It is the more secure cache mode: you can't lose data, but it is also slower.

This mode causes qemu-kvm to interact with the disk image file or block device with O_DSYNC semantics, where writes are reported as completed only when the data has been committed to the storage device. The host page cache is used in what can be termed a writethrough caching mode. Guest virtual storage adapter is informed that there is no writeback cache, so the guest would not need to send down flush commands to manage data integrity. The storage behaves as if there is a writethrough cache.
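The cost of O_DSYNC semantics can be observed from plain userspace, without any VM involved. The sketch below writes the same blocks once through the page cache only and once with `O_DSYNC`; it illustrates the flag itself, not how qemu-kvm is implemented. The function name, block size, and block count are arbitrary choices, and on a tmpfs-backed temporary directory the two timings may be nearly identical.

```python
import os
import tempfile
import time


def timed_writes(path, extra_flags, count=200, block=b"x" * 4096):
    """Write `count` blocks to `path` and return the elapsed seconds."""
    flags = os.O_WRONLY | os.O_CREAT | os.O_TRUNC | extra_flags
    fd = os.open(path, flags, 0o600)
    try:
        start = time.perf_counter()
        for _ in range(count):
            os.write(fd, block)
        return time.perf_counter() - start
    finally:
        os.close(fd)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        buffered = timed_writes(os.path.join(tmp, "buffered.bin"), 0)
        synced = timed_writes(os.path.join(tmp, "dsync.bin"), os.O_DSYNC)
        print(f"page cache only: {buffered:.4f}s, O_DSYNC: {synced:.4f}s")
```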

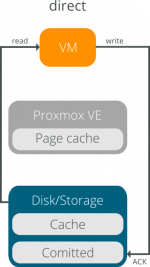

cache=directsync

- host page cache is not used

- guest disk cache mode is writethrough

- similar to writethrough, an fsync is made for each write.

This mode causes qemu-kvm to interact with the disk image file or block device with both O_DSYNC and O_DIRECT semantics, where writes are reported as completed only when the data has been committed to the storage device, and when it is also desirable to bypass the host page cache. Like cache=writethrough, it is helpful to guests that do not send flushes when needed. It was the last cache mode added, completing the possible combinations of caching and direct access semantics.

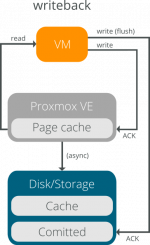

cache=writeback

- host page cache is used as read & write cache

- guest disk cache mode is writeback

- Warning: you can lose data in case of a power failure

- You need to use the barrier option in your Linux guest's fstab if kernel < 2.6.37 to avoid FS corruption in case of power failure.

This mode causes qemu-kvm to interact with the disk image file or block device with neither O_DSYNC nor O_DIRECT semantics, so the host page cache is used and writes are reported to the guest as completed when placed in the host page cache, and the normal page cache management will handle commitment to the storage device. Additionally, the guest's virtual storage adapter is informed of the writeback cache, so the guest would be expected to send down flush commands as needed to manage data integrity. Analogous to a raid controller with RAM cache.

cache=unsafe (writeback, unsafe)

- as writeback, but ignores flush commands from the guest!

- Warning: No data integrity even if the guest is sending flush commands. Not recommended for production use.
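The five modes differ only in which open flags are used and whether guest flushes are honoured, so they can be summarised as a small lookup table. This is an illustrative sketch built from the descriptions above; the names `CACHE_MODES`, `uses_host_page_cache`, and `write_waits_for_storage` are made up here and are not part of QEMU or Proxmox.

```python
# mode -> (open flags named in the descriptions above, guest flushes honoured)
CACHE_MODES = {
    "none": ({"O_DIRECT"}, True),
    "writethrough": ({"O_DSYNC"}, True),
    "directsync": ({"O_DIRECT", "O_DSYNC"}, True),
    "writeback": (set(), True),
    "unsafe": (set(), False),
}


def uses_host_page_cache(mode):
    """O_DIRECT bypasses the host page cache."""
    flags, _ = CACHE_MODES[mode]
    return "O_DIRECT" not in flags


def write_waits_for_storage(mode):
    """O_DSYNC reports a write as complete only once it is committed."""
    flags, _ = CACHE_MODES[mode]
    return "O_DSYNC" in flags


if __name__ == "__main__":
    for mode, (flags, flushes) in CACHE_MODES.items():
        print(mode, sorted(flags), "flushes honoured" if flushes else "flushes ignored")
```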

For read caching, try adding more memory to your guests; they already do the job with their own buffer cache.

cache=writethrough or directsync can also be quite fast if you have a SAN or hardware RAID controller with battery-backed cache.

Using qcow2 backed disks and either cache=directsync or writethrough can make things slower.
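On the command line, the cache mode is part of the drive option passed to `qm set`. The helper below only builds the argument list and does not run anything. The VM ID `100`, the slot `scsi0`, and the volume `local-lvm:vm-100-disk-0` are placeholder values, and the sketch assumes that any other options already set on that drive have to be repeated in the same option string.

```python
import shlex

VALID_CACHE_MODES = ("none", "writethrough", "writeback", "directsync", "unsafe")


def qm_set_cache(vmid, slot, volume, cache):
    """Build (but do not run) a `qm set` command that sets a drive's cache mode."""
    if cache not in VALID_CACHE_MODES:
        raise ValueError(f"unknown cache mode: {cache!r}")
    return ["qm", "set", str(vmid), f"--{slot}", f"{volume},cache={cache}"]


if __name__ == "__main__":
    # Placeholder VM ID, slot and volume; substitute your own values.
    command = qm_set_cache(100, "scsi0", "local-lvm:vm-100-disk-0", "none")
    print(shlex.join(command))
```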

Some interesting articles:

Cache mode and fsync: http://www.ilsistemista.net/index.php/virtualization/23-kvm-storage-performance-and-cache-settings-on-red-hat-enterprise-linux-62.html?start=2

OS Specific

Windows

USB Tablet Device

Disabling the USB tablet device in Windows VMs can reduce idle CPU usage and context switches. This can be done in the GUI. You can use vmmouse to keep the pointer in sync (load the drivers inside your VM). See http://forum.proxmox.com/threads/9981-disable-quot-usbdevice-tablet-quot-for-all-VM

Use raw disk image and not qcow2

Consider using a raw image or a partition for a disk, especially one holding Microsoft SQL database files, because qcow2 can be very slow under this type of load.

Trace Flag T8038 with Microsoft SQL Server

Setting the trace flag -T8038 will drastically reduce the number of context switches when running SQL Server 2005 or 2008.

To change the trace flag:

- Open the SQL Server Configuration Manager

- Open the properties for the SQL Server service, typically named MSSQLSERVER

- Go to the advanced tab

- Append ;-T8038 to the end of the startup parameters option
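The last step is a plain string edit on the semicolon-separated startup parameters. The sketch below shows that edit in isolation; it does not touch the registry or SQL Server, the function name is hypothetical, and the sample paths are invented.

```python
def append_startup_flag(startup_parameters, flag="-T8038"):
    """Append `flag` to a semicolon-separated parameter string if it is missing."""
    parts = [part for part in startup_parameters.split(";") if part]
    if flag not in parts:
        parts.append(flag)
    return ";".join(parts)


if __name__ == "__main__":
    # Invented example value; copy the real one from the Advanced tab.
    current = r"-dC:\data\master.mdf;-eC:\log\ERRORLOG;-lC:\data\mastlog.ldf"
    print(append_startup_flag(current))
```

Running it a second time on its own output leaves the string unchanged, so the flag is not duplicated.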

For additional references see the Proxmox forum: http://forum.proxmox.com/threads/5770-Windows-guest-high-context-switch-rate-when-idle

Do not use the Virtio Balloon Driver

The Balloon driver has been a source of performance problems on Windows, so you should avoid it. See http://forum.proxmox.com/threads/20265-SOLVED-Hyper-Threading-vs-No-Hyper-Threading-Fixed-vs-Variable-Memory for the discussion thread.
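The USB tablet and balloon recommendations above can be checked against a VM's configuration text. This is a rough sketch, assuming the `key: value` format of the files under `/etc/pve/qemu-server/`, that `tablet: 0` disables the tablet device, that `balloon: 0` disables ballooning, and that both are enabled when the key is absent. The function names are made up for illustration.

```python
def parse_vm_config(text):
    """Parse 'key: value' lines from the main section of a VM config."""
    options = {}
    for line in text.splitlines():
        line = line.strip()
        if line.startswith("["):
            break  # snapshot sections follow the main section
        if not line or line.startswith("#") or ":" not in line:
            continue
        key, value = line.split(":", 1)
        options[key.strip()] = value.strip()
    return options


def windows_tuning_findings(text):
    """List settings that differ from the Windows recommendations above."""
    options = parse_vm_config(text)
    findings = []
    if options.get("tablet", "1") != "0":
        findings.append("USB tablet device is enabled")
    if options.get("balloon") != "0":
        findings.append("balloon device is enabled")
    return findings


if __name__ == "__main__":
    sample = "memory: 4096\nostype: win10\ntablet: 0\n"
    print(windows_tuning_findings(sample))
```

On the host, `qm set <vmid> --tablet 0` and `qm set <vmid> --balloon 0` are the usual way to change these values; check `man qm` for the exact syntax on your version.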