Two-Node High Availability Cluster

No edit summary |

(archive) |

||

| (41 intermediate revisions by 9 users not shown) | |||

| Line 1: | Line 1: | ||

{{Note|Article about the old stable Proxmox VE 3.x releases}} | |||

This is a work in | == Introduction == | ||

This article explores how to build a two-node cluster with HA enabled under Proxmox 3.4. HA is generally recommended to be deployed on at least three nodes to prevent strange behaviors and potentially lethal data incoherence (for further info look for "Quorum"). | |||

Although in the case of two-node clusters it is recommended to use a third, shared quorum disk partition, Proxmox VE 3.4 allows to build the cluster without it. Let's see how. | |||

'''Note:''' This is NOT possible for Proxmox VE 4.0 and not intended for any Proxmox VE distributions and the better way is to add at least a third node to get HA. | |||

== System requirements == | |||

If you run HA, only high end server hardware with no single point of failure should be used. This includes redundant disks (Hardware Raid), redundant power supply, UPS systems, network bonding. | |||

*Fully configured [[Proxmox_VE_2.0_Cluster]], with 2 nodes. | |||

*Shared storage (SAN for Virtual Disk Image Store for HA KVM). In this case, no external storage was used. Instead, a cheaper alternative ([[DRBD]]) was tested. | |||

*Reliable network, suitable configured | |||

*[[Fencing]] device(s) - reliable and TESTED!. We will use HP's iLO for this example. | |||

== What is DRBD used for? == | |||

For this testing configuration, two [[DRBD]] resources were created, one for VM images an another one for VMs users data. Thanks to DRBD (if properly configured), a mirror raid is created through the network (be aware that, although possible, using WANs would mean high latencies). As VMs and data is replicated synchronously in both nodes, if one of them fails, it will be possible to restart "dead" machines on the other node without data loss. | |||

== Configuring Fencing == | |||

Fencing is vital for Proxmox to manage a node loss and thus provide effective HA. [[Fencing]] is the mechanism used to prevent data incoherences between nodes in a cluster by ensuring that a node reported as "dead" is really down. If it is not, a reboot or power-off signal is sent to force it to go to a safe state and prevent multiple instances of the same virtual machine run concurrently on different nodes. | |||

Many different methods can be used for fencing a node, so just a few changes would be needed to make this work under different scenarios. | |||

First, login to the CLI in any of your properly configure cluster machines. | |||

Then, create a copy of cluster.conf (append it .new): | |||

<pre> | |||

cp /etc/pve/cluster.conf /etc/pve/cluster.conf.new | |||

</pre> | |||

go and edit cluster.conf.new. In one of the first lines you will see this: | |||

<pre> | |||

<cluster alias="hpiloclust" config_version="12" name="hpiloclust"> | |||

</pre> | |||

Be sure to increase the number "config_version" each time you plan to apply new configurations as this is the internal mechanism used by the cluster configuration tools to detect new changes. | |||

To be able to create and manage a two-node cluster, edit the cman configuration part to include this: | |||

<pre> | |||

<cman two_node="1" expected_votes="1"> </cman> | |||

</pre> | |||

Now, add the available fencing devices to the config files by adding this lines (it is ok right after </clusternodes>): | |||

<pre> | |||

<fencedevices> | |||

<fencedevice agent="fence_ilo" hostname="nodeA.your.domain" login="hpilologin" name="fenceNodeA" passwd="hpilopwordA"/> | |||

<fencedevice agent="fence_ilo" hostname="nodeB.your.domain" login="hpilologin" name="fenceNodeB" passwd="hpilopwordB"/> | |||

</fencedevices> | |||

</pre> | |||

If you prefer to use IPs instead of host names, substitute: | |||

<pre> | |||

hostname="nodeA" | |||

</pre> | |||

for | |||

<pre> | |||

ipaddr="192.168.1.2" | |||

</pre> | |||

or whatever IP belongs to the iLO interface on that node. | |||

Next, you need to tell the cluster what fencing device is used to fence which host. Therefore, within the "<clusternode></clusternode>" tags, add the following accordingly to each node (do not care about the "method" name, choose whatever): | |||

<pre> | |||

<clusternode name="nodeA.your.domain" nodeid="1" votes="1"> | |||

<fence> | |||

<method name="1"> | |||

<device name="fenceNodeA" action="reboot"/> | |||

</method> | |||

</fence> | |||

</clusternode> | |||

<clusternode name="nodeB.your.domain" nodeid="2" votes="1"> | |||

<fence> | |||

<method name="1"> | |||

<device name="fenceNodeB" action="reboot"/> | |||

</method> | |||

</fence> | |||

</clusternode> | |||

</pre> | |||

Choose the "action" you prefer. "reboot" will fence the node and reboot it to wait for a proper state (i.e. waiting for network to be operative again). Also "off" could be used but this will require human intervention to boot the node again. | |||

So far, so good. The required fencing is now properly configured and must be tested prior to enter a production environment. | |||

=== Applying changes === | |||

Once the cluster.conf.new is properly modified and saved, it is time to apply changes to propagate them across all cluster nodes. This is done through the GUI and connected to the machine where that file has been modified. | |||

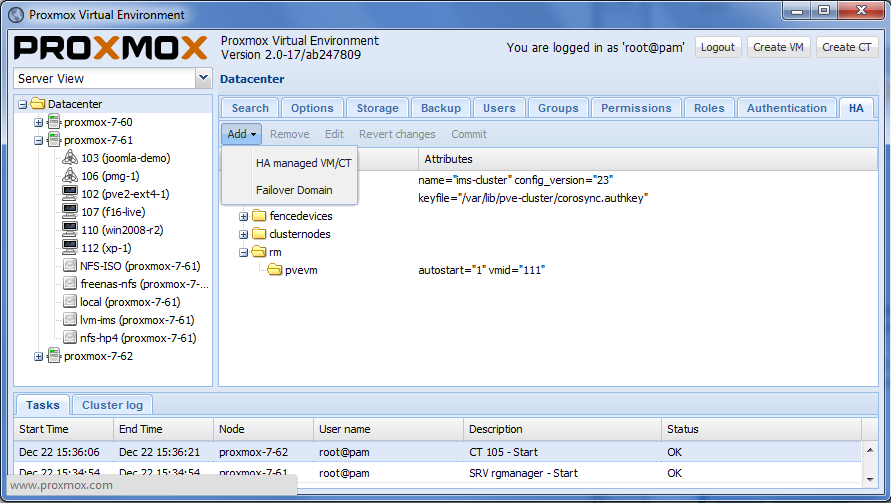

On the left of the screen select your cluster. Now, on the top right, select tab named "HA" (see next image). | |||

[[Image:Screen-Add-HA-managed_VM-CT.png|Screen-Add-HA-managed_VM-CT]] | |||

Now, on the bottom of the screen changes to apply will appear. If everything is correct, click "commit" and acknowledge the operation. Now two scenarios are possible: | |||

*Everything goes smoothly: no errors were detected, changes were applied and the new configuration was propagated across all cluster nodes. Now, the configuration tree should show the new elements (fencing devices) properly configured. | |||

*Errors appeared: check your cluster.conf.new syntax and parameters of the fencing devices. An incorrect password/hostname/username will make tests fail and will prevent the new configuration to take effect. It is recommended to previously check that fencing works by using the fence_ilo (or whatever you use) command from the CLI. | |||

=== Checking everything works === | |||

Create some virtual machines and/or containers in one of your nodes. Start them and enable HA | |||

*See [[High_Availability_Cluster#Enable_a_KVM_VM_or_a_Container_for_HA]] | |||

Now that everything is going smooth on your cluster, just power-off the node were machines are running (press power) or pull the network cables used for data and synchronization (keep that used for the fencing mechanism). From your web manager (connected to the other node), you can see how things evolve by clicking on the "healthy" node and looking at the scrolling "syslog" tab. It should detect the other node is down, create a new quorum and fence it. If fencing fails, you should re-check your fencing configuration as it MUST work in order to automatically restar machines. | |||

== Quorum Disk Configuration == | |||

=== Setup the Block Device === | |||

A small (about 10MB) block device needs to be shared from a third computer to the two Proxmox nodes. It is not necessary that this third computer provide a highly available device, simply mostly available would be good. In essence, any one of your three devices will be able to be down at a given time, one of your two nodes, or your block device. Having two down at the same time could cause problems. | |||

In this example, a simple LVM backed iSCSI target is shared from a CentOS 6 VM. Please consult this URL for more details: http://www.linuxjournal.com/content/creating-software-backed-iscsi-targets-red-hat-enterprise-linux-6. | |||

A short step-by-step: | |||

*On the third CentOS 6 system, you may need some free space in your Volume Group, if so, try shrinking the lv_swap like this: | |||

<pre> | |||

swapoff -a | |||

lvreduce -L -100M /dev/vg_SOMETHING/lv_swap | |||

mkswap /dev/vg_onsitebackup/lv_swap | |||

swapon -a | |||

</pre> | |||

Now you should have 100MB free in your Volume Group | |||

*Create the LV to be shared via iSCSI: | |||

<pre> | |||

lvcreate -n proxmox2quorumdisk -L 10M vg_SOMETHING | |||

lvdisplay # Should list it | |||

</pre> | |||

*Install the iSCSI software: | |||

<pre> | |||

yum -y install scsi-target-utils | |||

</pre> | |||

*Open port 3260 for the IPs of the clients `vi /etc/sysconfig/iptables`: | |||

<pre> | |||

# Example: | |||

-A RH-Firewall-1-INPUT -s IP -p tcp -m tcp --dport 3260 -j ACCEPT | |||

</pre> | |||

* Now load the rules: | |||

<pre> | |||

iptables-restore < /etc/sysconfig/iptables | |||

</pre> | |||

*Setup the iSCSI target `vi /etc/tgt/targets.conf` | |||

<pre> | |||

<target iqn.2012-06.com.domain.hostname:server.p1qdisk> | |||

backing-store /dev/vg_SOMETHING/proxmox2quorumdisk | |||

initiator-address IPofNODEA | |||

initiator-address IPofNODEB | |||

</target> | |||

</pre> | |||

*Start the tgtd service: | |||

<pre> | |||

service tgtd start | |||

chkconfig tgtd on | |||

tgt-admin -s | |||

# You should see your volume listed | |||

</pre> | |||

*Now you need to mount the iSCSI target on the Proxmox cluster members: | |||

<pre> | |||

aptitude install tgt | |||

vi /etc/iscsi/iscsid.conf # change node.startup to automatic | |||

/etc/init.d/open-iscsi restart | |||

</pre> | |||

*See what targets are available | |||

<pre> | |||

iscsiadm --mode discovery --type sendtargets --portal IPOFiSCSISERVER | |||

</pre> | |||

*Login, using the iqn and ip from the above command | |||

<pre> | |||

iscsiadm -m node -T iqn.BLAHBLAH -p IPOFiSCSISERVER -l | |||

</pre> | |||

*Find the name of the block device that was added | |||

<pre> | |||

dmesg | tail | |||

</pre> | |||

=== Create the Quorum Disk === | |||

See the following URL for more details: http://magazine.redhat.com/2007/12/19/enhancing-cluster-quorum-with-qdisk/. | |||

WARNING: Make sure you use the correct block device in the commands below. The output from `dmesg | tail` above should give you the device you are working with. I use the example of "/dev/sdc" below, but you must verify on your server which block device relates to the iSCSI devices. | |||

On one node: | |||

*Create the partition: | |||

<pre> | |||

fdisk /dev/sdc # assuming this is the block device from above | |||

p # verify the size of the disk | |||

n # new partition | |||

p # primary | |||

1 # 1st one | |||

w # Write it out | |||

</pre> | |||

*Create the quorum disk, the argument to -l is the label that you want to give to this qdisk: | |||

<pre> | |||

mkqdisk -c /dev/sdc1 -l proxmox1_qdisk | |||

</pre> | |||

On all other nodes: | |||

*Logout and login again to iscsi target to see the changes: | |||

<pre> | |||

iscsiadm --mode node --target <IQN> --portal x.x.x.x --logout | |||

iscsiadm --mode node --target <IQN> --portal x.x.x.x --login | |||

</pre> | |||

=== Add the qdisk to the Cluster === | |||

On one node: | |||

*Copy the cluster.conf file | |||

<pre> | |||

cp /etc/pve/cluster.conf /etc/pve/cluster.conf.new | |||

</pre> | |||

*Edit and make the appropriate changes to the file, a) increment the "config_version" by one number, b) remove 2node="1", c) add the quorumd definition and totem timeout, something like this: | |||

<pre> | |||

<cman expected_votes="3" keyfile="/var/lib/pve-cluster/corosync.authkey"/> | |||

<quorumd votes="1" allow_kill="0" interval="1" label="proxmox1_qdisk" tko="10"/> | |||

<totem token="54000"/> | |||

</pre> | |||

Activate: | |||

*Go to the web interface of Proxmox and select Datacenter in the upper left. Select the HA tab. You should see the changes that you just made in the interface. Verify in the diff section that you did increment the cluster version number. | |||

*Click Activate. | |||

*On the command line, run `pvecm s` and verify that both nodes are running the cluster version that you just pushed out. | |||

*On each node, one at a time: | |||

<pre> | |||

/etc/init.d/rgmanager stop # This will restart any VMs that are HA enabled onto the other node. | |||

/etc/init.d/cman reload # This will activate the qdisk | |||

</pre> | |||

*Verify that rgmanager is running, if not, start it | |||

Verify: | |||

*Run `clustat` on each node and you should see output similar to: | |||

<pre> | |||

Cluster Status for proxmox1 @ Thu Jun 28 12:23:10 2012 | |||

Member Status: Quorate | |||

Member Name ID Status | |||

------ ---- ---- ------ | |||

proxmox1a 1 Online, Local, rgmanager | |||

proxmox1b 2 Online, rgmanager | |||

/dev/block/8:33 0 Online, Quorum Disk | |||

</pre> | |||

*You can also run `pvecm s` again and you should see output similar to: | |||

<pre> | |||

--snip-- | |||

Nodes: 2 | |||

Expected votes: 3 | |||

Quorum device votes: 1 | |||

Total votes: 3 | |||

Node votes: 1 | |||

Quorum: 2 | |||

--snip-- | |||

</pre> | |||

=== Testing === | |||

Now is the time to run the cluster through a barrage of testing. First, ensure that you have some HA enabled VMs for TESTING running on each host before running each of these. Second, ensure that you have a remote power on method in case one of the nodes is powered off. | |||

Some example tests to try: | |||

*`ifdown eth1` | |||

*`/etc/init.d/networking stop` | |||

*`fence_node proxmox1a` | |||

*Restart the server that is serving your quorum disk to ensure that the cluster remains stable when the quorum device is gone. | |||

The VMs should only experience up to a maximum of 5 minutes of downtime when running these tests. | |||

== Problems and workarounds == | |||

=== DRBD split-brain === | |||

Under some cirumstances, data consistence between both nodes on DRBD partitions can be lost. In this case, the only efficient way to solve this is by manually intervention. The cluster administrator must decide which node data must be preserved and which one is discarded to gain data coherence again. Under DRBD, split-brain situations will probably occur when data connection is lost for longer than a few seconds. | |||

Lets consider a failure scenario where node A is the node where we had the most machines running and thus the one we want to conserve data. Therefore, node B changes made to DRBD partition while the split-brain situation lasted, must be discarded. We consider a Primary/Primary configuration for DRBD. The procedure to follow would be : | |||

*Go to a node B terminal (repeat for each DRBD resource where quorum is lost): | |||

<pre> | |||

drbdadm secondary [resource name] | |||

drbdadm disconnect [resource name] | |||

drbdadm -- --discard-my-data connect [resource name] | |||

</pre> | |||

*Go to a node A terminal (repeat for each DRBD resource where quorum is lost): | |||

<pre> | |||

drbdadm connect [resource name] | |||

</pre> | |||

*Go back to node B terminal (repeat for each DRBD resource where quorum is lost): | |||

<pre> | |||

drbdadm primary [resource name] | |||

</pre> | |||

=== Fencing keeps on failing === | |||

Fencing MUST work properly when a node fails to have HA working. Therefore, you must ensure that the fencing mechanism is reachable (there exists network connection) and that it is powered (if for testing purposes you just pulled the power cord, things will go wrong). Therefore, '''It is strongly encouraged to have network switches and power supplies correctly protected and replicated using SAIs (Switch Abstraction Interface) to prevent a lethal blackout that would make HA unusable'''. What is more, if the HA mechanism fails, you won't be able to manually restart affected VMs on the other host. | |||

[[Category:Archive]] | |||

Latest revision as of 15:39, 18 July 2019

Note: Article about the old stable Proxmox VE 3.x releases

Introduction

This article explores how to build a two-node cluster with HA enabled under Proxmox VE 3.4. HA should generally be deployed on at least three nodes to prevent erratic behavior and potentially fatal data inconsistency (for further information, look up "Quorum").

Although a third, shared quorum disk partition is recommended for two-node clusters, Proxmox VE 3.4 allows building the cluster without it. Let's see how.

Note: This is NOT possible in Proxmox VE 4.0 and is not an intended setup for any Proxmox VE release; the better approach is to add at least a third node to get HA.

System requirements

If you run HA, use only high-end server hardware with no single point of failure. This includes redundant disks (hardware RAID), redundant power supplies, UPS systems, and network bonding.

- Fully configured Proxmox_VE_2.0_Cluster, with 2 nodes.

- Shared storage (SAN for Virtual Disk Image Store for HA KVM). In this case, no external storage was used. Instead, a cheaper alternative (DRBD) was tested.

- Reliable network, suitably configured

- Fencing device(s): reliable and TESTED! We will use HP's iLO for this example.

What is DRBD used for?

For this test configuration, two DRBD resources were created: one for VM images and another for VM user data. Thanks to DRBD (if properly configured), a RAID-style mirror is created over the network (be aware that, although possible, using WAN links would mean high latencies). As VMs and data are replicated synchronously on both nodes, if one of them fails, the "dead" machines can be restarted on the other node without data loss.

Configuring Fencing

Fencing is vital for Proxmox to manage the loss of a node and thus provide effective HA. Fencing is the mechanism that prevents data inconsistencies between cluster nodes by ensuring that a node reported as "dead" is really down. If it is not, a reboot or power-off signal is sent to force it into a safe state and to prevent multiple instances of the same virtual machine from running concurrently on different nodes.

Many different methods can be used for fencing a node, so just a few changes would be needed to make this work under different scenarios.

First, log in to the CLI on any of your properly configured cluster machines.

Then, create a copy of cluster.conf (append .new to its name):

cp /etc/pve/cluster.conf /etc/pve/cluster.conf.new

Now edit cluster.conf.new. Near the top you will see this:

<cluster alias="hpiloclust" config_version="12" name="hpiloclust">

Be sure to increase the "config_version" number each time you plan to apply a new configuration, as this is the internal mechanism the cluster configuration tools use to detect changes.
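As a rough illustration of the version bump, the sketch below increments the attribute with sed. The helper name bump_config_version is hypothetical (it is not a Proxmox tool), and it assumes the attribute appears exactly once, written as config_version="N" as in the line shown above. It prints the result instead of editing the file in place, so the output can be reviewed first.

```shell
#!/bin/sh
# Sketch only: print FILE with its config_version attribute increased by one.
# bump_config_version is a hypothetical helper, not a Proxmox tool.
# Assumes the attribute appears once, written as config_version="N".
bump_config_version() {
    file=$1
    current=$(sed -n 's/.*config_version="\([0-9][0-9]*\)".*/\1/p' "$file" | head -n 1)
    if [ -z "$current" ]; then
        echo "no config_version found in $file" >&2
        return 1
    fi
    next=$((current + 1))
    sed "s/config_version=\"$current\"/config_version=\"$next\"/" "$file"
}

# Demo on a throwaway file rather than the real /etc/pve/cluster.conf.new.
demo=$(mktemp)
echo '<cluster alias="hpiloclust" config_version="12" name="hpiloclust">' > "$demo"
bump_config_version "$demo"   # prints the line with config_version="13"
rm -f "$demo"
```

Redirecting the output to a new file and comparing it with the original before copying it into place keeps the change easy to inspect.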

To be able to create and manage a two-node cluster, edit the cman configuration part to include this:

<cman two_node="1" expected_votes="1"> </cman>

Now, add the available fencing devices to the configuration file by adding these lines (right after </clusternodes> is fine):

<fencedevices>

<fencedevice agent="fence_ilo" hostname="nodeA.your.domain" login="hpilologin" name="fenceNodeA" passwd="hpilopwordA"/>

<fencedevice agent="fence_ilo" hostname="nodeB.your.domain" login="hpilologin" name="fenceNodeB" passwd="hpilopwordB"/>

</fencedevices>

If you prefer to use IPs instead of host names, replace:

hostname="nodeA"

with

ipaddr="192.168.1.2"

or whatever IP belongs to the iLO interface on that node.

Next, you need to tell the cluster which fencing device fences which host. To do so, add the following inside each node's "<clusternode></clusternode>" tags, adapted to that node (the "method" name does not matter; choose whatever you like):

<clusternode name="nodeA.your.domain" nodeid="1" votes="1">

<fence>

<method name="1">

<device name="fenceNodeA" action="reboot"/>

</method>

</fence>

</clusternode>

<clusternode name="nodeB.your.domain" nodeid="2" votes="1">

<fence>

<method name="1">

<device name="fenceNodeB" action="reboot"/>

</method>

</fence>

</clusternode>

Choose the "action" you prefer. "reboot" fences the node and reboots it so that it can come back in a proper state (i.e. once the network is operational again). "off" can also be used, but it requires human intervention to boot the node again.

So far, so good. The required fencing is now configured and must be tested before entering a production environment.
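One easy mistake in the configuration above is a `<device name="...">` that does not match any declared `<fencedevice>`. The sketch below is a hypothetical sanity check (missing_fence_devices is not part of any cluster tool). It assumes one XML element per line with double-quoted attributes, as in the examples in this article, and is not a real XML parser.

```shell
#!/bin/sh
# Sketch only: list <device name="..."> references that have no matching
# <fencedevice ... name="..."> declaration in a cluster.conf-style file.
# missing_fence_devices is a hypothetical helper. It assumes one element per
# line with double-quoted attributes; it is not a full XML parser.
missing_fence_devices() {
    file=$1
    # Names declared in <fencedevice .../> elements (the leading space in
    # ' name=' keeps hostname="..." from matching).
    declared=$(grep '<fencedevice ' "$file" | sed -n 's/.* name="\([^"]*\)".*/\1/p')
    # Names referenced from <device .../> elements inside <fence> blocks.
    grep '<device ' "$file" | sed -n 's/.* name="\([^"]*\)".*/\1/p' | sort -u |
    while read -r ref; do
        echo "$declared" | grep -qxF "$ref" || echo "$ref"
    done
}

# Demo on a throwaway file built from the examples in this article.
demo=$(mktemp)
cat > "$demo" <<'EOF'
<clusternode name="nodeA.your.domain" nodeid="1" votes="1">
<fence>
<method name="1">
<device name="fenceNodeA" action="reboot"/>
</method>
</fence>
</clusternode>
<fencedevices>
<fencedevice agent="fence_ilo" hostname="nodeA.your.domain" login="hpilologin" name="fenceNodeA" passwd="hpilopwordA"/>
</fencedevices>
EOF
missing_fence_devices "$demo"   # prints nothing: every reference is declared
rm -f "$demo"
```

Any name it prints is referenced by a node but never declared, which would leave that node without a usable fence method.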

Applying changes

Once cluster.conf.new has been modified and saved, it is time to apply the changes and propagate them across all cluster nodes. This is done through the GUI, connected to the machine where the file was modified.

On the left of the screen, select your cluster. Then, at the top right, select the tab named "HA".

The pending changes will now appear at the bottom of the screen. If everything is correct, click "commit" and acknowledge the operation. Two scenarios are now possible:

- Everything goes smoothly: no errors were detected, changes were applied and the new configuration was propagated across all cluster nodes. Now, the configuration tree should show the new elements (fencing devices) properly configured.

- Errors appeared: check the syntax of your cluster.conf.new and the parameters of the fencing devices. An incorrect password, hostname or username will make the tests fail and will prevent the new configuration from taking effect. It is recommended to check beforehand that fencing works by running the fence_ilo command (or whichever agent you use) from the CLI.
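A minimal sketch of such a CLI check follows. It only builds and prints the command, so nothing is fenced by running it. The helper ilo_status_cmd is hypothetical, and the `-a` (address), `-l` (login), `-p` (password) and `-o` (action) options are assumed from the common fence-agents command line; confirm them with `fence_ilo -h` on your version before relying on them. The address and credentials are the placeholders used earlier in this article.

```shell
#!/bin/sh
# Sketch only: build (and only print) a status query for one iLO.
# ilo_status_cmd is a hypothetical helper; the -a/-l/-p/-o options are assumed
# from the common fence-agents command line, so check `fence_ilo -h` first.
ilo_status_cmd() {
    printf 'fence_ilo -a %s -l %s -p %s -o status\n' "$1" "$2" "$3"
}

# Placeholder address and credentials from the examples in this article.
ilo_status_cmd 192.168.1.2 hpilologin hpilopwordA
```

A "status" action only queries the power state, which makes it a safer first test than "reboot" or "off". Note that a password given on the command line is visible to other users in the process list while the command runs.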

Checking everything works

Create some virtual machines and/or containers on one of your nodes. Start them and enable HA (see High_Availability_Cluster#Enable_a_KVM_VM_or_a_Container_for_HA).

Now that everything is running smoothly on your cluster, power off the node where the machines are running (press the power button) or pull the network cables used for data and synchronization (keep the one used for the fencing mechanism). From your web manager (connected to the other node), you can watch how things evolve by clicking on the "healthy" node and looking at the scrolling "syslog" tab. It should detect that the other node is down, form a new quorum and fence it. If fencing fails, re-check your fencing configuration, as it MUST work for machines to be restarted automatically.

Quorum Disk Configuration

Setup the Block Device

A small (about 10MB) block device needs to be shared from a third computer to the two Proxmox nodes. This third computer does not need to provide a highly available device; a mostly available one is good enough. In essence, any one of your three devices (either of your two nodes, or your block device) can be down at a given time. Having two down at the same time could cause problems.

In this example, a simple LVM-backed iSCSI target is shared from a CentOS 6 VM. Please consult this URL for more details: http://www.linuxjournal.com/content/creating-software-backed-iscsi-targets-red-hat-enterprise-linux-6.

A short step-by-step:

- On the third (CentOS 6) system, you may need some free space in your Volume Group. If so, try shrinking lv_swap like this:

swapoff -a

lvreduce -L -100M /dev/vg_SOMETHING/lv_swap

mkswap /dev/vg_SOMETHING/lv_swap

swapon -a

Now you should have 100MB free in your Volume Group.

- Create the LV to be shared via iSCSI:

lvcreate -n proxmox2quorumdisk -L 10M vg_SOMETHING

lvdisplay # Should list it

- Install the iSCSI software:

yum -y install scsi-target-utils

- Open port 3260 for the IPs of the clients by editing the firewall rules (`vi /etc/sysconfig/iptables`):

# Example:

-A RH-Firewall-1-INPUT -s IP -p tcp -m tcp --dport 3260 -j ACCEPT

- Now load the rules:

iptables-restore < /etc/sysconfig/iptables

- Set up the iSCSI target (`vi /etc/tgt/targets.conf`):

<target iqn.2012-06.com.domain.hostname:server.p1qdisk>

backing-store /dev/vg_SOMETHING/proxmox2quorumdisk

initiator-address IPofNODEA

initiator-address IPofNODEB

</target>

- Start the tgtd service:

service tgtd start

chkconfig tgtd on

tgt-admin -s

# You should see your volume listed

- Now you need to attach the iSCSI target on the Proxmox cluster members:

aptitude install tgt

vi /etc/iscsi/iscsid.conf # change node.startup to automatic

/etc/init.d/open-iscsi restart

- See what targets are available

iscsiadm --mode discovery --type sendtargets --portal IPOFiSCSISERVER

- Login, using the iqn and ip from the above command

iscsiadm -m node -T iqn.BLAHBLAH -p IPOFiSCSISERVER -l

- Find the name of the block device that was added

dmesg | tail

Create the Quorum Disk

See the following URL for more details: http://magazine.redhat.com/2007/12/19/enhancing-cluster-quorum-with-qdisk/.

WARNING: Make sure you use the correct block device in the commands below. The output from `dmesg | tail` above should give you the device you are working with. I use the example of "/dev/sdc" below, but you must verify on your server which block device relates to the iSCSI devices.

On one node:

- Create the partition:

fdisk /dev/sdc # assuming this is the block device from above

p # verify the size of the disk

n # new partition

p # primary

1 # 1st one

w # Write it out

- Create the quorum disk. The argument to -l is the label that you want to give to this qdisk:

mkqdisk -c /dev/sdc1 -l proxmox1_qdisk

On all other nodes:

- Log out of the iSCSI target and log in again to see the changes:

iscsiadm --mode node --target <IQN> --portal x.x.x.x --logout

iscsiadm --mode node --target <IQN> --portal x.x.x.x --login

Add the qdisk to the Cluster

On one node:

- Copy the cluster.conf file

cp /etc/pve/cluster.conf /etc/pve/cluster.conf.new

- Edit the file and make the appropriate changes: a) increment "config_version" by one, b) remove two_node="1", c) add the quorumd definition and the totem timeout, something like this:

<cman expected_votes="3" keyfile="/var/lib/pve-cluster/corosync.authkey"/>

<quorumd votes="1" allow_kill="0" interval="1" label="proxmox1_qdisk" tko="10"/>

<totem token="54000"/>
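To make the timing values above easier to read, the sketch below does the arithmetic. It assumes, as an interpretation of the quorumd attributes rather than something stated in this article, that interval is a number of seconds between qdisk update cycles and that tko is the number of missed cycles after which a node is declared dead; check qdisk(5) for your version. The helper name qdisk_timeout_ms is hypothetical.

```shell
#!/bin/sh
# Sketch only: compare the qdisk eviction window with the totem token timeout.
# Assumption (verify against qdisk(5)): interval is in seconds and tko is the
# number of missed cycles tolerated. qdisk_timeout_ms is a hypothetical helper.
qdisk_timeout_ms() {
    # $1 = interval in seconds, $2 = tko (missed cycles)
    echo $(( $1 * $2 * 1000 ))
}

# Values taken from the example configuration above.
interval=1
tko=10
token=54000
echo "qdisk timeout: $(qdisk_timeout_ms "$interval" "$tko") ms, totem token: $token ms"
```

Under that assumption, the example leaves the totem token (54 seconds) well above the qdisk window (10 seconds).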

Activate:

- Go to the Proxmox web interface and select Datacenter in the upper left. Select the HA tab. You should see the changes that you just made. Verify in the diff section that you incremented the "config_version" number.

- Click Activate.

- On the command line, run `pvecm s` and verify that both nodes are running the cluster version that you just pushed out.

- On each node, one at a time:

/etc/init.d/rgmanager stop # This will restart any VMs that are HA enabled onto the other node.

/etc/init.d/cman reload # This will activate the qdisk

- Verify that rgmanager is running; if not, start it.

Verify:

- Run `clustat` on each node and you should see output similar to:

Cluster Status for proxmox1 @ Thu Jun 28 12:23:10 2012

Member Status: Quorate

Member Name ID Status

------ ---- ---- ------

proxmox1a 1 Online, Local, rgmanager

proxmox1b 2 Online, rgmanager

/dev/block/8:33 0 Online, Quorum Disk

- You can also run `pvecm s` again and you should see output similar to:

--snip--

Nodes: 2

Expected votes: 3

Quorum device votes: 1

Total votes: 3

Node votes: 1

Quorum: 2

--snip--
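The vote counts in this output can be checked with a little arithmetic. The sketch below assumes the usual majority rule, quorum = floor(expected_votes / 2) + 1, which agrees with the "Quorum: 2" line for three expected votes. The helper names quorum_needed and is_quorate are hypothetical.

```shell
#!/bin/sh
# Sketch only: majority-quorum arithmetic for the vote counts shown above.
# Assumes quorum = floor(expected_votes / 2) + 1; helper names are hypothetical.
quorum_needed() {
    echo $(( $1 / 2 + 1 ))
}

# is_quorate VOTES_PRESENT EXPECTED_VOTES: succeeds if enough votes remain.
is_quorate() {
    [ "$1" -ge "$(quorum_needed "$2")" ]
}

expected=3   # two nodes with one vote each, plus one quorum disk vote
echo "quorum needed: $(quorum_needed "$expected")"
for votes in 3 2 1; do
    if is_quorate "$votes" "$expected"; then
        state="quorate"
    else
        state="not quorate"
    fi
    echo "$votes votes present: $state"
done
```

With three expected votes, losing any single voter (one node or the quorum disk) still leaves two votes, while losing two voters drops below quorum. This matches the earlier remark that any one of the three devices may be down at a given time.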

Testing

Now is the time to run the cluster through a barrage of testing. First, ensure that you have some HA enabled VMs for TESTING running on each host before running each of these. Second, ensure that you have a remote power on method in case one of the nodes is powered off.

Some example tests to try:

- `ifdown eth1`

- `/etc/init.d/networking stop`

- `fence_node proxmox1a`

- Restart the server that is serving your quorum disk to ensure that the cluster remains stable when the quorum device is gone.

The VMs should experience at most 5 minutes of downtime when running these tests.

Problems and workarounds

DRBD split-brain

Under some circumstances, data consistency between the two nodes on DRBD partitions can be lost. In this case, the only effective solution is manual intervention. The cluster administrator must decide which node's data is preserved and which node's data is discarded in order to restore consistency. Under DRBD, split-brain situations will probably occur when the data connection is lost for longer than a few seconds.

Let's consider a failure scenario where node A was running most of the machines and is therefore the node whose data we want to keep. Changes made by node B to the DRBD partition while the split-brain lasted must therefore be discarded. We assume a Primary/Primary configuration for DRBD. The procedure is:

- Go to a node B terminal (repeat for each DRBD resource affected by the split-brain):

drbdadm secondary [resource name]

drbdadm disconnect [resource name]

drbdadm -- --discard-my-data connect [resource name]

- Go to a node A terminal (repeat for each DRBD resource affected by the split-brain):

drbdadm connect [resource name]

- Go back to the node B terminal (repeat for each DRBD resource affected by the split-brain):

drbdadm primary [resource name]

Fencing keeps on failing

For HA to work, fencing MUST succeed when a node fails. You must therefore ensure that the fencing device is reachable (it has a network connection) and that it is powered (if, for testing purposes, you just pull the power cord, things will go wrong, because the iLO loses power along with the node and cannot confirm the fence). It is strongly encouraged to have network switches and power supplies properly protected and made redundant using UPSs (uninterruptible power supplies) to prevent a lethal blackout that would make HA unusable. What is more, if the HA mechanism fails, you won't be able to manually restart the affected VMs on the other host.