=== Post Migration === | === Automatic Import of Full VM === | ||

Proxmox VE provides an integrated VM importer using the storage plugin system for native integration into the API and web-based user interface. | |||

You can use this to import the VM as a whole, with most of its config mapped to Proxmox VE's config model and reduced downtime. | |||

==== Supported Import Sources ==== | |||

At time of writing (November 2024), VMware ESXi and OVAs/OVFs are supported as import source, however, further sources may be added as well using this modern mechanism in the future. | |||

Import was tested from ESXi version 6.5 up to version 8.0. | |||

Importing a VM with disks backed by a VMware vSAN storage does not work. A workaround may be to move the hard disks of the VM to another storage first. | |||

Importing from a datastore with special characters like '+' might not work. A workaround may be to rename the datastore. | |||

Importing a VM from VMware ESXi can be significantly slower if the VM has snapshots. | |||

Encrypted VM disks, for example via a Storage Policy, cannot be imported. Remove the encryption policy to remove the encryption first. | |||

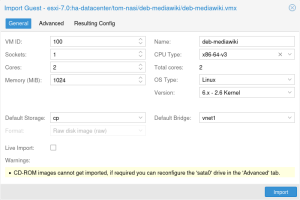

[[File:Gui-import-wizard-general.png|thumb]] | |||

==== Automatic ESXi Import: Step by Step ====

To import VMs from an ESXi instance, you can follow these steps:

# Make sure that your Proxmox VE is on version 8 (or above) and has the latest available system updates applied.
# Add an "ESXi" import-source storage, through the ''Datacenter -> Storage -> Add'' menu.
#: Enter the domain or IP address and the credentials of an admin account here.
#: If your ESXi instance has a self-signed certificate, you need to add the CA to your system trust store or check the ''Skip Certificate Verification'' checkbox.
#: Note: While one can also import through a vCenter instance, doing so will dramatically reduce performance.
# Select the storage in the resource tree, which is located on the left.
# Check the content of the import panel to verify that you can see all available VMs.
# Select a VM you want to import and click on the <code>Import</code> button at the top.
# Select at least the target storage for the VM's disks and the network bridge that the VM's network device should connect to.
# Use the <code>Advanced</code> tab for more fine-grained selection, for example:
#* choose a new ISO image for CD-ROM drives
#* select different storage targets to use for each disk if there are multiple ones
#* configure different network hardware models or bridges for multiple network devices
#* disable importing some disks, CD-ROM drives or network devices
#: Please note that you can edit and extend even more options and details of the VM hardware after creation.
# Optionally check the <code>Resulting Config</code> tab for the full list of key value pairs that will be used to create the VM.
# Make sure you have completed the [[#Preparation|preparations for the VM]], then power down the VM on the source side to ensure a consistent state.
# Start the actual import on the Proxmox VE side.
# Boot the VM and then check if any [[#Post Migration|post-migration changes]] are required.

==== Live-Import ====

Proxmox VE also supports live import, which means the VM is started during the import process. This is achieved by importing the hard disk data required by the running guest operating system at an early stage and importing the remaining data asynchronously in the background.

This option reduces downtime. Please note that the VM on the ESXi side still has to be switched off, so there will be some downtime.

The IO performance will be slightly reduced initially. How big the impact is depends on many variables, such as source and target storage performance and network bandwidth, but also the guest OS and the workload running in the VM on start-up. In most cases, live-import reduces the total downtime of a service significantly.

'''Note:''' should the import fail, all data written since the start of the import will be lost. That is why we recommend testing this mechanism on a test VM first and avoiding it in networks with low bandwidth and/or high error rates.

==== Considerations for Mass Import ====

When importing many virtual machines, one might be tempted to start as many imports in parallel as possible. However, while the FUSE filesystem that provides the interface between Proxmox VE and VMware ESXi can handle multiple imports, it is still recommended to serialize import tasks as much as possible.

The main limiting factors are:

* The connection limit of the ESXi API. The ESXi API has a relatively low limit on the number of available connections. Once the limit is reached, clients are blocked for roughly 30 seconds.
*: Starting multiple imports at the same time means that the ESXi API is more likely to be overloaded. In that case, it will start blocking all requests, including all other running imports, which can result in hanging IO for guests that get live-imported.
*: To reduce the possibility of an overloaded ESXi, the Proxmox <code>esxi-folder-fuse</code> service limits parallel connections to four and serializes retries of requests after getting rate-limited.
*: If you still see rate-limiting or <code>503 Service Unavailable</code> errors, <i>you can try to adapt the session limit options</i>: either increase the limit significantly or set it to <code>0</code> to disable it completely. Depending on your ESXi version, you can change this as follows:
*:* ESXi 7.0: Adapt the <code>Config.HostAgent.vmacore.soap.maxSessionCount</code> option, located in the web UI under Host -> Manage -> System -> Advanced Settings.
*:* ESXi 6.5/6.7: Set the <code><sessionTimeout></code> and <code><maxSessionCount></code> values to <code>0</code> in the <code>/etc/vmware/hostd/config.xml</code> configuration and either restart <i>hostd</i> (<code>/etc/init.d/hostd restart</code>) or reboot your ESXi.
*:*: If you cannot find those options, you can add them by inserting <code><soap><sessionTimeout>0</sessionTimeout><maxSessionCount>0</maxSessionCount></soap></code> inside the <code><config></code> section (restart <i>hostd</i> afterwards).
* The memory usage of the read-ahead cache, used to cope with small read operations. Each disk has a cache consisting of 8 blocks of 128 MiB each.
*: Running many import tasks at the same time could lead to out-of-memory situations on your Proxmox VE host.

In general, it is advised to limit imports such that no more than four VM disks are imported at the same time. This could mean four VMs with one disk each, or one VM with four disks. Depending on your specific setup, it might only be possible to run one import at a time, or it might be feasible to run several imports in parallel.
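The two limits described above (8 cache blocks of 128 MiB per disk, and at most four disks imported at once) can be turned into a rough planning aid. The following Python sketch is only an illustration under those stated numbers; the function names and the greedy grouping strategy are assumptions made for this example and are not part of Proxmox VE.

```python
# Figures taken from the text above; everything else is illustrative.
CACHE_BLOCKS_PER_DISK = 8
CACHE_BLOCK_MIB = 128
MAX_PARALLEL_DISKS = 4


def cache_mib(disks: int) -> int:
    """Estimated read-ahead cache memory (MiB) for `disks` disks imported at once."""
    return disks * CACHE_BLOCKS_PER_DISK * CACHE_BLOCK_MIB


def plan_batches(vm_disks: dict[str, int], limit: int = MAX_PARALLEL_DISKS) -> list[list[str]]:
    """Greedily group VMs so that each batch imports at most `limit` disks.

    A VM with more disks than `limit` cannot be split and gets a batch of its own.
    """
    batches: list[list[str]] = []
    current: list[str] = []
    used = 0
    for vm, disks in vm_disks.items():
        if current and used + disks > limit:
            batches.append(current)
            current, used = [], 0
        current.append(vm)
        used += disks
    if current:
        batches.append(current)
    return batches


if __name__ == "__main__":
    # Hypothetical VM names mapped to their number of disks.
    vms = {"web": 1, "db": 2, "files": 4, "mail": 1}
    for batch in plan_batches(vms):
        disks = sum(vms[name] for name in batch)
        print(batch, f"{cache_mib(disks)} MiB cache")
```

Each batch would be imported only after the previous one has finished. Whether a smaller limit is needed depends on the free memory of the Proxmox VE host and on how the ESXi API reacts.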

=== Manual Migration ===

Several methods are possible to migrate the disks of the powered-down source VM to the new target VM. They will have different downtimes. For large VM disks, it may be worth the effort to choose a more involved method, as the VM downtime can be reduced considerably.

==== Import OVF ====

If the VM was exported as OVF, you can import it either with the [https://pve.proxmox.com/pve-docs/pve-admin-guide.html#qm_import_virtual_machines import function in the GUI] or with the command <code>qm importovf</code>.

One way to export a VM from VMware, even directly to the Proxmox VE host, is via the <code>ovftool</code> which can be [https://developer.vmware.com/web/tool/4.6.2/ovf-tool/ downloaded] from VMware. Make sure the version is new enough to support the ESXi version you use.

* Power on the VM in Proxmox VE. The VM should boot normally.
* While the VM is running, move the disk to the target storage. For this, select it in the hardware panel of the VM and then use the "Disk Action -> Move Storage" menu. The "Delete source" option can be enabled. It will only remove the edited vmdk file.

=== Post Migration ===

* Update network settings. The name of the network adapter will most likely have changed.
* Install missing drivers. This is mostly relevant for Windows VMs; [https://pve.proxmox.com/wiki/Windows_VirtIO_Drivers download and attach the VirtIO ISO]. How to switch the boot disk to VirtIO after the driver is installed [https://pve.proxmox.com/wiki/Paravirtualized_Block_Drivers_for_Windows is explained here].

== Getting Help ==

Revision as of 16:40, 22 November 2024

This article aims to assist users in transitioning to Proxmox Virtual Environment. The first part explains the core concepts of Proxmox VE, while the second part outlines several methods for migrating VMs to Proxmox VE. Although it was written with VMware as the source in mind, most sections should apply to other source hypervisors as well.

Concepts

Architecture

Proxmox VE is based on Debian GNU/Linux and uses a customized Linux kernel based on Ubuntu kernels. The Proxmox VE specific software packages are provided by Proxmox Server Solutions.

Standard configuration files and mechanisms are used whenever possible, such as the network configuration located at /etc/network/interfaces.

Proxmox VE provides multiple options for managing a node. The central web-based graphical user interface (GUI), command-line interface (CLI) tools, and REST API allow configuring node resources, virtual machines, containers, storage access, disk management, Software Defined Networking (SDN), firewalls, and more.

Proxmox VE specific configuration files (storage, guest config, cluster, …) are located in the /etc/pve directory. It is backed by the Proxmox Cluster File System (pmxcfs) which synchronizes the contents across all nodes in a cluster.

Virtual guests, such as virtual machines (VMs) or LXC containers, are identified by a unique numerical ID called the VMID. This ID is used in many places, such as the name of the configuration file or disk image name. Lower and upper limits for VMIDs can be defined in a Proxmox VE cluster under Datacenter -> Options -> Next free VMID range. VMIDs can also be manually assigned outside these limits when a new VM is created.

License

The Proxmox VE source code is freely available, released under the GNU Affero General Public License, v3 (GNU AGPLv3). Other license types for Proxmox VE are not available; for example, OEM licensing is not an option. However, you can bundle Proxmox VE with other software packages as long as the terms of AGPLv3 are closely followed.

Subscriptions

Proxmox Server Solutions, the company behind the Proxmox VE project, offers additional services that are available with a subscription. While the Proxmox VE solution is entirely free/libre open-source software (FLOSS) and all its features are accessible at no cost, the subscription provides access to enterprise-class services:

- A subscription gives access to the Enterprise repository, which is the default, stable, and recommended repository for Proxmox VE. This special repository includes only updates which are extensively tested and thus considered stable, ensuring optimal system performance and reliability. It is recommended for production environments.

- The subscription levels Basic, Standard or Premium include enterprise-grade technical support. Technical support is available via the Proxmox Customer Portal, where you get a direct channel to the engineers developing Proxmox VE.

In a Proxmox VE cluster, each node requires its own subscription, and all nodes need to have the same subscription level.

For more details, see the Proxmox Server Solutions website.

Cluster

Proxmox VE clusters are multi-master clusters that operate via a quorum (majority of votes). Each node contributes one vote to the cluster. At least 3 votes are needed for a stable cluster, meaning that a Proxmox VE cluster should preferably consist of at least 3 nodes. For two-node clusters, the QDevice mechanism can be used to provide an additional vote.
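The majority rule can be illustrated with a small calculation. The following Python sketch is not Proxmox VE or Corosync code; the function names are made up for this example, and it assumes that every node contributes exactly one vote and that an optional QDevice contributes one additional vote and stays reachable.

```python
def votes_needed(total_votes: int) -> int:
    """Smallest number of votes that forms a strict majority."""
    return total_votes // 2 + 1


def tolerated_failures(nodes: int, qdevice: bool = False) -> int:
    """Number of node failures after which the remaining votes are still a majority.

    Assumption for this sketch: one vote per node, plus one vote for a
    QDevice that remains reachable while nodes fail.
    """
    total = nodes + (1 if qdevice else 0)
    return total - votes_needed(total)


if __name__ == "__main__":
    for nodes, qdevice in [(2, False), (2, True), (3, False), (5, False)]:
        print(f"nodes={nodes} qdevice={qdevice} "
              f"tolerated failures={tolerated_failures(nodes, qdevice)}")
```

Under these assumptions, a two-node cluster without a QDevice cannot lose any node without losing quorum, which is why three votes are the practical minimum.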

The management of the cluster can be performed by connecting to any of the cluster nodes, as each node provides the web GUI and CLI tools.

The Proxmox VE cluster communication uses the Corosync protocol, which can support up to 8 networks and automatically switches between them if one becomes unusable.

Note: For the Proxmox VE cluster network (Corosync), it is critical to have a reliable and stable connection with low latency. To avoid congestion from other services, it is considered best practice to set up a dedicated physical network for Corosync. Additional redundant Corosync networks should be configured to ensure connectivity in case the dedicated network experiences issues.

Network

Proxmox VE utilizes the Linux network stack. The vmbr{X} interfaces are the node's virtual switches. These interfaces are technically Linux bridges and can handle VLANs.

Note: When using Software Defined Networking (SDN), the names for the virtual switches can be defined very freely.

Link aggregations (LAGs) are called network bonds.

The different network layers can be stacked. For instance, two physical interfaces can be combined into a bond, which can then be used as bridge-port for a vmbr.

VLANs can be configured in multiple ways:

- per guest NIC

- in any layer of the network stack, using the dot notation. For example, a bridge-port for a vmbr can be bond0.20 to use VLAN 20 on bond0

- dedicated VLAN interface (that could be used as the bridge-port for a vmbr interface)

- cluster-wide, using SDN VLAN zones

For more complex network setups, please refer to the Software Defined Networking (SDN) documentation.
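The dot notation for VLANs mentioned above encodes the parent interface and the VLAN tag in one name. As a minimal sketch, the Python function below splits such a name into its two parts. The function name is hypothetical, and the check for the range 1 to 4094 reflects the usable IEEE 802.1Q VLAN IDs rather than anything specific to Proxmox VE.

```python
def parse_vlan_interface(name: str) -> tuple[str, int]:
    """Split a dot-notation interface name like 'bond0.20' into (parent, VLAN ID)."""
    parent, sep, tag = name.rpartition(".")
    if not sep or not parent or not tag.isdecimal():
        raise ValueError(f"not a dot-notation VLAN interface: {name!r}")
    vlan_id = int(tag)
    if not 1 <= vlan_id <= 4094:
        raise ValueError(f"VLAN ID out of range: {vlan_id}")
    return parent, vlan_id


if __name__ == "__main__":
    parent, vlan_id = parse_vlan_interface("bond0.20")
    print(f"VLAN {vlan_id} on {parent}")
```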

Note: Although Open vSwitch (OVS) can be used, it is rarely necessary. Historically, the Linux bridge lacked some features, but it has caught up with OVS in most areas, making it hardly necessary to use OVS.

Storage

Proxmox VE utilizes storage plugins. The storage plugins interact on a higher level with Proxmox VE (for example: create & delete disk images, take snapshot, …) and handle the low-level implementation for the individual storage types. Proxmox VE comes with a number of native storage plugins. Additionally, it is possible for third parties to provide their plugins to integrate with Proxmox VE.

Storages are defined at the cluster level. Content types define what a storage can be used for. The documentation has more details.

File or block level storage

Storage falls into roughly two categories: file level storage and block level storage.

- On a file level storage, Proxmox VE expects a specific directory structure and will create it if it is not present. Disk images are stored in files, and various file formats can be selected for VM disks, although qcow2 is preferred for full functionality. A file level storage can also store additional content types, such as ISO images, container templates or backups. Examples: Local directory, NFS, CIFS, …

- On block level storage, the underlying storage layer provides block devices (similar to actual disks) which are used for disk images. Functionality like snapshots is provided by the storage layer itself. Examples: ZFS, Ceph RBD, thin LVM, …

Local storage

A local storage is considered to be available on all nodes but physically different on each node. This means that a local storage can have different content on each node. In case some nodes do not have the local storage present, use the list of nodes in the storage configuration to restrict the storage to the nodes on which it is present.

Examples of local storage types are: ZFS, Directory*, LVM*, BTRFS.

Note: *Directory and LVM (non-thin) are usually local, but can be shared in some circumstances.

A shared storage is considered to be available with the same contents on all nodes. Some storage types are shared by default, for example any network share or Ceph RBD.

Some storage types are local by default, but can be marked as shared. The shared flag tells the Proxmox VE cluster that an external mechanism ensures that all nodes access the same data.

In case you want to use a network share or clustered file system that is not supported by the native storage plugins, you can install the necessary packages manually (Proxmox VE is based on Debian) and make the file system available at some mount path. Then, you can add a directory storage for the mount path in Proxmox VE and mark it as shared.

Note: Add the is_mountpoint flag to such a directory storage so that Proxmox VE only uses it once it detects a file system mounted at the specified path: pvesm set {storage} --is_mountpoint 1. Without the flag, Proxmox VE may write to the file system of the host OS if the mount is not available.
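The idea behind this flag can be shown with a short Python sketch: only treat a directory as usable when a file system is actually mounted at that exact path. This illustrates the concept only and is not how Proxmox VE implements the check; the function name is made up for this example.

```python
import os
import tempfile


def is_usable_mountpoint(path: str) -> bool:
    """Return True only if `path` is a directory with a file system mounted on it."""
    return os.path.isdir(path) and os.path.ismount(path)


if __name__ == "__main__":
    # A freshly created temporary directory is an ordinary directory,
    # so writing to it would end up on the file system that contains it.
    with tempfile.TemporaryDirectory() as plain_dir:
        print(plain_dir, is_usable_mountpoint(plain_dir))
    # The root directory is always a mount point.
    print("/", is_usable_mountpoint("/"))
```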

Another common use case is to have iSCSI or Fibre Channel (FC) LUNs that should be accessed by all nodes in the cluster. There are currently two options supported out of the box:

- Create one LUN per disk image:

  - High management overhead when creating new VMs & many LUNs

  - Snapshots need to be done on the iSCSI target

- Set up LVM on top of one large LUN:

  - Easier management as creating a disk image is handled by Proxmox VE alone

  - No snapshots, see Alternatives to Snapshots

There are often multiple redundant connections to the iSCSI target or FC LUN. In this case, multipath should be configured as well. See ISCSI_Multipath for a generic tutorial. Consult the documentation of the storage box vendor for any specifics in the multipath configuration. Ideally, the storage box vendor would provide a storage plugin.

Alternatives to Snapshots

If an existing iSCSI or FC storage needs to be repurposed for a Proxmox VE cluster, the following alternative approaches might fulfil the same goal.

- Use a network share (NFS or CIFS) instead of iSCSI. When using qcow2 files for VM disk images, snapshots can be used.

- Rethink the overall strategy; if you plan to use a Proxmox Backup Server, then you could use backups and live restore of VMs instead of snapshots.

  Backups of running VMs will be quick thanks to dirty bitmap (aka changed block tracking) and the downtime of a VM on restore can also be minimized if the live-restore option is used, where the VM is powered on while the backup is restored.

High Availability

HA Overview

Here is how high availability (HA) works in a Proxmox VE cluster:

Every node running HA guests reports its presence to the cluster every 10 seconds.

If a node fails or loses network connectivity, the rest of the cluster waits for some time (~2 minutes) in case the node comes back (for example if the network problems are quickly resolved).

If the node does not come back, the HA guests that were running on the failed node are recovered (started) in the remaining cluster. This requires that all resources for the guest are available. This primarily means disk images must be available on a shared storage. Passed-through devices must also be considered for the recovery to work.

Once HA guests are running on a node (Datacenter -> HA shows the node as "active"), a stable Corosync connection is important! Therefore, it is best practice to have at least one dedicated network just for Corosync. Nodes use the Corosync network to communicate with the Proxmox VE cluster and report their presence. You can configure additional Corosync links during cluster creation or afterwards. Corosync can switch between multiple networks and will do so if one of the networks becomes unusable.

If a node is running but loses the Corosync connection to the quorate (majority) part of the cluster, it will wait for about a minute to see if it can reconnect. If it can, everything is fine. If not, the node will fence itself. This is basically the same as hitting the reset button on the machine. This is necessary to ensure that the HA guests on that node are definitely powered off and there is no risk of data corruption when the (hopefully) remaining rest of the cluster recovers them.

Corosync will consider a network unusable if it is completely down or if the latency is too high. Latency can become too high due to other services congesting the physical link. For example, anything related to storage or backup has a good chance of saturating a network link. These two factors can even be combined, for example if the same physical link is used to access the disk images and write the data to the backup target.

If all or most nodes in the cluster experience the same Corosync connection problems and fence themselves, it will look like the cluster has rebooted out of the blue.

There is currently (as of 2024) no fault tolerance available where two VMs on different nodes run in lockstep. The feature is called COLO in QEMU/KVM and is currently under development.

Configuration

To use HA, the guest disk images need to be available on the nodes. Usually, this means that they should be stored on a shared storage. In smaller clusters, ZFS and Replication may be an alternative, but it has the caveat that it is asynchronous and could therefore result in some data loss, depending on when the last successful replication took place.

For passed-through devices in an HA environment, resource mappings can be used to simplify the allocation.

HA groups are not always necessary, but can help to control the behavior of the HA functionality. This can be useful if certain guests can be recovered only on a subset of nodes, for example if a certain storage or passed-through device is not available on all nodes. If HA is handled on the application layer (in-guest), the HA groups can be utilized to make sure that VMs will never run on the same node.

Backup

Proxmox VE has backup integration using either the archive-based Proxmox vzdump tool or the Proxmox Backup Server. Both solutions create full backups.

Proxmox Backup Server provides deduplication independent of the file system. In addition, only incremental changes since the last backup are sent when backing up running VMs. This results in fast backups of VMs and minimal storage usage on the Proxmox Backup Server. Additionally, Proxmox Backup Server supports live-restore of VMs, which means the VM can be started right away while the restore is still ongoing.

There might be other third-party backup software that integrates with Proxmox VE.

Migration

There are two methods for migrating Virtual Machines: manual or automatic import of the full VM. It is highly recommended to test the migration with one or more test VMs and become familiar with the processes before moving the production environment.

Target VM configuration

Best practices

The configuration of the target VM on Proxmox VE should follow best practices. They are:

- CPU: the CPU type should match the underlying hardware closely while still allowing live-migration.

  - if all nodes in the cluster have the exact same CPU model you can use the host CPU type.

  - if some nodes in the cluster have different CPU models, or you plan to extend the cluster using different CPUs in the future, we recommend using one of the generic x86-64-v<X> models.

  - The Virtual Machine CPU type documentation has more details.

- Network: VirtIO has the least overhead and is preferable. Other NIC models can be chosen for older operating systems that do not have drivers for the paravirtualized VirtIO NIC. See the VM Network documentation for details.

- Memory: Enable the "Ballooning Device". Even if you do not need memory ballooning, it is used to gather detailed memory usage information from the guest OS. See the VM Memory documentation for details.

- Disks:

  - Bus type: SCSI with the SCSI controller set to VirtIO SCSI single to use the efficient VirtIO-SCSI driver that also allows IO threads. For this to work, the guest needs to support VirtIO (see below).

  - Discard enabled to pass through trim/discard commands to the storage layer (useful when thin provisioned).

  - IO thread enabled to delegate disk IO to a separate thread.

  - See the VM Disk documentation for details.

- QEMU guest agent: Installing the QEMU guest agent in VMs is recommended to improve communication between the host and guest and facilitate command execution in the guest. Additional information can be found in the Qemu-guest-agent wiki article (https://pve.proxmox.com/wiki/Qemu-guest-agent).
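The CPU type recommendation from the list above can be expressed as a small decision rule. The Python sketch below is an illustration only: the function name, the input format and the choice of the lowest level supported by all nodes are assumptions made for this example. The text itself only recommends using one of the generic x86-64-v<X> models, so which level each CPU supports has to be determined separately.

```python
def recommend_cpu_type(nodes: dict[str, tuple[str, int]],
                       different_cpus_planned: bool = False) -> str:
    """Suggest a VM CPU type for a cluster.

    `nodes` maps a node name to (CPU model string, highest supported x86-64 level).
    Returns "host" for a homogeneous cluster that will stay homogeneous,
    otherwise a generic model at the lowest level every node supports.
    """
    if not nodes:
        raise ValueError("at least one node is required")
    models = {model for model, _level in nodes.values()}
    if len(models) == 1 and not different_cpus_planned:
        return "host"
    lowest_level = min(level for _model, level in nodes.values())
    return f"x86-64-v{lowest_level}"


if __name__ == "__main__":
    # Hypothetical node names and CPU models.
    same = {"pve1": ("EPYC 7302", 3), "pve2": ("EPYC 7302", 3)}
    mixed = {"pve1": ("EPYC 7302", 3), "pve2": ("Xeon E5-2620 v2", 2)}
    print(recommend_cpu_type(same))
    print(recommend_cpu_type(mixed))
    print(recommend_cpu_type(same, different_cpus_planned=True))
```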

VirtIO Guest Drivers

Linux-based VMs will support the paravirtualized VirtIO drivers out of the box, unless they are ancient. Support has been present since kernel 2.6.x.

Since Windows does not come with VirtIO driver support out of the box, use IDE or SATA for the disk bus type at first when migrating existing Windows VMs. After the migration, additional steps are necessary for Windows to switch the (boot) disk to VirtIO SCSI.

When creating new Windows VMs, you can directly install the VirtIO drivers in the Windows installation setup wizard by adding an extra CDROM drive with an ISO containing the Windows VirtIO drivers.

BIOS / UEFI

The BIOS setting depends on the source VM. If it boots in legacy BIOS mode, it needs to be set to SeaBIOS. If it boots in UEFI mode, set it to OVMF (UEFI).

Some operating systems will not set up the default boot path in UEFI mode (/EFI/BOOT/BOOTX64.EFI) but only their own custom one. In such a situation, the VM will not boot even when the BIOS is set correctly. You will have to enter the UEFI BIOS and add the custom boot entry. An EFI Disk needs to be configured in the Hardware panel of the VM to persist these settings.

Preparation

- Before the migration, remove any guest tools specific to the old hypervisor, as it might be difficult to remove them after.

- Note down the guest network configuration, so you can manually restore it.

- On Windows, consider removing the static network configuration, if there is any. After the migration, the network adapter will change and Windows will show a warning if you configure the same IP address on another network adapter, even if the previous one is not present anymore.

- In case of DHCP reservations, either adapt the reservation to the new MAC address of the target VM NIC, or set the MAC address on the target VM NIC manually.

- If full-disk encryption is used in the VM and the keys are stored in a virtual TPM device, consider disabling it. It is currently not possible to migrate the vTPM state to Proxmox VE from VMware. Make sure to have the manual keys to decrypt the VM available, just in case.

- Power down the source VM.

Automatic Import of Full VM

Proxmox VE provides an integrated VM importer using the storage plugin system for native integration into the API and web-based user interface. You can use this to import the VM as a whole, with most of its config mapped to Proxmox VE's config model and reduced downtime.

Supported Import Sources

At the time of writing (November 2024), VMware ESXi and OVAs/OVFs are supported as import sources. Further sources may be added in the future using the same mechanism.

Import was tested from ESXi version 6.5 up to version 8.0.

Importing a VM with disks backed by a VMware vSAN storage does not work. A workaround may be to move the hard disks of the VM to another storage first.

Importing from a datastore with special characters like '+' might not work. A workaround may be to rename the datastore.

Importing a VM from VMware ESXi can be significantly slower if the VM has snapshots.

Encrypted VM disks, for example via a Storage Policy, cannot be imported. Remove the encryption policy first so that the disks are decrypted before the import.

Automatic ESXi Import: Step by Step

To import VMs from an ESXi instance, you can follow these steps:

- Make sure that your Proxmox VE is on version 8 (or above) and has the latest available system updates applied.

- Add an "ESXi" import-source storage, through the Datacenter -> Storage -> Add menu.

- Enter the domain or IP-address and the credentials of an admin account here.

- If your ESXi instance has a self-signed certificate, you need to either add the CA to your system trust store or check the Skip Certificate Verification checkbox.

- Note: While one can also import through a vCenter instance, doing so will dramatically reduce performance.

- Select the storage in the resource tree, which is located on the left.

- Check the content of the import panel to verify that you can see all available VMs.

- Select a VM you want to import and click on the Import button at the top.

- Select at least the target storage for the VM's disks and the network bridge that the VM's network device should connect to.

- Use the Advanced tab for more fine-grained selection, for example:

  - choose a new ISO image for CD-ROM drives

  - select different storage targets to use for each disk if there are multiple ones

  - configure different network hardware models or bridges for multiple network devices

  - disable importing some disks, CD-ROM drives or network devices

- Please note that you can edit and extend even more options and details of the VM hardware after creation.

- Optionally check the Resulting Config tab for the full list of key-value pairs that will be used to create the VM.

- Make sure you have completed the preparations for the VM, then power down the VM on the source side to ensure a consistent state.

- Start the actual import on the Proxmox VE side.

- Boot the VM and then check if any post-migration changes are required.
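The ESXi import source from the second step can presumably also be added from the shell with `pvesm add`. The sketch below is an assumption-laden illustration, not the documented procedure: the storage name `esxi-source`, the address `192.0.2.10`, and the credentials are placeholders. Verify the option names `--server`, `--username`, `--password` and `--skip-cert-verification` against `pvesm help add` on your version. By default the script only prints the command.

```shell
#!/bin/sh
# Sketch: add an ESXi import source from the shell instead of the GUI.
# DRY_RUN defaults to 1, so the command is only printed. Set DRY_RUN=0 on a
# Proxmox VE host to actually run it.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

add_esxi_source() {
    storage_id=$1   # name of the new storage entry (placeholder)
    server=$2       # domain or IP address of the ESXi host
    username=$3     # admin account on the ESXi host
    password=$4     # password of that account
    # --skip-cert-verification 1 mirrors the "Skip Certificate Verification"
    # checkbox. Leave it out if the CA is in the system trust store.
    run pvesm add esxi "$storage_id" \
        --server "$server" \
        --username "$username" \
        --password "$password" \
        --skip-cert-verification 1
}

add_esxi_source esxi-source 192.0.2.10 root 'example-password'
```

Selecting VMs and starting the import then continues in the web interface as described in the steps above.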

Live-Import

Proxmox VE also supports live import, which means the VM is started during the import process. This is achieved by importing the hard disk data required by the running guest operating system at an early stage and importing the remaining data asynchronously in the background. This option reduces downtime. Please note that the VM on the ESXi is still switched off, so there will be some downtime.

The IO performance will be slightly reduced initially. How big the impact is depends on many variables, such as source and target storage performance, network bandwidth, and the guest OS and workload running in the VM on start-up. In most cases, live-import reduces the total downtime of a service significantly.

Note: Should the import fail, all data written since the start of the import will be lost. We therefore recommend testing this mechanism on a test VM first, and avoiding it in networks with low bandwidth and/or high error rates.

Considerations for Mass Import

When importing many virtual machines, one might be tempted to start as many imports in parallel as possible. However, while the FUSE filesystem that provides the interface between Proxmox VE and VMware ESXi can handle multiple imports, it's still recommended to serialize import tasks as much as possible.

The main limiting factors are:

- The connection limit of the ESXi API. The ESXi API has a relatively low limit on the number of available connections. Once the limit is reached, clients are blocked for roughly 30 seconds.

- Starting multiple imports at the same time means that the ESXi API is more likely to be overloaded. In that case, it will start blocking all requests, including all other running imports, which can result in hanging IO for guests that get live-imported.

- To reduce the possibility of an overloaded ESXi, the Proxmox `esxi-folder-fuse` service limits parallel connections to four and serializes retries of requests after getting rate-limited.

- If you still see rate-limiting or 503 Service Unavailable errors, you can try to adapt the session limit options: either increase the limit significantly or set it to 0 to disable it completely. Depending on your ESXi version, you can change this by:

  - ESXi 7.0: Adapting the Config.HostAgent.vmacore.soap.maxSessionCount option, located in the web UI under Host -> Manage -> System -> Advanced Settings

  - ESXi 6.5/6.7: Set the <sessionTimeout> and <maxSessionCount> values to 0 in the /etc/vmware/hostd/config.xml configuration and either restart hostd (/etc/init.d/hostd restart) or reboot your ESXi.

    - If you cannot find those options, you can add them by inserting <soap><sessionTimeout>0</sessionTimeout><maxSessionCount>0</maxSessionCount></soap> inside the <config> section (restart hostd afterwards).

- The memory usage of the read-ahead cache, used to cope with small read operations. Each disk has a cache consisting of 8 blocks of 128 MiB each.

- Running many import tasks at the same time could lead to out-of-memory situations on your Proxmox VE host.

In general, it is advised to limit imports such that no more than four VM disks are imported at the same time. This could mean four VMs with one disk each, or one VM with four disks. Depending on your specific setup, it might only be possible to run one import at a time, or it might be feasible to run several imports in parallel.
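To get a feeling for the memory side of this limit, the cache figures given above (8 blocks of 128 MiB per disk) can be multiplied out. The following is a small sketch. The function name `cache_mib` is hypothetical, and the result only covers the read-ahead cache, not any other memory used by an import task.

```shell
#!/bin/sh
# Sketch: estimate the read-ahead cache memory (in MiB) needed when a given
# number of disks is imported in parallel.
# Figures from the text: 8 blocks per disk, 128 MiB per block.
cache_mib() {
    disks=$1
    blocks_per_disk=8
    block_mib=128
    echo $(( disks * blocks_per_disk * block_mib ))
}

# Four disks in parallel, the recommended upper bound:
cache_mib 4   # prints 4096
```

Each disk therefore accounts for 1 GiB of cache, and four parallel disk imports for about 4 GiB on the Proxmox VE host.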

Manual Migration

Several methods are possible to migrate the disks of the powered-down source VM to the new target VM. They will have different downtimes. For large VM disks, it may be worth the effort to choose a more involved method, as the VM downtime can be reduced considerably.

Restore from backup

If you already have backups of the VMs, check if the backup solution provides an integration with Proxmox VE. Alternatively, it might be possible to boot the VM from a live medium and do an in-place restore from a backup, similar to restoring to bare metal.

Clone directly

By using tools like Clonezilla, it is possible to clone the source VM disks directly to the newly created target VM. This usually means booting the source and target VM with the live medium. Then it is typically possible to either clone the disk directly to the target VM or store it into an image on a network share and clone it from there to the target VM.

Import OVF

If the VM was exported as OVF, you can import it either with the import function in the GUI or with the command qm importovf.

One way to export a VM from VMware, even directly to the Proxmox VE host, is via the ovftool, which can be downloaded from VMware. Make sure the version is new enough to support the ESXi version you are using.

Download the Linux 64-bit version. To unzip the ZIP archive on a Proxmox VE host, the unzip utility needs to be installed with apt install unzip first.

To run the ovftool, navigate to the location it was extracted to and run it with the following parameters when connecting to an ESXi host.

./ovftool vi://root@{IP or FQDN of ESXi host}/{VM name} /path/to/export/location

When connecting to a vCenter instance, the command will look like this:

./ovftool vi://{user}:{password}@{IP or FQDN of vCenter}/{Datacenter}/vm/{VM name} /path/to/export/location

If you are unsure about the location of the source VM, you can run the ovftool without any additional source location and without a path to export to. It will then list the possible next steps. For example:

./ovftool vi://{user}:{password}@{IP or FQDN of vCenter}/{Datacenter}/

Once exported, navigate to the export directory that contains the {VM name}.ovf and matching vmdk file.

The command to import is:

qm importovf {vmid} {VM name}.ovf {target storage}

For example:

qm importovf 100 Server.ovf local-zfs

Since the VM will be created by this command, choose a free VMID or press Tab for auto-completion of the next free VMID. The target storage can be any storage on the node for which the content type "Disk Image" is enabled.

Note: If the target storage stores the disk images as files, the --format parameter can be used to define the file format. Available are the following options: raw, vmdk, qcow2. The native format for QEMU/KVM is qcow2.

When the import is finished, the VM configuration needs to be finalized:

- Add a network card

- Adjust settings to follow best practices. For example, you can use the CLI to set the CPU type and SCSI controller:

qm set {vmid} --cpu x86-64-v2-AES --scsihw virtio-scsi-single

- When the guest OS is Windows, the disk bus type needs to be switched from the default SCSI to IDE or SATA.

  - Detach the disk. It will show up as "Unused" disk.

  - Attach the disk by double-clicking the "Unused" disk, or selecting it and clicking "Edit". Then set the bus type to either IDE or SATA. Additional steps are necessary to switch to VirtIO.
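The import and the first finalization steps can be chained in a small script. The following is a sketch under assumptions, not a definitive procedure: the bridge name `vmbr0` and the VirtIO network model are illustrative choices (VirtIO needs drivers in the guest, so a Windows guest without them would need a different model), and the function name `import_ovf_and_finalize` is hypothetical. By default the script only prints the commands.

```shell
#!/bin/sh
# Sketch: import an OVF and apply the first finalization steps.
# DRY_RUN defaults to 1, so commands are only printed. Set DRY_RUN=0 on a
# Proxmox VE host to actually run them.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

import_ovf_and_finalize() {
    vmid=$1       # free VMID for the new VM
    manifest=$2   # exported OVF file, e.g. Server.ovf
    storage=$3    # target storage with content type "Disk Image"
    bridge=$4     # network bridge to connect to (assumption: vmbr0)

    run qm importovf "$vmid" "$manifest" "$storage"
    # Add a network card. The VirtIO model is an assumption.
    run qm set "$vmid" --net0 "virtio,bridge=$bridge"
    # CPU type and SCSI controller as in the example in the text.
    run qm set "$vmid" --cpu x86-64-v2-AES --scsihw virtio-scsi-single
}

import_ovf_and_finalize 100 Server.ovf local-zfs vmbr0
```

For a Windows guest, switching the disk bus to IDE or SATA still has to be done afterwards as described in the list above.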

Import Disk

Here, the idea is to import the disk images directly with the qm disk import command. Proxmox VE needs to be able to access the *.vmdk and *-flat.vmdk files:

- The easiest way to set this up is a network share which can be accessed by both the source VMware cluster and the target Proxmox VE cluster. If the network share type is supported by Proxmox VE, you can directly add it as a storage.

- Alternatively, you can copy the files to a location accessible for Proxmox VE.

The qm disk import command will convert the disk image to the correct target format on the fly. We assume that the source VM is called "Server".

- Run through the preparation steps of the source VM.

- Create a new VM on the Proxmox VE host with the configuration needed.

- In the "Disk" tab, remove the default disk.

- On the Proxmox VE host, open a shell, either via the web GUI or SSH.

- Go to the directory where the vmdk files are located. For example, if you configured a storage for the network share: cd /mnt/pve/{storage name}/{VM name}. Use the ls command to list the directory contents to verify that the needed files are present.

- The parameters for the command are: qm disk import {target VMID} {vmdk file} {target storage}. For example:

qm disk import 104 Server.vmdk local-zfs

Note: If the target storage stores the disk images as files, the --format parameter can be used to define the file format. Available are the following options: raw, vmdk, qcow2. The native format for QEMU/KVM is qcow2.

- Once the import is done, the disk will show up as unusedX disk in the hardware panel of the VM.

- Attach the disk image to a bus. For this, double click or select it and hit the Edit button.

- Keep in mind that for a Windows VM, only IDE or SATA will work out of the box. After the migration, additional steps are necessary to switch to VirtIO.

- If the disk image is the boot disk, enable it and choose the correct boot order in the Options -> Boot Order panel of the VM.
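The attach and boot order steps can also be done on the command line instead of the hardware panel. The following is a minimal sketch, assuming the imported image ends up as volume `local-zfs:vm-104-disk-0`. That volume name is an assumption: check the output of the import or the `unusedX` entry in `qm config {vmid}` for the real one. The function name `import_and_attach` is hypothetical, and SATA is chosen because it works for Windows guests out of the box. By default the script only prints the commands.

```shell
#!/bin/sh
# Sketch: import a VMDK, attach it to a SATA bus and boot from it.
# DRY_RUN defaults to 1, so commands are only printed. Set DRY_RUN=0 on a
# Proxmox VE host to actually run them.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

import_and_attach() {
    vmid=$1      # target VMID (the VM must already exist)
    vmdk=$2      # VMDK descriptor file, e.g. Server.vmdk
    storage=$3   # target storage with content type "Disk Image"
    volume=$4    # volume created by the import (assumption, verify it first)

    run qm disk import "$vmid" "$vmdk" "$storage"
    # The imported image shows up as unusedX. Attach it to the first SATA slot.
    run qm set "$vmid" --sata0 "$volume"
    # Make the attached disk the boot device.
    run qm set "$vmid" --boot order=sata0
}

import_and_attach 104 Server.vmdk local-zfs local-zfs:vm-104-disk-0
```

When actually running this with DRY_RUN=0, it is safer to execute the import first, read the resulting volume name, and only then run the two `qm set` calls.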

Attach Disk & Move Disk (minimal downtime)

If the source VM is accessible by both the VMware and Proxmox VE clusters (ideally via a network share), Proxmox VE can use the *.vmdk disk image to start the VM right away. Then, the disk image can be moved to the final target storage in the Proxmox VE cluster while the VM is running. The resulting downtime will be minimal. This method is more involved than the other methods, but the very short downtime can be worth it.

We assume that the source VM is called "Server".

- On the Proxmox VE cluster, create a new storage pointing to the network share. The content type "Disk Image" needs to be enabled. Proxmox VE will mount the share under /mnt/pve/{storage name}. Check that the *.vmdk and *-flat.vmdk files from VMware are available under that path.

- Create a target VM that matches the source VM as closely as possible.

  - EFI disks (visible in the UI when selecting Microsoft Windows as OS or OVMF/UEFI as BIOS) and TPM devices should be stored in the final target storage.

  - For the OS disk, select the network share as storage and vmdk as format. With this setting, Proxmox VE will create a new *.vmdk file under /mnt/pve/{storage name}/images/{vmid}/.

  - Keep in mind that for a Windows VM, only IDE or SATA will work out of the box. After the migration, additional steps are necessary to switch to VirtIO.

- Copy the source *.vmdk file and overwrite the one that was created by Proxmox VE. Leave the source *-flat.vmdk as it is. Verify the name of the disk image in the hardware panel of the VM. For example, for a VM with the VMID 103, navigate to /mnt/pve/{storage name} and run:

cp Server/Server.vmdk images/103/vm-103-disk-0.vmdk

- Edit the copied file, for example: nano images/103/vm-103-disk-0.vmdk. It will look similar to the following:

# Disk DescriptorFile
version=1
encoding="UTF-8"
CID=0ae39a16
parentCID=ffffffff
createType="vmfs"
# Extent description
RW 104857600 VMFS "Server-flat.vmdk"
# The Disk Data Base
#DDB
ddb.adapterType = "lsilogic"
ddb.geometry.cylinders = "6527"
ddb.geometry.heads = "255"
ddb.geometry.sectors = "63"
ddb.longContentID = "b53ce19184ef44b66d5350320ae39a16"
ddb.thinProvisioned = "1"
ddb.toolsInstallType = "-1"
ddb.toolsVersion = "0"
ddb.uuid = "60 00 C2 9e 0b 21 85 f5-bc f9 da cc 5d 21 a4 dc"
ddb.virtualHWVersion = "14"

- Edit the section below # Extent description to point to the location of the source *-flat.vmdk. Most likely the section will look similar to this:

RW 104857600 VMFS "Server-flat.vmdk"

Change the path to a relative path pointing to the source *-flat.vmdk. As the *.vmdk is located in images/{vmid}/, the path needs to go two levels up, and then into the directory of the source VM:

RW 104857600 VMFS "../../Server/Server-flat.vmdk"

- Power on the VM in Proxmox VE. The VM should boot normally.

- While the VM is running, move the disk to the target storage. For this, select it in the hardware panel of the VM and then use the "Disk Action -> Move Storage" menu. The "Delete source" option can be enabled. It will only remove the edited vmdk file.
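Instead of editing the copied descriptor by hand, the extent line can be rewritten with sed. The following is a sketch under assumptions: it expects a single `RW ... VMFS "...-flat.vmdk"` extent line as in the example above, GNU sed (as shipped with Proxmox VE), and a source directory name without `|`, `&` or backslash characters. The function name `fix_extent_path` is hypothetical.

```shell
#!/bin/sh
# Sketch: rewrite the extent line of a copied VMDK descriptor so that it
# points at the source *-flat.vmdk via a relative path (two levels up from
# images/{vmid}/, then into the source VM directory).
# Assumption: GNU sed, and an extent line of the form
#   RW <sectors> VMFS "<name>-flat.vmdk"
fix_extent_path() {
    descriptor=$1   # copied descriptor, e.g. images/103/vm-103-disk-0.vmdk
    source_dir=$2   # directory of the source VM on the share, e.g. Server
    # Only file names without a slash are matched, so running the function
    # twice does not prepend the path a second time.
    sed -i -E \
        "s|^(RW [0-9]+ VMFS )\"([^\"/]+-flat\.vmdk)\"|\1\"../../${source_dir}/\2\"|" \
        "$descriptor"
}

# Example: only runs if the copied descriptor from the steps above exists.
if [ -f images/103/vm-103-disk-0.vmdk ]; then
    fix_extent_path images/103/vm-103-disk-0.vmdk Server
fi
```

Run it from /mnt/pve/{storage name} before powering on the VM, and check the result with a text editor or cat, since a wrong path will prevent the VM from starting.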

Post Migration

- Update network settings. The name of the network adapter will most likely have changed.

- Install missing drivers. This is mostly relevant for Windows VMs; download and attach the VirtIO ISO. How to switch the boot disk to VirtIO after the driver is installed is explained here.

Getting Help

If you require technical assistance and have an active subscription (at Basic, Standard or Premium level), please contact our support engineers through our Customer portal. Subscriptions can be purchased at the Proxmox online shop. For any purchase-related inquiries, register in the shop and open a ticket so that our customer service team can assist you.

If you are seeking local support, training, and consulting services in your region or language, please refer to our partner ecosystem.

Our community forum and user mailing list are excellent resources for connecting with the worldwide Proxmox community, and can be used to ask for help if you do not have any subscription eligible for enterprise support.

For general inquiries you can contact us at office@proxmox.com.