NAME

pvesr - Proxmox VE Storage Replication

SYNOPSIS

pvesr <COMMAND> [ARGS] [OPTIONS]

pvesr create-local-job <id> <target> [OPTIONS]

Create a new replication job

- <id>: [1-9][0-9]{2,8}-\d{1,9}

  Replication Job ID. The ID is composed of a Guest ID and a job number, separated by a hyphen, i.e. <GUEST>-<JOBNUM>.

- <target>: <string>

  Target node.

- --comment <string>

  Description.

- --disable <boolean>

  Flag to disable/deactivate the entry.

- --rate <number> (1 - N)

  Rate limit in MB/s (megabytes per second) as a floating point number.

- --remove_job <full | local>

  Mark the replication job for removal. The job will remove all local replication snapshots. When set to full, it also tries to remove replicated volumes on the target. The job then removes itself from the configuration file.

- --schedule <string> (default = */15)

  Storage replication schedule. The format is a subset of systemd calendar events.

- --source <string>

  For internal use, to detect if the guest was stolen.

pvesr delete <id> [OPTIONS]

Mark replication job for removal.

- <id>: [1-9][0-9]{2,8}-\d{1,9}

  Replication Job ID. The ID is composed of a Guest ID and a job number, separated by a hyphen, i.e. <GUEST>-<JOBNUM>.

- --force <boolean> (default = 0)

  Removes the job configuration entry, but does not clean up.

- --keep <boolean> (default = 0)

  Keep replicated data at target (do not remove).

pvesr disable <id>

Disable a replication job.

- <id>: [1-9][0-9]{2,8}-\d{1,9}

  Replication Job ID. The ID is composed of a Guest ID and a job number, separated by a hyphen, i.e. <GUEST>-<JOBNUM>.

pvesr enable <id>

Enable a replication job.

- <id>: [1-9][0-9]{2,8}-\d{1,9}

  Replication Job ID. The ID is composed of a Guest ID and a job number, separated by a hyphen, i.e. <GUEST>-<JOBNUM>.

pvesr finalize-local-job <id> [<extra-args>] [OPTIONS]

Finalize a replication job. This removes all replication snapshots with timestamps different from <last_sync>.

- <id>: [1-9][0-9]{2,8}-\d{1,9}

  Replication Job ID. The ID is composed of a Guest ID and a job number, separated by a hyphen, i.e. <GUEST>-<JOBNUM>.

- <extra-args>: <array>

  The list of volume IDs to consider.

- --last_sync <integer> (0 - N)

  Time (UNIX epoch) of last successful sync. If not specified, all replication snapshots get removed.

pvesr help [OPTIONS]

Get help about specified command.

- --extra-args <array>

  Shows help for a specific command.

- --verbose <boolean>

  Verbose output format.

pvesr list

List replication jobs.

pvesr prepare-local-job <id> [<extra-args>] [OPTIONS]

Prepare for starting a replication job. This is called on the target node before replication starts. This call is for internal use and returns a JSON object on stdout. The method first tests whether VM <vmid> resides on the local node. If so, it stops immediately. After that, the method scans all volume IDs for snapshots and removes all replication snapshots with timestamps different from <last_sync>. It also removes any unused volumes. Returns a hash with boolean markers for all volumes with existing replication snapshots.

- <id>: [1-9][0-9]{2,8}-\d{1,9}

  Replication Job ID. The ID is composed of a Guest ID and a job number, separated by a hyphen, i.e. <GUEST>-<JOBNUM>.

- <extra-args>: <array>

  The list of volume IDs to consider.

- --force <boolean> (default = 0)

  Allow removing all existing volumes (empty volume list).

- --last_sync <integer> (0 - N)

  Time (UNIX epoch) of last successful sync. If not specified, all replication snapshots get removed.

- --parent_snapname <string>

  The name of the snapshot.

- --scan <string>

  List of storage IDs to scan for stale volumes.

pvesr read <id>

Read replication job configuration.

- <id>: [1-9][0-9]{2,8}-\d{1,9}

  Replication Job ID. The ID is composed of a Guest ID and a job number, separated by a hyphen, i.e. <GUEST>-<JOBNUM>.

pvesr run [OPTIONS]

This method is called by the systemd-timer and executes all (or a specific) sync jobs.

- --id [1-9][0-9]{2,8}-\d{1,9}

  Replication Job ID. The ID is composed of a Guest ID and a job number, separated by a hyphen, i.e. <GUEST>-<JOBNUM>.

- --mail <boolean> (default = 0)

  Send an email notification in case of a failure.

- --verbose <boolean> (default = 0)

  Print more verbose logs to stdout.

pvesr schedule-now <id>

Schedule replication job to start as soon as possible.

- <id>: [1-9][0-9]{2,8}-\d{1,9}

  Replication Job ID. The ID is composed of a Guest ID and a job number, separated by a hyphen, i.e. <GUEST>-<JOBNUM>.

pvesr set-state <vmid> <state>

Set the job replication state on migration. This call is for internal use. It accepts the job state as a JSON object.

- <vmid>: <integer> (1 - N)

  The (unique) ID of the VM.

- <state>: <string>

  Job state as a JSON-encoded string.

pvesr status [OPTIONS]

List status of all replication jobs on this node.

- --guest <integer> (1 - N)

  Only list replication jobs for this guest.

pvesr update <id> [OPTIONS]

Update replication job configuration.

- <id>: [1-9][0-9]{2,8}-\d{1,9}

  Replication Job ID. The ID is composed of a Guest ID and a job number, separated by a hyphen, i.e. <GUEST>-<JOBNUM>.

- --comment <string>

  Description.

- --delete <string>

  A list of settings you want to delete.

- --digest <string>

  Prevent changes if the current configuration file has a different SHA1 digest. This can be used to prevent concurrent modifications.

- --disable <boolean>

  Flag to disable/deactivate the entry.

- --rate <number> (1 - N)

  Rate limit in MB/s (megabytes per second) as a floating point number.

- --remove_job <full | local>

  Mark the replication job for removal. The job will remove all local replication snapshots. When set to full, it also tries to remove replicated volumes on the target. The job then removes itself from the configuration file.

- --schedule <string> (default = */15)

  Storage replication schedule. The format is a subset of systemd calendar events.

- --source <string>

  For internal use, to detect if the guest was stolen.

DESCRIPTION

The pvesr command line tool manages the Proxmox VE storage replication framework. Storage replication brings redundancy for guests using local storage and reduces migration time.

It replicates guest volumes to another node so that all data is available without using shared storage. Replication uses snapshots to minimize traffic sent over the network. Therefore, new data is sent only incrementally after the initial full sync. In the case of a node failure, your guest data is still available on the replicated node.

Replication is done automatically at configurable intervals. The minimum replication interval is one minute, and the maximum interval is once a week. The format used to specify those intervals is a subset of systemd calendar events; see the Schedule Format section.

It is possible to replicate a guest to multiple target nodes, but not twice to the same target node.

The bandwidth of each replication can be limited to avoid overloading a storage or server.

Guests with replication enabled can currently only be migrated offline. Only changes since the last replication (so-called deltas) need to be transferred if the guest is migrated to a node to which it already is replicated. This reduces the time needed significantly. The replication direction automatically switches if you migrate a guest to the replication target node.

For example: VM100 is currently on nodeA and gets replicated to nodeB. You migrate it to nodeB, so now it gets automatically replicated back from nodeB to nodeA.

If you migrate to a node where the guest is not replicated, the whole disk data must be sent over. After the migration, the replication job continues to replicate this guest to the configured nodes.

High-Availability is allowed in combination with storage replication, but it has the following implications:

Supported Storage Types

| Description | PVE type | Snapshots | Stable |
|---|---|---|---|
| ZFS (local) | zfspool | yes | yes |

Schedule Format

Proxmox VE has a very flexible replication scheduler. It is based on the systemd time calendar event format.[1] Calendar events may be used to refer to one or more points in time in a single expression.

Such a calendar event uses the following format:

[day(s)] [[start-time(s)][/repetition-time(s)]]

This format allows you to configure a set of days on which the job should run. You can also set one or more start times, which tell the replication scheduler the moments in time when a job should start. With this information, we can create a job which runs every workday at 10 PM: 'mon,tue,wed,thu,fri 22:00', which can be abbreviated to 'mon..fri 22:00'. Most reasonable schedules can be written quite intuitively this way.

Hours are formatted in 24-hour format.

To allow a convenient and shorter configuration, one or more repeat times per guest can be set. They indicate that replications are done on the start-time(s) itself and the start-time(s) plus all multiples of the repetition value. If you want to start replication at 8 AM and repeat it every 15 minutes until 9 AM you would use: '8:00/15'

Here you see that if no hour separator (:) is used, the value is interpreted as minutes. If such a separator is used, the value on the left denotes the hour(s), and the value on the right denotes the minute(s). Further, you can use * to match all possible values.
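The repetition rule can be sketched in a few lines of POSIX shell. This is only an illustration of the description above, not the scheduler's actual parser; the function names expand_minutes and to_decimal are made up for this example. It takes a single minute field (start value, optionally followed by a repetition value) and prints the minutes of the hour at which a job would start.

```shell
# Strip leading zeros so that values like "08" are treated as decimal.
to_decimal() {
    printf '%s\n' "$1" | sed 's/^0*\([0-9]\)/\1/'
}

# Expand a minute field such as "30/10" or "*/5" (hypothetical helper).
expand_minutes() {
    spec=$1
    start=${spec%%/*}                 # value before "/"
    step=0                            # 0 means: no repetition given
    case $spec in
        */*) step=$(to_decimal "${spec#*/}") ;;
    esac
    if [ "$start" = "*" ]; then
        start=0
        # "*" alone matches every minute; "*/N" starts at 0 and repeats.
        if [ "$step" -eq 0 ]; then
            step=1
        fi
    else
        start=$(to_decimal "$start")
    fi
    out=$start
    if [ "$step" -gt 0 ]; then
        # Add the start value plus all multiples of the repetition value.
        minute=$((start + step))
        while [ "$minute" -le 59 ]; do
            out="$out $minute"
            minute=$((minute + step))
        done
    fi
    echo "$out"
}

expand_minutes "30/10"   # prints: 30 40 50
expand_minutes "00/15"   # prints: 0 15 30 45
```

The second call corresponds to the minute part of '8:00/15': within hour 8, replication would start at minutes 0, 15, 30 and 45.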

For additional ideas, see the examples below.

Detailed Specification

- days

  Days are specified with an abbreviated English version: sun, mon, tue, wed, thu, fri and sat. You may use multiple days as a comma-separated list. A range of days can also be set by specifying the start and end day separated by “..”, for example mon..fri. These formats can be mixed. If omitted, '*' is assumed.

- time-format

  A time format consists of hours and minutes interval lists. Hours and minutes are separated by ':'. Both hours and minutes can be lists and ranges of values, using the same format as days. Hours come first, then minutes. Hours can be omitted if not needed; in this case '*' is assumed for the value of hours. The valid range for values is 0-23 for hours and 0-59 for minutes.
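As a rough illustration of the time-format rule, the following POSIX shell sketch splits a time field into its hour and minute parts and falls back to '*' for the hours when no ':' is present. The function name split_time is hypothetical and exists only for this example; it does not validate the value ranges.

```shell
# Split a time field into hours and minutes (hypothetical helper).
split_time() {
    case $1 in
        *:*)
            hours=${1%%:*}      # everything before the first ":"
            minutes=${1#*:}     # everything after the first ":"
            ;;
        *)
            hours="*"           # hours omitted: '*' is assumed
            minutes=$1
            ;;
    esac
    echo "hours=$hours minutes=$minutes"
}

split_time "8..17,22:0/15"   # prints: hours=8..17,22 minutes=0/15
split_time "30/10"           # prints: hours=* minutes=30/10
```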

Examples:

| Schedule String | Alternative | Meaning |
|---|---|---|
| mon,tue,wed,thu,fri | mon..fri | Every working day at 0:00 |
| sat,sun | sat..sun | Only on weekends at 0:00 |
| mon,wed,fri | — | Only on Monday, Wednesday and Friday at 0:00 |
| 12:05 | 12:05 | Every day at 12:05 PM |
| */5 | 0/5 | Every five minutes |
| mon..wed 30/10 | mon,tue,wed 30/10 | Monday, Tuesday, Wednesday 30, 40 and 50 minutes after every full hour |
| mon..fri 8..17,22:0/15 | — | Every working day every 15 minutes between 8 AM and 6 PM and between 10 PM and 11 PM |
| fri 12..13:5/20 | fri 12,13:5/20 | Friday at 12:05, 12:25, 12:45, 13:05, 13:25 and 13:45 |
| 12,14,16,18,20,22:5 | 12/2:5 | Every day starting at 12:05 until 22:05, every 2 hours |
| * | */1 | Every minute (minimum interval) |
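The equivalence between the range and list forms of the day field can be checked with a small POSIX shell sketch. The function name expand_days is made up for this example. It assumes the input contains only day names, commas and ".." ranges (no '*'), orders the week from mon to sun so that sat..sun expands as in the table, and does not handle ranges that wrap around the end of the week.

```shell
# Expand a day field such as "mon..fri" or "mon,wed..fri" into a
# comma-separated list of single days (hypothetical helper).
expand_days() {
    week="mon tue wed thu fri sat sun"
    result=""
    # Split the field at commas and handle each item on its own.
    for item in $(printf '%s' "$1" | tr ',' ' '); do
        case $item in
            *..*)
                first=${item%%..*}    # day before ".."
                last=${item##*..}     # day after ".."
                inside=0
                for day in $week; do
                    if [ "$day" = "$first" ]; then inside=1; fi
                    if [ "$inside" -eq 1 ]; then result="$result,$day"; fi
                    if [ "$day" = "$last" ]; then inside=0; fi
                done
                ;;
            *)
                result="$result,$item"
                ;;
        esac
    done
    echo "${result#,}"                # drop the leading comma
}

expand_days "mon..fri"       # prints: mon,tue,wed,thu,fri
expand_days "mon,wed..fri"   # prints: mon,wed,thu,fri
```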

Error Handling

If a replication job encounters problems, it is placed in an error state. In this state, the configured replication intervals are temporarily suspended. The failed replication is retried every 30 minutes. Once this succeeds, the original schedule is activated again.

Possible issues

Some of the most common issues are in the following list. Depending on your setup there may be another cause.

- Network is not working.

- No free space left on the replication target storage.

- Storage with the same storage ID is not available on the target node.

You can always use the replication log to find out what is causing the problem.

Migrating a guest in case of Error

In the case of a grave error, a virtual guest may get stuck on a failed node. You then need to move it manually to a working node again.

Example

Let’s assume that you have two guests (VM 100 and CT 200) running on node A and replicating to node B. Node A has failed and cannot get back online. Now you have to migrate the guests to node B manually.

- Connect to node B over SSH or open its shell via the web UI.

- Check that the cluster is quorate:

# pvecm status

- If you have no quorum, we strongly advise fixing this first and making the node operable again. Only if this is not possible at the moment, you may use the following command to enforce quorum on the current node:

# pvecm expected 1

Avoid changes which affect the cluster at all costs while expected votes are set (for example adding/removing nodes, storages, virtual guests). Only use it to get vital guests up and running again or to resolve the quorum issue itself.

- Move both guest configuration files from the origin node A to node B:

# mv /etc/pve/nodes/A/qemu-server/100.conf /etc/pve/nodes/B/qemu-server/100.conf

# mv /etc/pve/nodes/A/lxc/200.conf /etc/pve/nodes/B/lxc/200.conf

- Now you can start the guests again:

# qm start 100

# pct start 200

Remember to replace the VMIDs and node names with your respective values.
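To make the substitution of node names and VMIDs less error-prone, the move and start commands from the example can be generated from parameters. The POSIX shell sketch below only prints the commands so that you can review them before running anything; the function name recovery_commands and its argument order are assumptions made for this illustration.

```shell
# Print the commands needed to take over one guest from a failed node.
# Arguments: <failed-node> <working-node> <vmid> <type>, where type is
# "qemu" for a VM or "lxc" for a container (hypothetical helper).
recovery_commands() {
    src=$1
    dst=$2
    vmid=$3
    type=$4
    case $type in
        qemu) dir=qemu-server; start="qm start" ;;
        lxc)  dir=lxc;         start="pct start" ;;
        *)    echo "unknown guest type: $type" >&2; return 1 ;;
    esac
    echo "mv /etc/pve/nodes/$src/$dir/$vmid.conf /etc/pve/nodes/$dst/$dir/$vmid.conf"
    echo "$start $vmid"
}

# Reproduces the commands of the example above.
recovery_commands A B 100 qemu
recovery_commands A B 200 lxc
```

Printing instead of executing is a deliberate choice here: the quorum check with pvecm status still has to be done by hand first.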

Managing Jobs

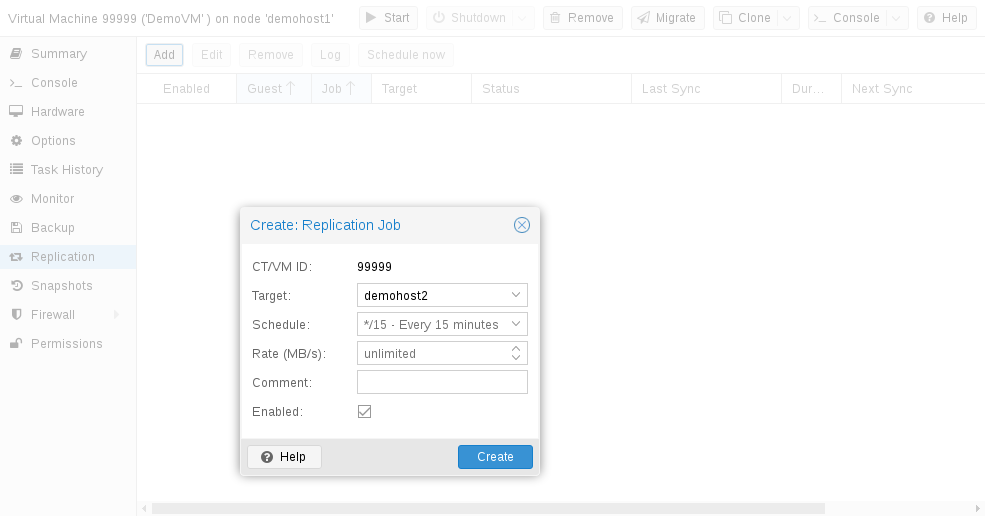

You can use the web GUI to create, modify, and remove replication jobs easily. Additionally, the command line interface (CLI) tool pvesr can be used to do this.

You can find the replication panel on all levels (datacenter, node, virtual guest) in the web GUI. They differ in which jobs get shown: all, node- or guest-specific jobs.

When adding a new job, you need to specify the guest, if not already selected, as well as the target node. The replication schedule can be set if the default of every 15 minutes is not desired. You may impose a rate limit on a replication job. The rate limit can help to keep the load on the storage acceptable.

A replication job is identified by a cluster-wide unique ID. This ID is composed of the VMID and a job number. The ID only needs to be specified manually if the CLI tool is used.
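When scripting around the CLI, it can help to check a job ID before passing it on. The following POSIX shell sketch tests an ID against the pattern given in the synopsis and splits it into its two parts. The function name is_valid_job_id is hypothetical, and \d is written as [0-9] because grep -E uses POSIX extended regular expressions.

```shell
# Succeeds if the argument looks like a replication job ID
# of the form <GUEST>-<JOBNUM> (hypothetical helper).
is_valid_job_id() {
    printf '%s\n' "$1" | grep -Eq '^[1-9][0-9]{2,8}-[0-9]{1,9}$'
}

job_id=100-0
if is_valid_job_id "$job_id"; then
    guest=${job_id%%-*}    # part before the hyphen: the VMID
    jobnum=${job_id#*-}    # part after the hyphen: the job number
    echo "guest=$guest jobnum=$jobnum"
else
    echo "invalid job ID: $job_id" >&2
fi
```

According to the pattern, the guest part needs at least three digits, so an ID such as 99-0 is rejected.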

Command Line Interface Examples

Create a replication job which runs every 5 minutes with a limited bandwidth of 10 MB/s (megabytes per second) for the guest with ID 100.

# pvesr create-local-job 100-0 pve1 --schedule "*/5" --rate 10

Disable an active job with ID 100-0.

# pvesr disable 100-0

Enable a deactivated job with ID 100-0.

# pvesr enable 100-0

Change the schedule interval of the job with ID 100-0 to once per hour.

# pvesr update 100-0 --schedule '*:00'
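A few more invocations, built only from the commands and options listed in the synopsis, are sketched below. The run wrapper and the DRY_RUN variable are assumptions for this example, not part of pvesr: by default the script only prints each command, and it executes them only when DRY_RUN=0 is set on a Proxmox VE node. The job ID 100-0 and guest ID 100 are placeholders.

```shell
# Print each command; execute it only when DRY_RUN is set to 0.
DRY_RUN=${DRY_RUN:-1}
run() {
    echo "+ $*"
    if [ "$DRY_RUN" = "0" ]; then
        "$@"
    fi
}

# Replicate every workday at 10 PM.
run pvesr update 100-0 --schedule 'mon..fri 22:00'

# Start the job as soon as possible instead of waiting for the schedule.
run pvesr schedule-now 100-0

# Show the status of the replication jobs of guest 100 on this node.
run pvesr status --guest 100

# Mark the job for removal, but keep the replicated data on the target.
run pvesr delete 100-0 --keep 1
```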

Copyright and Disclaimer

Copyright © 2007-2019 Proxmox Server Solutions GmbH

This program is free software: you can redistribute it and/or modify it under the terms of the GNU Affero General Public License as published by the Free Software Foundation, either version 3 of the License, or (at your option) any later version.

This program is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU Affero General Public License for more details.

You should have received a copy of the GNU Affero General Public License along with this program. If not, see https://www.gnu.org/licenses/