SYNOPSIS

pveceph <COMMAND> [ARGS] [OPTIONS]

pveceph createmgr

An alias for pveceph mgr create.

pveceph createmon

An alias for pveceph mon create.

pveceph createosd

An alias for pveceph osd create.

pveceph createpool

An alias for pveceph pool create.

pveceph destroymgr

An alias for pveceph mgr destroy.

pveceph destroymon

An alias for pveceph mon destroy.

pveceph destroyosd

An alias for pveceph osd destroy.

pveceph destroypool

An alias for pveceph pool destroy.

pveceph fs create [OPTIONS]

Create a Ceph filesystem

- --add-storage <boolean> (default = 0)

-

Configure the created CephFS as storage for this cluster.

- --name (?^:^[^:/\s]+$) (default = cephfs)

-

The ceph filesystem name.

- --pg_num <integer> (8 - 32768) (default = 128)

-

Number of placement groups for the backing data pool. The metadata pool will use a quarter of this.

pveceph fs destroy <name> [OPTIONS]

Destroy a Ceph filesystem

- <name>: <string>

-

The ceph filesystem name.

- --remove-pools <boolean> (default = 0)

-

Remove data and metadata pools configured for this fs.

- --remove-storages <boolean> (default = 0)

-

Remove all pveceph-managed storages configured for this fs.

pveceph help [OPTIONS]

Get help about specified command.

- --extra-args <array>

-

Shows help for a specific command

- --verbose <boolean>

-

Verbose output format.

pveceph init [OPTIONS]

Create initial ceph default configuration and setup symlinks.

- --cluster-network <string>

-

Declare a separate cluster network, OSDs will routeheartbeat, object replication and recovery traffic over it

Requires option(s): network - --disable_cephx <boolean> (default = 0)

-

Disable cephx authentication.

cephx is a security feature protecting against man-in-the-middle attacks. Only consider disabling cephx if your network is private! - --min_size <integer> (1 - 7) (default = 2)

-

Minimum number of available replicas per object to allow I/O

- --network <string>

-

Use specific network for all ceph related traffic

- --pg_bits <integer> (6 - 14) (default = 6)

-

Placement group bits, used to specify the default number of placement groups.

Depreacted. This setting was deprecated in recent Ceph versions.

- --size <integer> (1 - 7) (default = 3)

-

Targeted number of replicas per object

pveceph install [OPTIONS]

Install ceph related packages.

- --allow-experimental <boolean> (default = 0)

-

Allow experimental versions. Use with care!

- --repository <enterprise | no-subscription | test> (default = enterprise)

-

Ceph repository to use.

- --version <squid> (default = squid)

-

Ceph version to install.

pveceph lspools

An alias for pveceph pool ls.

pveceph mds create [OPTIONS]

Create Ceph Metadata Server (MDS)

- --hotstandby <boolean> (default = 0)

-

Determines whether a ceph-mds daemon should poll and replay the log of an active MDS. Faster switch on MDS failure, but needs more idle resources.

- --name [a-zA-Z0-9]([a-zA-Z0-9\-]*[a-zA-Z0-9])? (default = nodename)

-

The ID for the mds, when omitted the same as the nodename

pveceph mds destroy <name>

Destroy Ceph Metadata Server

- <name>: [a-zA-Z0-9]([a-zA-Z0-9\-]*[a-zA-Z0-9])?

-

The name (ID) of the mds

pveceph mgr create [OPTIONS]

Create Ceph Manager

- --id [a-zA-Z0-9]([a-zA-Z0-9\-]*[a-zA-Z0-9])?

-

The ID for the manager, when omitted the same as the nodename

pveceph mgr destroy <id>

Destroy Ceph Manager.

- <id>: [a-zA-Z0-9]([a-zA-Z0-9\-]*[a-zA-Z0-9])?

-

The ID of the manager

pveceph mon create [OPTIONS]

Create Ceph Monitor and Manager

- --mon-address <string>

-

Overwrites autodetected monitor IP address(es). Must be in the public network(s) of Ceph.

- --monid [a-zA-Z0-9]([a-zA-Z0-9\-]*[a-zA-Z0-9])?

-

The ID for the monitor, when omitted the same as the nodename

pveceph mon destroy <monid>

Destroy Ceph Monitor and Manager.

- <monid>: [a-zA-Z0-9]([a-zA-Z0-9\-]*[a-zA-Z0-9])?

-

Monitor ID

pveceph osd create <dev> [OPTIONS]

Create OSD

- <dev>: <string>

-

Block device name.

- --crush-device-class <string>

-

Set the device class of the OSD in crush.

- --db_dev <string>

-

Block device name for block.db.

- --db_dev_size <number> (1 - N) (default = bluestore_block_db_size or 10% of OSD size)

-

Size in GiB for block.db.

Requires option(s): db_dev - --encrypted <boolean> (default = 0)

-

Enables encryption of the OSD.

- --osds-per-device <integer> (1 - N)

-

OSD services per physical device. Only useful for fast NVMe devices" ." to utilize their performance better.

- --wal_dev <string>

-

Block device name for block.wal.

- --wal_dev_size <number> (0.5 - N) (default = bluestore_block_wal_size or 1% of OSD size)

-

Size in GiB for block.wal.

Requires option(s): wal_dev

pveceph osd destroy <osdid> [OPTIONS]

Destroy OSD

- <osdid>: <integer>

-

OSD ID

- --cleanup <boolean> (default = 0)

-

If set, we remove partition table entries.

pveceph osd details <osdid> [OPTIONS] [FORMAT_OPTIONS]

Get OSD details.

- <osdid>: <string>

-

ID of the OSD

- --verbose <boolean> (default = 0)

-

Print verbose information, same as json-pretty output format.

pveceph pool create <name> [OPTIONS]

Create Ceph pool

- <name>: (?^:^[^:/\s]+$)

-

The name of the pool. It must be unique.

- --add_storages <boolean> (default = 0; for erasure coded pools: 1)

-

Configure VM and CT storage using the new pool.

- --application <cephfs | rbd | rgw> (default = rbd)

-

The application of the pool.

- --crush_rule <string>

-

The rule to use for mapping object placement in the cluster.

- --erasure-coding k=<integer> ,m=<integer> [,device-class=<class>] [,failure-domain=<domain>] [,profile=<profile>]

-

Create an erasure coded pool for RBD with an accompaning replicated pool for metadata storage. With EC, the common ceph options size, min_size and crush_rule parameters will be applied to the metadata pool.

- --min_size <integer> (1 - 7) (default = 2)

-

Minimum number of replicas per object

- --pg_autoscale_mode <off | on | warn> (default = warn)

-

The automatic PG scaling mode of the pool.

- --pg_num <integer> (1 - 32768) (default = 128)

-

Number of placement groups.

- --pg_num_min <integer> (-N - 32768)

-

Minimal number of placement groups.

- --size <integer> (1 - 7) (default = 3)

-

Number of replicas per object

- --target_size ^(\d+(\.\d+)?)([KMGT])?$

-

The estimated target size of the pool for the PG autoscaler.

- --target_size_ratio <number>

-

The estimated target ratio of the pool for the PG autoscaler.

pveceph pool destroy <name> [OPTIONS]

Destroy pool

- <name>: <string>

-

The name of the pool. It must be unique.

- --force <boolean> (default = 0)

-

If true, destroys pool even if in use

- --remove_ecprofile <boolean> (default = 1)

-

Remove the erasure code profile. Defaults to true, if applicable.

- --remove_storages <boolean> (default = 0)

-

Remove all pveceph-managed storages configured for this pool

pveceph pool get <name> [OPTIONS] [FORMAT_OPTIONS]

Show the current pool status.

- <name>: <string>

-

The name of the pool. It must be unique.

- --verbose <boolean> (default = 0)

-

If enabled, will display additional data(eg. statistics).

pveceph pool ls [FORMAT_OPTIONS]

List all pools and their settings (which are settable by the POST/PUT endpoints).

pveceph pool set <name> [OPTIONS]

Change POOL settings

- <name>: (?^:^[^:/\s]+$)

-

The name of the pool. It must be unique.

- --application <cephfs | rbd | rgw>

-

The application of the pool.

- --crush_rule <string>

-

The rule to use for mapping object placement in the cluster.

- --min_size <integer> (1 - 7)

-

Minimum number of replicas per object

- --pg_autoscale_mode <off | on | warn>

-

The automatic PG scaling mode of the pool.

- --pg_num <integer> (1 - 32768)

-

Number of placement groups.

- --pg_num_min <integer> (-N - 32768)

-

Minimal number of placement groups.

- --size <integer> (1 - 7)

-

Number of replicas per object

- --target_size ^(\d+(\.\d+)?)([KMGT])?$

-

The estimated target size of the pool for the PG autoscaler.

- --target_size_ratio <number>

-

The estimated target ratio of the pool for the PG autoscaler.

pveceph purge [OPTIONS]

Destroy ceph related data and configuration files.

- --crash <boolean>

-

Additionally purge Ceph crash logs, /var/lib/ceph/crash.

- --logs <boolean>

-

Additionally purge Ceph logs, /var/log/ceph.

pveceph start [OPTIONS]

Start ceph services.

- --service (ceph|mon|mds|osd|mgr)(\.[a-zA-Z0-9]([a-zA-Z0-9\-]*[a-zA-Z0-9])?)? (default = ceph.target)

-

Ceph service name.

pveceph status

Get Ceph Status.

pveceph stop [OPTIONS]

Stop ceph services.

- --service (ceph|mon|mds|osd|mgr)(\.[a-zA-Z0-9]([a-zA-Z0-9\-]*[a-zA-Z0-9])?)? (default = ceph.target)

-

Ceph service name.

DESCRIPTION

Proxmox VE unifies your compute and storage systems, that is, you can use the same physical nodes within a cluster for both computing (processing VMs and containers) and replicated storage. The traditional silos of compute and storage resources can be wrapped up into a single hyper-converged appliance. Separate storage networks (SANs) and connections via network attached storage (NAS) disappear. With the integration of Ceph, an open source software-defined storage platform, Proxmox VE has the ability to run and manage Ceph storage directly on the hypervisor nodes.

Ceph is a distributed object store and file system designed to provide excellent performance, reliability and scalability.

-

Easy setup and management via CLI and GUI

-

Thin provisioning

-

Snapshot support

-

Self healing

-

Scalable to the exabyte level

-

Provides block, file system, and object storage

-

Setup pools with different performance and redundancy characteristics

-

Data is replicated, making it fault tolerant

-

Runs on commodity hardware

-

No need for hardware RAID controllers

-

Open source

For small to medium-sized deployments, it is possible to install a Ceph server for using RADOS Block Devices (RBD) or CephFS directly on your Proxmox VE cluster nodes (see Ceph RADOS Block Devices (RBD)). Recent hardware has a lot of CPU power and RAM, so running storage services and virtual guests on the same node is possible.

To simplify management, Proxmox VE provides you native integration to install and manage Ceph services on Proxmox VE nodes either via the built-in web interface, or using the pveceph command line tool.

Terminology

-

Ceph Monitor (ceph-mon, or MON)

-

Ceph Manager (ceph-mgr, or MGS)

-

Ceph Metadata Service (ceph-mds, or MDS)

-

Ceph Object Storage Daemon (ceph-osd, or OSD)

Recommendations for a Healthy Ceph Cluster

To build a hyper-converged Proxmox + Ceph Cluster, you must use at least three (preferably) identical servers for the setup.

Check also the recommendations from Ceph’s website.

|

|

The recommendations below should be seen as a rough guidance for choosing hardware. Therefore, it is still essential to adapt it to your specific needs. You should test your setup and monitor health and performance continuously. |

Ceph services can be classified into two categories:

-

Intensive CPU usage, benefiting from high CPU base frequencies and multiple cores. Members of that category are:

-

Object Storage Daemon (OSD) services

-

Meta Data Service (MDS) used for CephFS

-

-

Moderate CPU usage, not needing multiple CPU cores. These are:

-

Monitor (MON) services

-

Manager (MGR) services

-

As a simple rule of thumb, you should assign at least one CPU core (or thread) to each Ceph service to provide the minimum resources required for stable and durable Ceph performance.

For example, if you plan to run a Ceph monitor, a Ceph manager and 6 Ceph OSDs services on a node you should reserve 8 CPU cores purely for Ceph when targeting basic and stable performance.

Note that OSDs CPU usage depend mostly from the disks performance. The higher the possible IOPS (IO Operations per Second) of a disk, the more CPU can be utilized by a OSD service. For modern enterprise SSD disks, like NVMe’s that can permanently sustain a high IOPS load over 100’000 with sub millisecond latency, each OSD can use multiple CPU threads, e.g., four to six CPU threads utilized per NVMe backed OSD is likely for very high performance disks.

Especially in a hyper-converged setup, the memory consumption needs to be carefully planned out and monitored. In addition to the predicted memory usage of virtual machines and containers, you must also account for having enough memory available for Ceph to provide excellent and stable performance.

As a rule of thumb, for roughly 1 TiB of data, 1 GiB of memory will be used by an OSD. While the usage might be less under normal conditions, it will use most during critical operations like recovery, re-balancing or backfilling. That means that you should avoid maxing out your available memory already on normal operation, but rather leave some headroom to cope with outages.

The OSD service itself will use additional memory. The Ceph BlueStore backend of the daemon requires by default 3-5 GiB of memory (adjustable).

We recommend a network bandwidth of at least 10 Gbps, or more, to be used exclusively for Ceph traffic. A meshed network setup [4] is also an option for three to five node clusters, if there are no 10+ Gbps switches available.

|

|

The volume of traffic, especially during recovery, will interfere with other services on the same network, especially the latency sensitive Proxmox VE corosync cluster stack can be affected, resulting in possible loss of cluster quorum. Moving the Ceph traffic to dedicated and physical separated networks will avoid such interference, not only for corosync, but also for the networking services provided by any virtual guests. |

For estimating your bandwidth needs, you need to take the performance of your disks into account.. While a single HDD might not saturate a 1 Gb link, multiple HDD OSDs per node can already saturate 10 Gbps too. If modern NVMe-attached SSDs are used, a single one can already saturate 10 Gbps of bandwidth, or more. For such high-performance setups we recommend at least a 25 Gpbs, while even 40 Gbps or 100+ Gbps might be required to utilize the full performance potential of the underlying disks.

If unsure, we recommend using three (physical) separate networks for high-performance setups:

-

one very high bandwidth (25+ Gbps) network for Ceph (internal) cluster traffic.

-

one high bandwidth (10+ Gpbs) network for Ceph (public) traffic between the ceph server and ceph client storage traffic. Depending on your needs this can also be used to host the virtual guest traffic and the VM live-migration traffic.

-

one medium bandwidth (1 Gbps) exclusive for the latency sensitive corosync cluster communication.

When planning the size of your Ceph cluster, it is important to take the recovery time into consideration. Especially with small clusters, recovery might take long. It is recommended that you use SSDs instead of HDDs in small setups to reduce recovery time, minimizing the likelihood of a subsequent failure event during recovery.

In general, SSDs will provide more IOPS than spinning disks. With this in mind, in addition to the higher cost, it may make sense to implement a class based separation of pools. Another way to speed up OSDs is to use a faster disk as a journal or DB/Write-Ahead-Log device, see creating Ceph OSDs. If a faster disk is used for multiple OSDs, a proper balance between OSD and WAL / DB (or journal) disk must be selected, otherwise the faster disk becomes the bottleneck for all linked OSDs.

Aside from the disk type, Ceph performs best with an evenly sized, and an evenly distributed amount of disks per node. For example, 4 x 500 GB disks within each node is better than a mixed setup with a single 1 TB and three 250 GB disk.

You also need to balance OSD count and single OSD capacity. More capacity allows you to increase storage density, but it also means that a single OSD failure forces Ceph to recover more data at once.

As Ceph handles data object redundancy and multiple parallel writes to disks (OSDs) on its own, using a RAID controller normally doesn’t improve performance or availability. On the contrary, Ceph is designed to handle whole disks on it’s own, without any abstraction in between. RAID controllers are not designed for the Ceph workload and may complicate things and sometimes even reduce performance, as their write and caching algorithms may interfere with the ones from Ceph.

|

|

Avoid RAID controllers. Use host bus adapter (HBA) instead. |

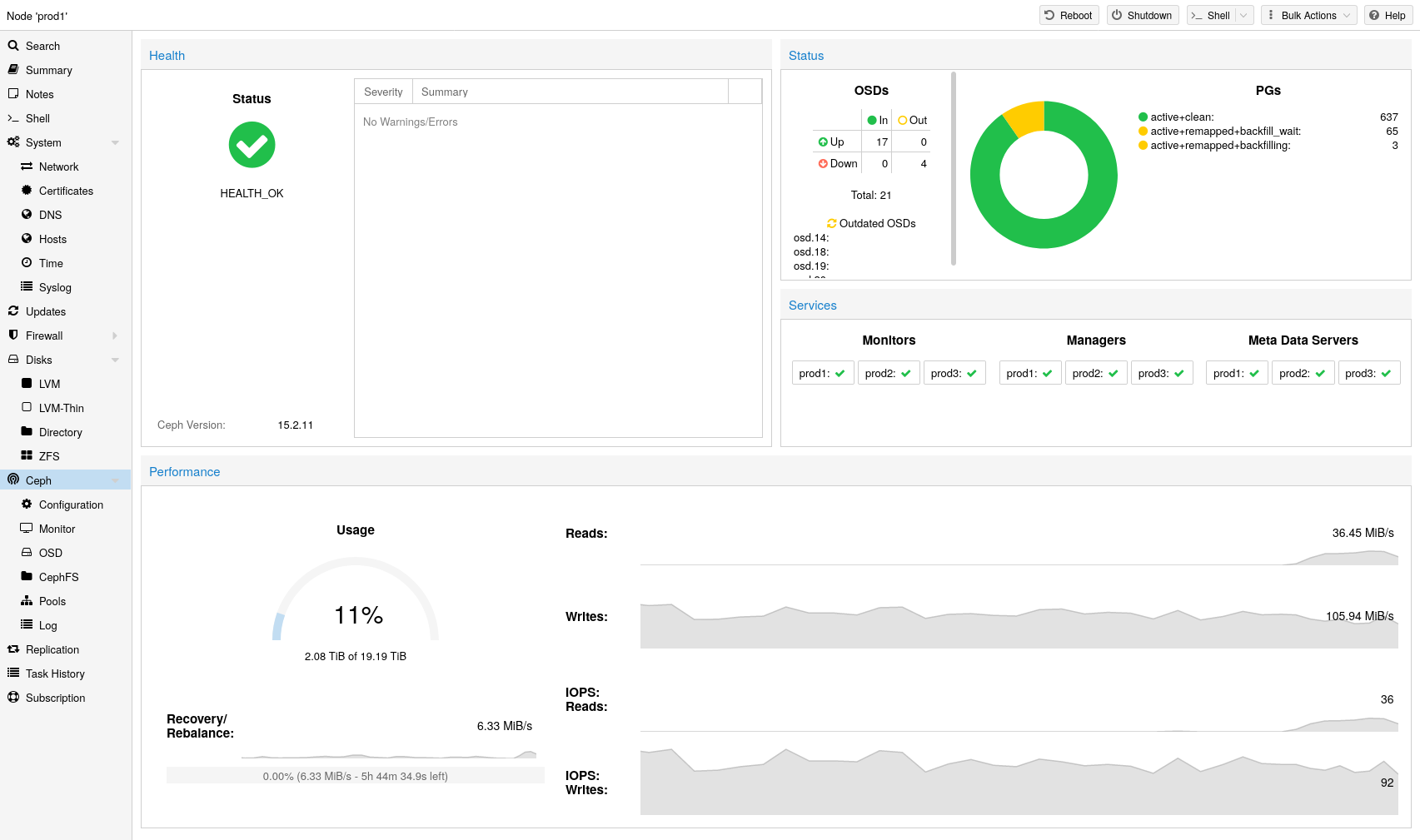

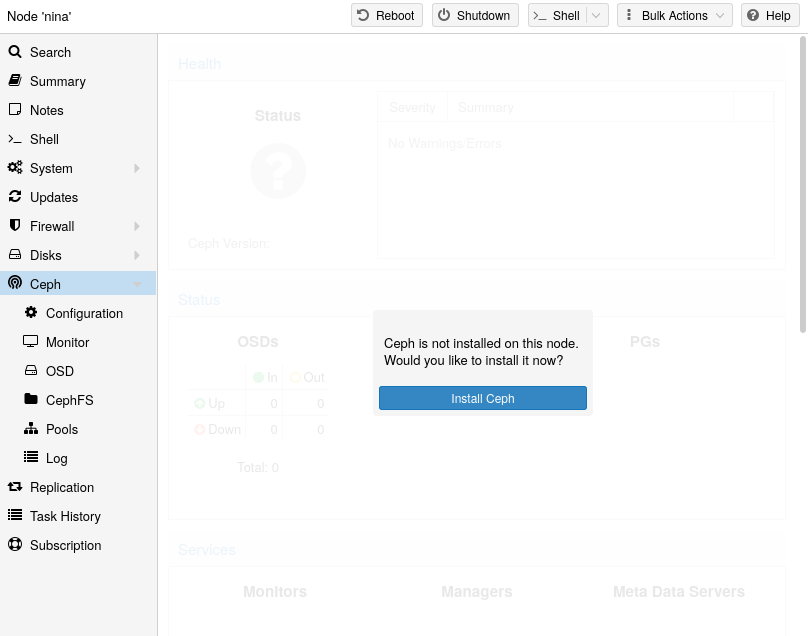

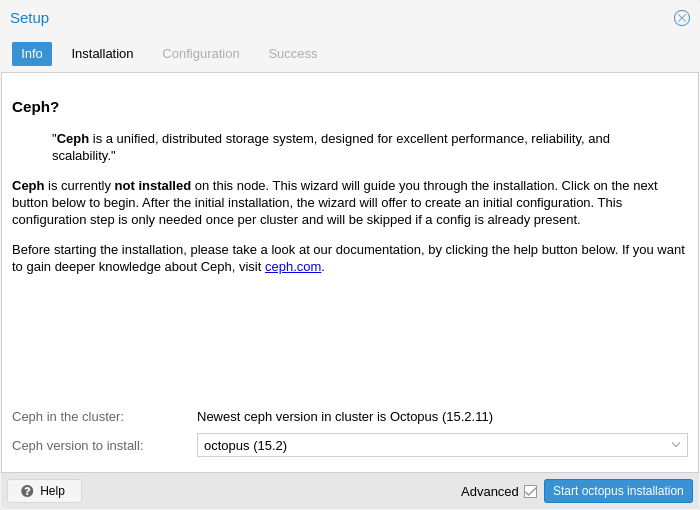

Initial Ceph Installation & Configuration

Using the Web-based Wizard

With Proxmox VE you have the benefit of an easy to use installation wizard for Ceph. Click on one of your cluster nodes and navigate to the Ceph section in the menu tree. If Ceph is not already installed, you will see a prompt offering to do so.

The wizard is divided into multiple sections, where each needs to finish successfully, in order to use Ceph.

First you need to chose which Ceph version you want to install. Prefer the one from your other nodes, or the newest if this is the first node you install Ceph.

After starting the installation, the wizard will download and install all the required packages from Proxmox VE’s Ceph repository.

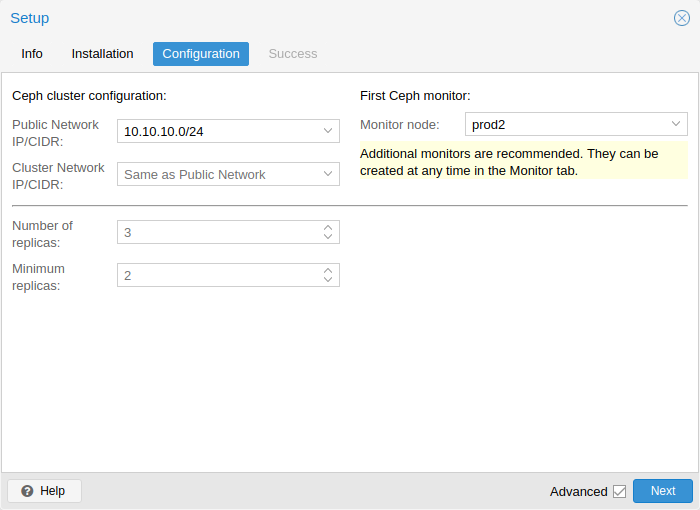

After finishing the installation step, you will need to create a configuration. This step is only needed once per cluster, as this configuration is distributed automatically to all remaining cluster members through Proxmox VE’s clustered configuration file system (pmxcfs).

The configuration step includes the following settings:

-

Public Network: This network will be used for public storage communication (e.g., for virtual machines using a Ceph RBD backed disk, or a CephFS mount), and communication between the different Ceph services. This setting is required.

Separating your Ceph traffic from the Proxmox VE cluster communication (corosync), and possible the front-facing (public) networks of your virtual guests, is highly recommended. Otherwise, Ceph’s high-bandwidth IO-traffic could cause interference with other low-latency dependent services. -

Cluster Network: Specify to separate the OSD replication and heartbeat traffic as well. This setting is optional.

Using a physically separated network is recommended, as it will relieve the Ceph public and the virtual guests network, while also providing a significant Ceph performance improvements.

The Ceph cluster network can be configured and moved to another physically separated network at a later time.

You have two more options which are considered advanced and therefore should only changed if you know what you are doing.

-

Number of replicas: Defines how often an object is replicated.

-

Minimum replicas: Defines the minimum number of required replicas for I/O to be marked as complete.

Additionally, you need to choose your first monitor node. This step is required.

That’s it. You should now see a success page as the last step, with further instructions on how to proceed. Your system is now ready to start using Ceph. To get started, you will need to create some additional monitors, OSDs and at least one pool.

The rest of this chapter will guide you through getting the most out of your Proxmox VE based Ceph setup. This includes the aforementioned tips and more, such as CephFS, which is a helpful addition to your new Ceph cluster.

CLI Installation of Ceph Packages

Alternatively to the the recommended Proxmox VE Ceph installation wizard available in the web interface, you can use the following CLI command on each node:

pveceph install

This sets up an apt package repository in /etc/apt/sources.list.d/ceph.sources and installs the required software.

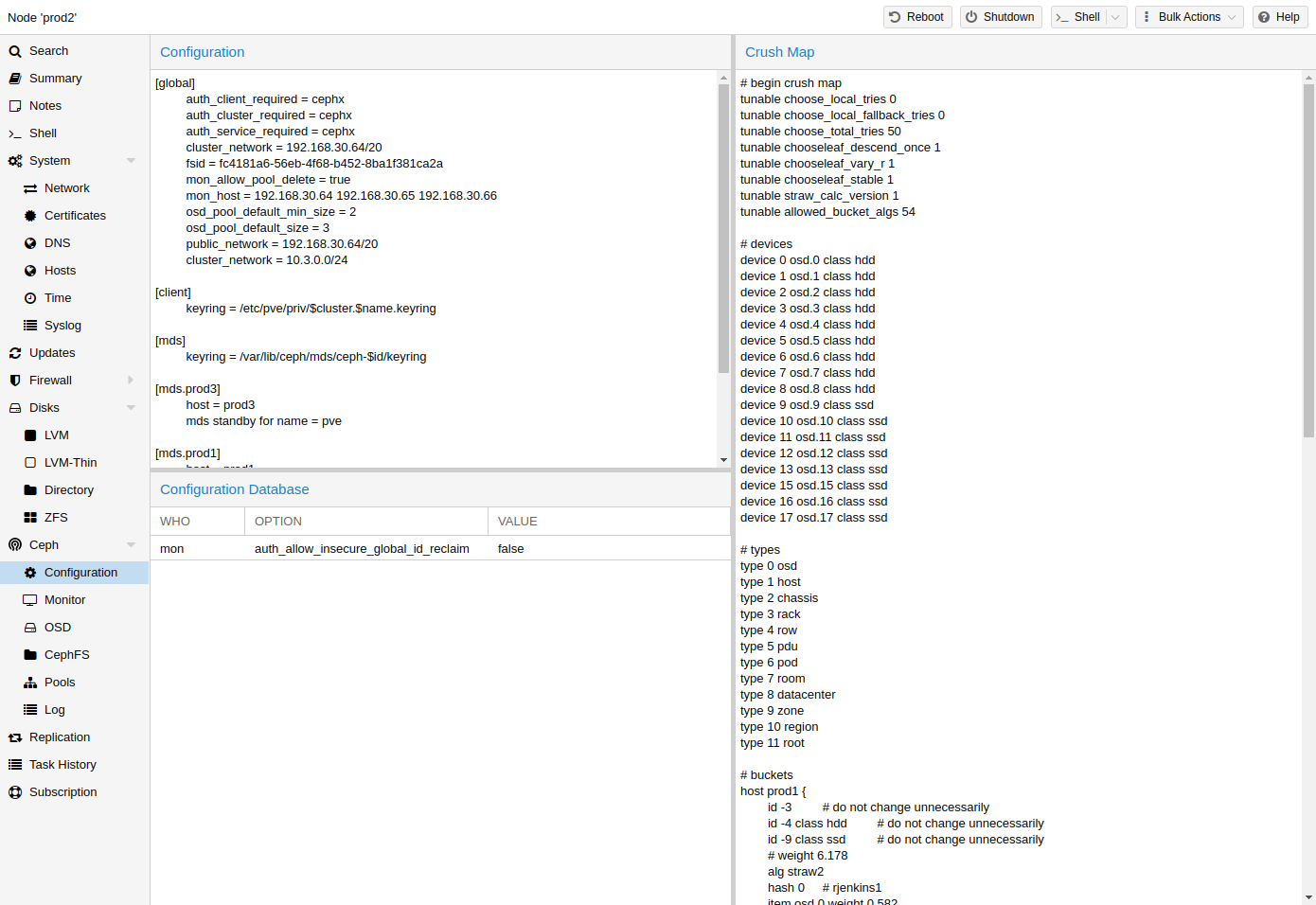

Initial Ceph configuration via CLI

Use the Proxmox VE Ceph installation wizard (recommended) or run the following command on one node:

pveceph init --network 10.10.10.0/24

This creates an initial configuration at /etc/pve/ceph.conf with a dedicated network for Ceph. This file is automatically distributed to all Proxmox VE nodes, using pmxcfs. The command also creates a symbolic link at /etc/ceph/ceph.conf, which points to that file. Thus, you can simply run Ceph commands without the need to specify a configuration file.

Ceph Monitor

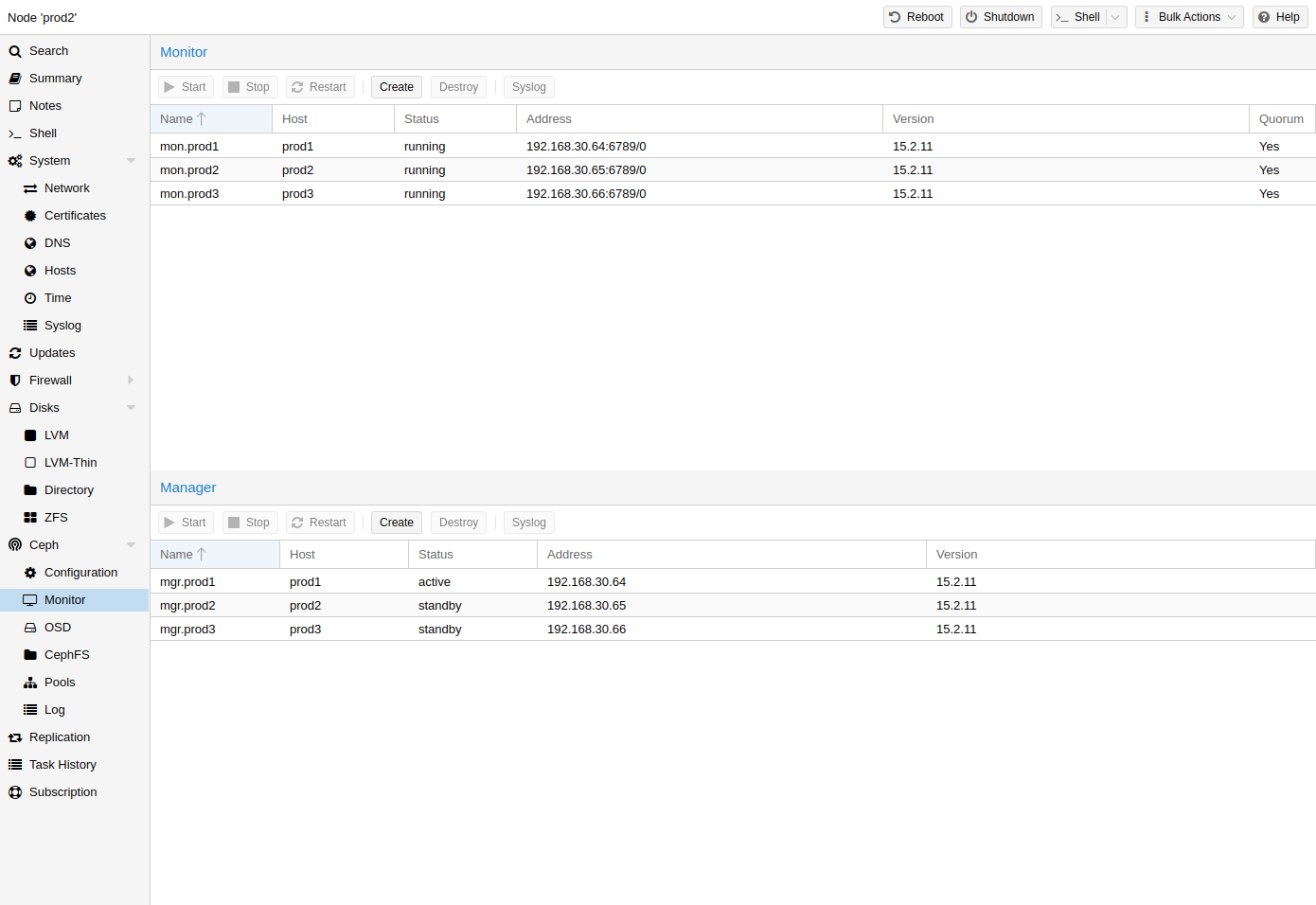

The Ceph Monitor (MON) [5] maintains a master copy of the cluster map. For high availability, you need at least 3 monitors. One monitor will already be installed if you used the installation wizard. You won’t need more than 3 monitors, as long as your cluster is small to medium-sized. Only really large clusters will require more than this.

Create Monitors

On each node where you want to place a monitor (three monitors are recommended), create one by using the Ceph → Monitor tab in the GUI or run:

pveceph mon create

Destroy Monitors

To remove a Ceph Monitor via the GUI, first select a node in the tree view and go to the Ceph → Monitor panel. Select the MON and click the Destroy button.

To remove a Ceph Monitor via the CLI, first connect to the node on which the MON is running. Then execute the following command:

pveceph mon destroy

|

|

At least three Monitors are needed for quorum. |

Ceph Manager

The Manager daemon runs alongside the monitors. It provides an interface to monitor the cluster. Since the release of Ceph luminous, at least one ceph-mgr [6] daemon is required.

Create Manager

Multiple Managers can be installed, but only one Manager is active at any given time.

pveceph mgr create

|

|

It is recommended to install the Ceph Manager on the monitor nodes. For high availability install more then one manager. |

Destroy Manager

To remove a Ceph Manager via the GUI, first select a node in the tree view and go to the Ceph → Monitor panel. Select the Manager and click the Destroy button.

To remove a Ceph Monitor via the CLI, first connect to the node on which the Manager is running. Then execute the following command:

pveceph mgr destroy

|

|

While a manager is not a hard-dependency, it is crucial for a Ceph cluster, as it handles important features like PG-autoscaling, device health monitoring, telemetry and more. |

Ceph OSDs

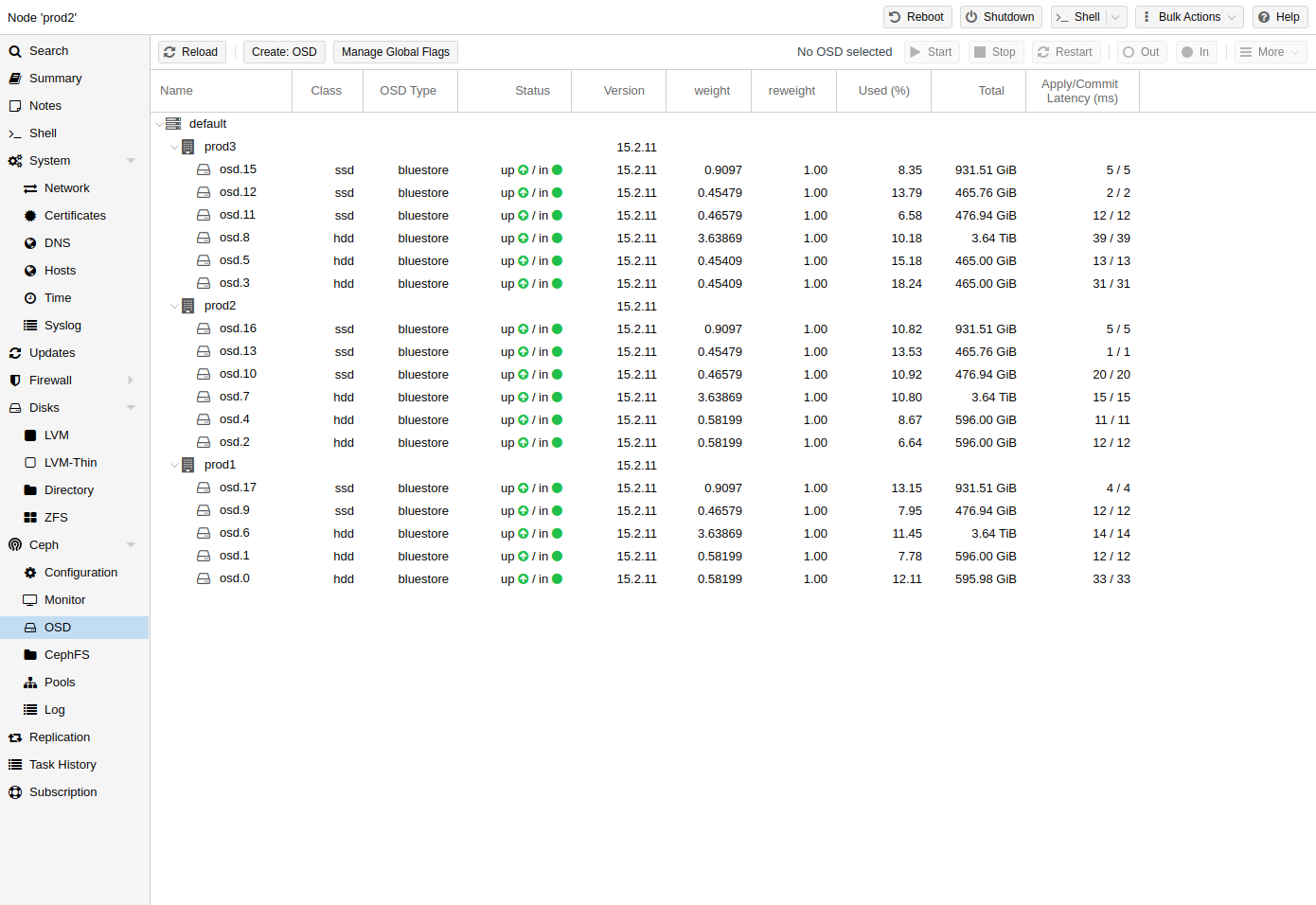

Ceph Object Storage Daemons store objects for Ceph over the network. It is recommended to use one OSD per physical disk.

Create OSDs

You can create an OSD either via the Proxmox VE web interface or via the CLI using pveceph. For example:

pveceph osd create /dev/sd[X]

|

|

We recommend a Ceph cluster with at least three nodes and at least 12 OSDs, evenly distributed among the nodes. |

If the disk was in use before (for example, for ZFS or as an OSD) you first need to zap all traces of that usage. To remove the partition table, boot sector and any other OSD leftover, you can use the following command:

ceph-volume lvm zap /dev/sd[X] --destroy

|

|

The above command will destroy all data on the disk! |

Starting with the Ceph Kraken release, a new Ceph OSD storage type was introduced called Bluestore [7]. This is the default when creating OSDs since Ceph Luminous.

pveceph osd create /dev/sd[X]

If you want to use a separate DB/WAL device for your OSDs, you can specify it through the -db_dev and -wal_dev options. The WAL is placed with the DB, if not specified separately.

pveceph osd create /dev/sd[X] -db_dev /dev/sd[Y] -wal_dev /dev/sd[Z]

You can directly choose the size of those with the -db_size and -wal_size parameters respectively. If they are not given, the following values (in order) will be used:

-

bluestore_block_{db,wal}_size from Ceph configuration…

-

… database, section osd

-

… database, section global

-

… file, section osd

-

… file, section global

-

-

10% (DB)/1% (WAL) of OSD size

|

|

The DB stores BlueStore’s internal metadata, and the WAL is BlueStore’s internal journal or write-ahead log. It is recommended to use a fast SSD or NVRAM for better performance. |

Before Ceph Luminous, Filestore was used as the default storage type for Ceph OSDs. Starting with Ceph Nautilus, Proxmox VE does not support creating such OSDs with pveceph anymore. If you still want to create filestore OSDs, use ceph-volume directly.

ceph-volume lvm create --filestore --data /dev/sd[X] --journal /dev/sd[Y]

Destroy OSDs

If you experience problems with an OSD or its disk, try to troubleshoot them first to decide if a replacement is needed.

To destroy an OSD, navigate to the <Node> → Ceph → OSD panel or use the mentioned CLI commands on the node where the OSD is located.

-

Make sure the cluster has enough space to handle the removal of the OSD. In the Ceph → OSD panel,if the to-be destroyed OSD is still up and in (non-zero value at AVAIL), make sure that all OSDs have their Used (%) value well below the nearfull_ratio of default 85%.

This way you can reduce the risk from the upcoming rebalancing, which may cause OSDs to run full and thereby blocking I/O on Ceph pools.

Use the following command to get the same information on the CLI:

ceph osd df tree

-

If the to-be destroyed OSD is not out yet, select the OSD and click on Out. This will exclude it from data distribution and start a rebalance.

The following command does the same:

ceph osd out <id>

-

If you can, wait until Ceph has finished the rebalance to always have enough replicas. The OSD will be empty; once it is, it will show 0 PGs.

-

Click on Stop. If stopping is not safe yet, a warning will appear, and you should click on Cancel. Try it again in a few moments.

The following commands can be used to check if it is safe to stop and stop the OSD:

ceph osd ok-to-stop <id> pveceph stop --service osd.<id>

-

Finally:

To remove the OSD from Ceph and delete all disk data, first click on More → Destroy. Enable the cleanup option to clean up the partition table and other structures. This makes it possible to immediately reuse the disk in Proxmox VE. Then, click on Remove.

The CLI command to destroy the OSD is:

pveceph osd destroy <id> [--cleanup]

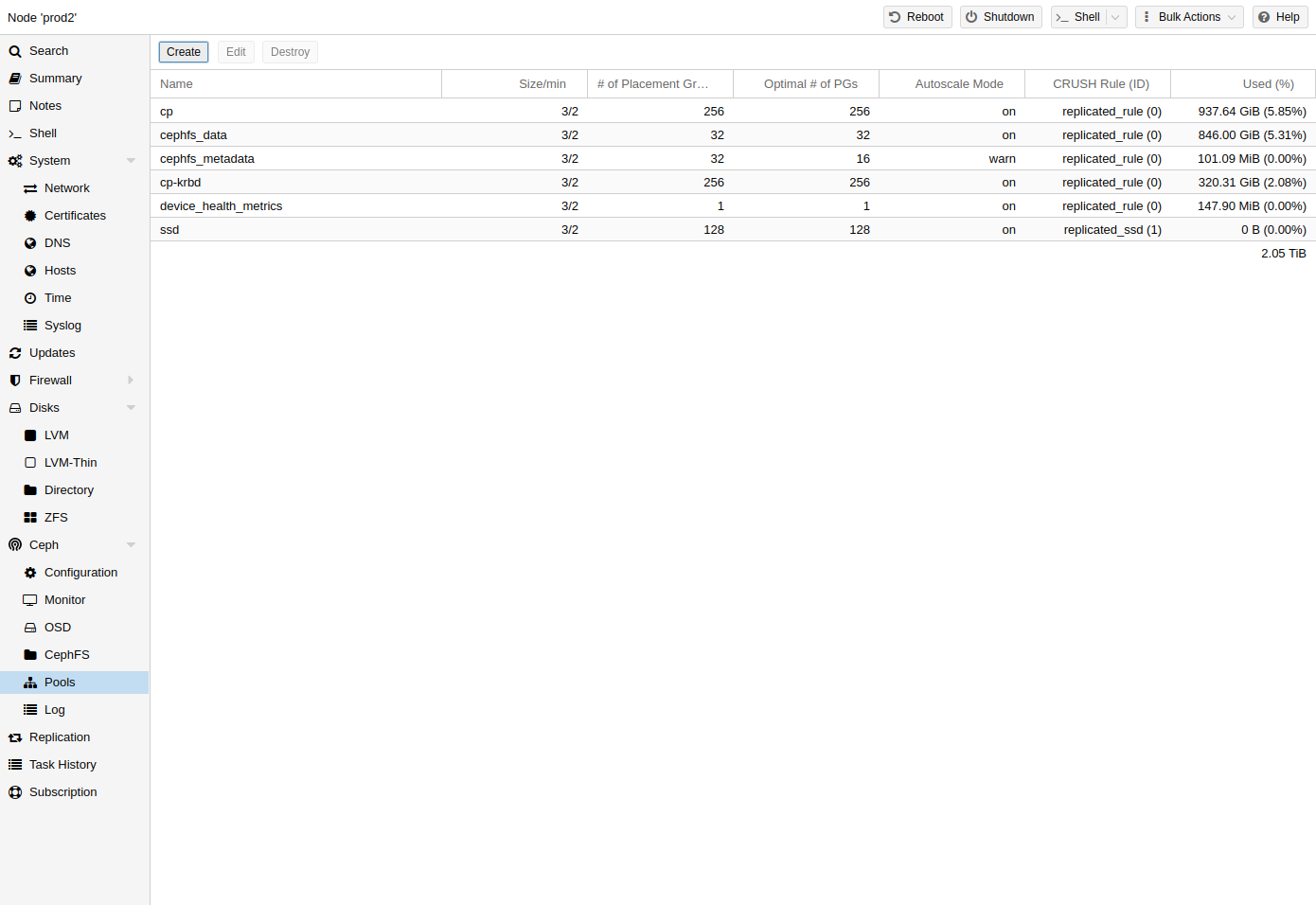

Ceph Pools

A pool is a logical group for storing objects. It holds a collection of objects, known as Placement Groups (PG, pg_num).

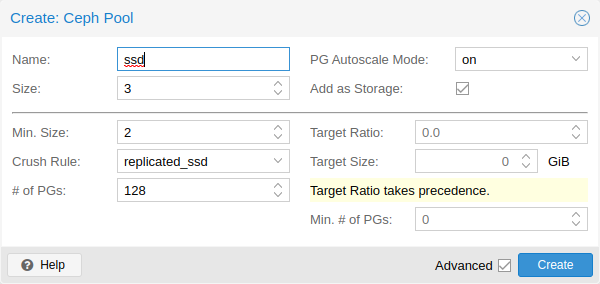

Create and Edit Pools

You can create and edit pools from the command line or the web interface of any Proxmox VE host under Ceph → Pools.

When no options are given, we set a default of 128 PGs, a size of 3 replicas and a min_size of 2 replicas, to ensure no data loss occurs if any OSD fails.

|

|

Do not set a min_size of 1. A replicated pool with min_size of 1 allows I/O on an object when it has only 1 replica, which could lead to data loss, incomplete PGs or unfound objects. |

It is advised that you either enable the PG-Autoscaler or calculate the PG number based on your setup. You can find the formula and the PG calculator [8] online. From Ceph Nautilus onward, you can change the number of PGs [9] after the setup.

The PG autoscaler [10] can automatically scale the PG count for a pool in the background. Setting the Target Size or Target Ratio advanced parameters helps the PG-Autoscaler to make better decisions.

pveceph pool create <pool-name> --add_storages

|

|

If you would also like to automatically define a storage for your pool, keep the ‘Add as Storage’ checkbox checked in the web interface, or use the command-line option --add_storages at pool creation. |

Pool Options

- Name

-

The name of the pool. This must be unique and can’t be changed afterwards.

- Size

-

The number of replicas per object. Ceph always tries to have this many copies of an object. Default: 3.

- PG Autoscale Mode

-

The automatic PG scaling mode [10] of the pool. If set to warn, it produces a warning message when a pool has a non-optimal PG count. Default: warn.

- Add as Storage

-

Configure a VM or container storage using the new pool. Default: true (only visible on creation).

- Min. Size

-

The minimum number of replicas per object. Ceph will reject I/O on the pool if a PG has less than this many replicas. Default: 2.

- Crush Rule

-

The rule to use for mapping object placement in the cluster. These rules define how data is placed within the cluster. See Ceph CRUSH & device classes for information on device-based rules.

- # of PGs

-

The number of placement groups [9] that the pool should have at the beginning. Default: 128.

- Target Ratio

-

The ratio of data that is expected in the pool. The PG autoscaler uses the ratio relative to other ratio sets. It takes precedence over the target size if both are set.

- Target Size

-

The estimated amount of data expected in the pool. The PG autoscaler uses this size to estimate the optimal PG count.

- Min. # of PGs

-

The minimum number of placement groups. This setting is used to fine-tune the lower bound of the PG count for that pool. The PG autoscaler will not merge PGs below this threshold.

Further information on Ceph pool handling can be found in the Ceph pool operation [11] manual.

Erasure Coded Pools

Erasure coding (EC) is a form of ‘forward error correction’ codes that allows to recover from a certain amount of data loss. Erasure coded pools can offer more usable space compared to replicated pools, but they do that for the price of performance.

For comparison: in classic, replicated pools, multiple replicas of the data are stored (size) while in erasure coded pool, data is split into k data chunks with additional m coding (checking) chunks. Those coding chunks can be used to recreate data should data chunks be missing.

The number of coding chunks, m, defines how many OSDs can be lost without losing any data. The total amount of objects stored is k + m.

Creating EC Pools

Erasure coded (EC) pools can be created with the pveceph CLI tooling. Planning an EC pool needs to account for the fact, that they work differently than replicated pools.

The default min_size of an EC pool depends on the m parameter. If m = 1, the min_size of the EC pool will be k. The min_size will be k + 1 if m > 1. The Ceph documentation recommends a conservative min_size of k + 2 [12].

If there are less than min_size OSDs available, any IO to the pool will be blocked until there are enough OSDs available again.

|

|

When planning an erasure coded pool, keep an eye on the min_size as it defines how many OSDs need to be available. Otherwise, IO will be blocked. |

For example, an EC pool with k = 2 and m = 1 will have size = 3, min_size = 2 and will stay operational if one OSD fails. If the pool is configured with k = 2, m = 2, it will have a size = 4 and min_size = 3 and stay operational if one OSD is lost.

To create a new EC pool, run the following command:

pveceph pool create <pool-name> --erasure-coding k=2,m=1

Optional parameters are failure-domain and device-class. If you need to change any EC profile settings used by the pool, you will have to create a new pool with a new profile.

This will create a new EC pool plus the needed replicated pool to store the RBD omap and other metadata. In the end, there will be a <pool name>-data and <pool name>-metadata pool. The default behavior is to create a matching storage configuration as well. If that behavior is not wanted, you can disable it by providing the --add_storages 0 parameter. When configuring the storage configuration manually, keep in mind that the data-pool parameter needs to be set. Only then will the EC pool be used to store the data objects. For example:

|

|

The optional parameters --size, --min_size and --crush_rule will be used for the replicated metadata pool, but not for the erasure coded data pool. If you need to change the min_size on the data pool, you can do it later. The size and crush_rule parameters cannot be changed on erasure coded pools. |

If there is a need to further customize the EC profile, you can do so by creating it with the Ceph tools directly [13], and specify the profile to use with the profile parameter.

For example:

pveceph pool create <pool-name> --erasure-coding profile=<profile-name>

Adding EC Pools as Storage

You can add an already existing EC pool as storage to Proxmox VE. It works the same way as adding an RBD pool but requires the extra data-pool option.

pvesm add rbd <storage-name> --pool <replicated-pool> --data-pool <ec-pool>

|

|

Do not forget to add the keyring and monhost option for any external Ceph clusters, not managed by the local Proxmox VE cluster. |

Destroy Pools

To destroy a pool via the GUI, select a node in the tree view and go to the Ceph → Pools panel. Select the pool to destroy and click the Destroy button. To confirm the destruction of the pool, you need to enter the pool name.

Run the following command to destroy a pool. Specify the -remove_storages to also remove the associated storage.

pveceph pool destroy <name>

|

|

Pool deletion runs in the background and can take some time. You will notice the data usage in the cluster decreasing throughout this process. |

PG Autoscaler

The PG autoscaler allows the cluster to consider the amount of (expected) data stored in each pool and to choose the appropriate pg_num values automatically. It is available since Ceph Nautilus.

You may need to activate the PG autoscaler module before adjustments can take effect.

ceph mgr module enable pg_autoscalerThe autoscaler is configured on a per pool basis and has the following modes:

|

warn

|

A health warning is issued if the suggested pg_num value differs too much from the current value. |

|

on

|

The pg_num is adjusted automatically with no need for any manual interaction. |

|

off

|

No automatic pg_num adjustments are made, and no warning will be issued if the PG count is not optimal. |

The scaling factor can be adjusted to facilitate future data storage with the target_size, target_size_ratio and the pg_num_min options.

|

|

By default, the autoscaler considers tuning the PG count of a pool if it is off by a factor of 3. This will lead to a considerable shift in data placement and might introduce a high load on the cluster. |

You can find a more in-depth introduction to the PG autoscaler on Ceph’s Blog - New in Nautilus: PG merging and autotuning.

Ceph CRUSH & Device Classes

The [14] (Controlled Replication Under Scalable Hashing) algorithm is at the foundation of Ceph.

CRUSH calculates where to store and retrieve data from. This has the advantage that no central indexing service is needed. CRUSH works using a map of OSDs, buckets (device locations) and rulesets (data replication) for pools.

|

|

Further information can be found in the Ceph documentation, under the section CRUSH map [15]. |

This map can be altered to reflect different replication hierarchies. The object replicas can be separated (e.g., failure domains), while maintaining the desired distribution.

A common configuration is to use different classes of disks for different Ceph pools. For this reason, Ceph introduced device classes with luminous, to accommodate the need for easy ruleset generation.

The device classes can be seen in the ceph osd tree output. These classes represent their own root bucket, which can be seen with the below command.

ceph osd crush tree --show-shadow

Example output form the above command:

ID CLASS WEIGHT TYPE NAME -16 nvme 2.18307 root default~nvme -13 nvme 0.72769 host sumi1~nvme 12 nvme 0.72769 osd.12 -14 nvme 0.72769 host sumi2~nvme 13 nvme 0.72769 osd.13 -15 nvme 0.72769 host sumi3~nvme 14 nvme 0.72769 osd.14 -1 7.70544 root default -3 2.56848 host sumi1 12 nvme 0.72769 osd.12 -5 2.56848 host sumi2 13 nvme 0.72769 osd.13 -7 2.56848 host sumi3 14 nvme 0.72769 osd.14

To instruct a pool to only distribute objects on a specific device class, you first need to create a ruleset for the device class:

ceph osd crush rule create-replicated <rule-name> <root> <failure-domain> <class>

<rule-name> |

name of the rule, to connect with a pool (seen in GUI & CLI) |

<root> |

which crush root it should belong to (default Ceph root "default") |

<failure-domain> |

at which failure-domain the objects should be distributed (usually host) |

<class> |

what type of OSD backing store to use (e.g., nvme, ssd, hdd) |

Once the rule is in the CRUSH map, you can tell a pool to use the ruleset.

ceph osd pool set <pool-name> crush_rule <rule-name>

|

|

If the pool already contains objects, these must be moved accordingly. Depending on your setup, this may introduce a big performance impact on your cluster. As an alternative, you can create a new pool and move disks separately. |

Ceph Client

Following the setup from the previous sections, you can configure Proxmox VE to use such pools to store VM and Container images. Simply use the GUI to add a new RBD storage (see section Ceph RADOS Block Devices (RBD)).

You also need to copy the keyring to a predefined location for an external Ceph cluster. If Ceph is installed on the Proxmox nodes itself, then this will be done automatically.

|

|

The filename needs to be <storage_id> + `.keyring, where <storage_id> is the expression after rbd: in /etc/pve/storage.cfg. In the following example, my-ceph-storage is the <storage_id>: |

mkdir /etc/pve/priv/ceph cp /etc/ceph/ceph.client.admin.keyring /etc/pve/priv/ceph/my-ceph-storage.keyring

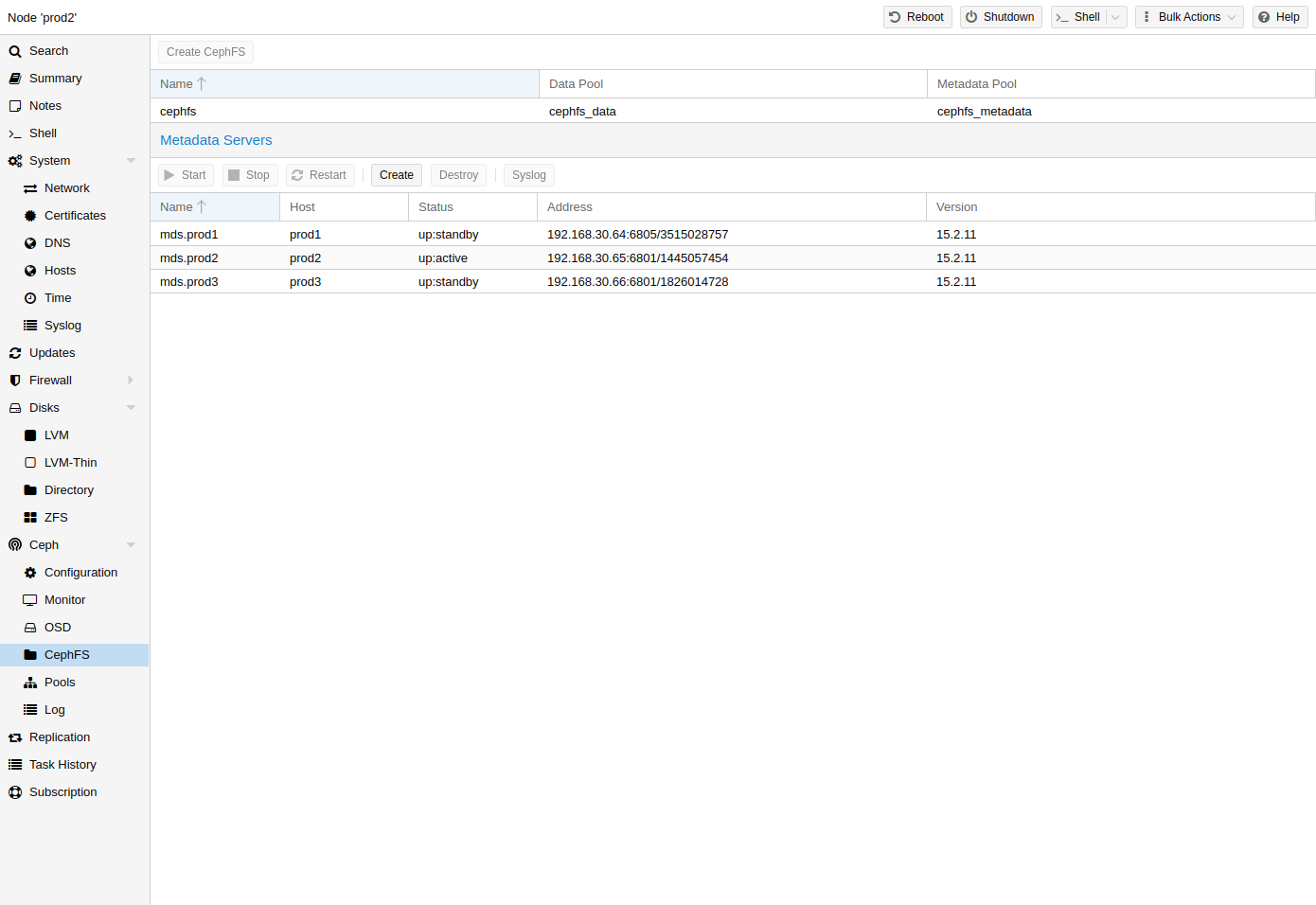

CephFS

Ceph also provides a filesystem, which runs on top of the same object storage as RADOS block devices do. A Metadata Server (MDS) is used to map the RADOS backed objects to files and directories, allowing Ceph to provide a POSIX-compliant, replicated filesystem. This allows you to easily configure a clustered, highly available, shared filesystem. Ceph’s Metadata Servers guarantee that files are evenly distributed over the entire Ceph cluster. As a result, even cases of high load will not overwhelm a single host, which can be an issue with traditional shared filesystem approaches, for example NFS.

Proxmox VE supports both creating a hyper-converged CephFS and using an existing CephFS as storage to save backups, ISO files, and container templates.

Metadata Server (MDS)

CephFS needs at least one Metadata Server to be configured and running, in order to function. You can create an MDS through the Proxmox VE web GUI’s Node -> CephFS panel or from the command line with:

pveceph mds create

Multiple metadata servers can be created in a cluster, but with the default settings, only one can be active at a time. If an MDS or its node becomes unresponsive (or crashes), another standby MDS will get promoted to active. You can speed up the handover between the active and standby MDS by using the hotstandby parameter option on creation, or if you have already created it you may set/add:

mds standby replay = true

in the respective MDS section of /etc/pve/ceph.conf. With this enabled, the specified MDS will remain in a warm state, polling the active one, so that it can take over faster in case of any issues.

|

|

This active polling will have an additional performance impact on your system and the active MDS. |

Since Luminous (12.2.x) you can have multiple active metadata servers running at once, but this is normally only useful if you have a high amount of clients running in parallel. Otherwise the MDS is rarely the bottleneck in a system. If you want to set this up, please refer to the Ceph documentation. [16]

Create CephFS

With Proxmox VE’s integration of CephFS, you can easily create a CephFS using the web interface, CLI or an external API interface. Some prerequisites are required for this to work:

-

Install Ceph packages - if this was already done some time ago, you may want to rerun it on an up-to-date system to ensure that all CephFS related packages get installed.

After this is complete, you can simply create a CephFS through either the Web GUI’s Node -> CephFS panel or the command-line tool pveceph, for example:

pveceph fs create --pg_num 128 --add-storageThis creates a CephFS named cephfs, using a pool for its data named cephfs_data with 128 placement groups and a pool for its metadata named cephfs_metadata with one quarter of the data pool’s placement groups (32). Check the Proxmox VE managed Ceph pool chapter or visit the Ceph documentation for more information regarding an appropriate placement group number (pg_num) for your setup [9]. Additionally, the --add-storage parameter will add the CephFS to the Proxmox VE storage configuration after it has been created successfully.

Destroy CephFS

|

|

Destroying a CephFS will render all of its data unusable. This cannot be undone! |

To completely and gracefully remove a CephFS, the following steps are necessary:

-

Disconnect every non-Proxmox VE client (e.g. unmount the CephFS in guests).

-

Disable all related CephFS Proxmox VE storage entries (to prevent it from being automatically mounted).

-

Remove all used resources from guests (e.g. ISOs) that are on the CephFS you want to destroy.

-

Unmount the CephFS storages on all cluster nodes manually with

umount /mnt/pve/<STORAGE-NAME>

Where <STORAGE-NAME> is the name of the CephFS storage in your Proxmox VE.

-

Now make sure that no metadata server (MDS) is running for that CephFS, either by stopping or destroying them. This can be done through the web interface or via the command-line interface, for the latter you would issue the following command:

pveceph stop --service mds.NAMEto stop them, or

pveceph mds destroy NAME

to destroy them.

Note that standby servers will automatically be promoted to active when an active MDS is stopped or removed, so it is best to first stop all standby servers.

-

Now you can destroy the CephFS with

pveceph fs destroy NAME --remove-storages --remove-pools

This will automatically destroy the underlying Ceph pools as well as remove the storages from pve config.

After these steps, the CephFS should be completely removed and if you have other CephFS instances, the stopped metadata servers can be started again to act as standbys.

Ceph Maintenance

Replace OSDs

With the following steps you can replace the disk of an OSD, which is one of the most common maintenance tasks in Ceph. If there is a problem with an OSD while its disk still seems to be healthy, read the troubleshooting section first.

-

If the disk failed, get a recommended replacement disk of the same type and size.

-

Destroy the OSD in question.

-

Detach the old disk from the server and attach the new one.

-

Create the OSD again.

-

After automatic rebalancing, the cluster status should switch back to HEALTH_OK. Any still listed crashes can be acknowledged by running the following command:

ceph crash archive-all

Trim/Discard

It is good practice to run fstrim (discard) regularly on VMs and containers. This releases data blocks that the filesystem isn’t using anymore. It reduces data usage and resource load. Most modern operating systems issue such discard commands to their disks regularly. You only need to ensure that the Virtual Machines enable the disk discard option.

Scrub & Deep Scrub

Ceph ensures data integrity by scrubbing placement groups. Ceph checks every object in a PG for its health. There are two forms of Scrubbing, daily cheap metadata checks and weekly deep data checks. The weekly deep scrub reads the objects and uses checksums to ensure data integrity. If a running scrub interferes with business (performance) needs, you can adjust the time when scrubs [17] are executed.

Shutdown Proxmox VE + Ceph HCI Cluster

To shut down the whole Proxmox VE + Ceph cluster, first stop all Ceph clients. These will mainly be VMs and containers. If you have additional clients that might access a Ceph FS or an installed RADOS GW, stop these as well. Highly available guests will switch their state to stopped when powered down via the Proxmox VE tooling.

Once all clients, VMs and containers are off or not accessing the Ceph cluster anymore, verify that the Ceph cluster is in a healthy state. Either via the Web UI or the CLI:

ceph -s

In order to not cause any recovery during the shut down and later power on phases, enable the noout OSD flag. Either in the Ceph → OSD panel behind the Manage Global Flags button or the CLI:

ceph osd set nooutStart powering down your nodes without a monitor (MON). After these nodes are down, continue by shutting down nodes with monitors on them.

When powering on the cluster, start the nodes with monitors (MONs) first. Once all nodes are up and running, confirm that all Ceph services are up and running. In the end, the only warning you should see for Ceph is that the noout flag is still set. You can disable it via the web UI or via the CLI:

ceph osd unset nooutYou can now start up the guests. Highly available guests will change their state to started when they power on.

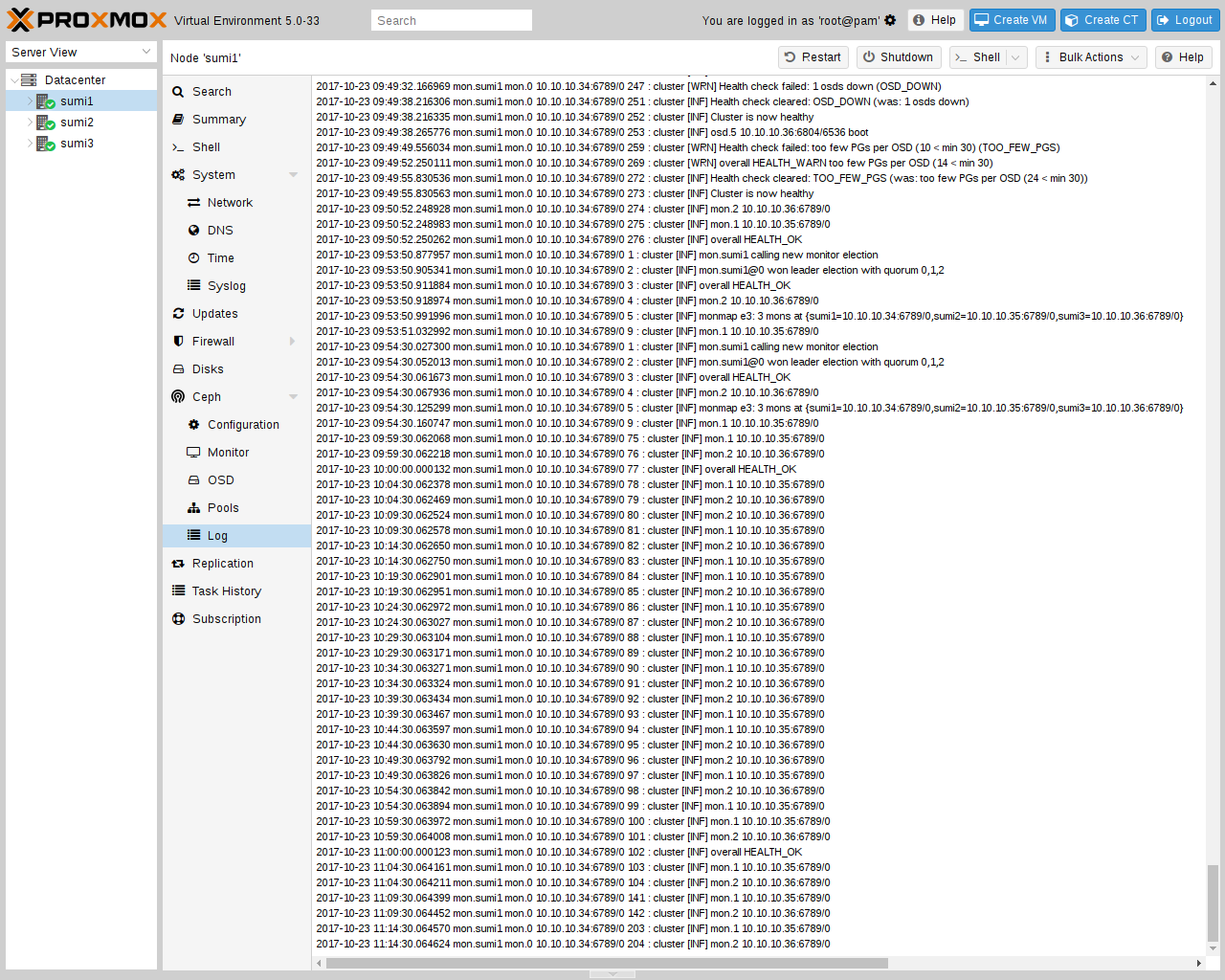

Ceph Monitoring and Troubleshooting

It is important to continuously monitor the health of a Ceph deployment from the beginning, either by using the Ceph tools or by accessing the status through the Proxmox VE API.

The following Ceph commands can be used to see if the cluster is healthy (HEALTH_OK), if there are warnings (HEALTH_WARN), or even errors (HEALTH_ERR). If the cluster is in an unhealthy state, the status commands below will also give you an overview of the current events and actions to take. To stop their execution, press CTRL-C.

Continuously watch the cluster status:

watch ceph --status

Print the cluster status once (not being updated) and continuously append lines of status events:

ceph --watch

Troubleshooting

This section includes frequently used troubleshooting information. More information can be found on the official Ceph website under Troubleshooting [18].

-

Disk Health Monitoring

-

System → System Log or via the CLI, for example of the last 2 days:

journalctl --since "2 days ago" -

IPMI and RAID controller logs

Ceph service crashes can be listed and viewed in detail by running the following commands:

ceph crash ls ceph crash info <crash_id>

Crashes marked as new can be acknowledged by running:

ceph crash archive-all

To get a more detailed view, every Ceph service has a log file under /var/log/ceph/. If more detail is required, the log level can be adjusted [19].

-

Network problems like congestion, a faulty switch, a shut down interface or a blocking firewall. Check whether all Proxmox VE nodes are reliably reachable on the corosync cluster network and on the Ceph public and cluster network.

-

Disk or connection parts which are:

-

defective

-

not firmly mounted

-

lacking I/O performance under higher load (e.g. when using HDDs, consumer hardware or inadvisable RAID controllers)

-

-

Not fulfilling the recommendations for a healthy Ceph cluster.

-

- OSDs down/crashed

-

A faulty OSD will be reported as down and mostly (auto) out 10 minutes later. Depending on the cause, it can also automatically become up and in again. To try a manual activation via web interface, go to Any node → Ceph → OSD, select the OSD and click on Start, In and Reload. When using the shell, run following command on the affected node:

ceph-volume lvm activate --all

To activate a failed OSD, it may be necessary to safely reboot the respective node or, as a last resort, to recreate or replace the OSD.

Copyright and Disclaimer

Copyright © 2007-2022 Proxmox Server Solutions GmbH

This program is free software: you can redistribute it and/or modify it under the terms of the GNU Affero General Public License as published by the Free Software Foundation, either version 3 of the License, or (at your option) any later version.

This program is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU Affero General Public License for more details.

You should have received a copy of the GNU Affero General Public License along with this program. If not, see https://www.gnu.org/licenses/